Software Testing Interview Preparation Guide

1. 250+ Technical Interview Questions & Answers

- Software Testing Fundamentals (35 Questions)

- Manual Testing Deep Dive (40 Questions)

- Testing Types and Techniques (45 Questions)

- Non-Functional Testing (25 Questions)

- Agile and Scrum Methodology (25 Questions)

- Automation Testing Basics (30 Questions)

Section 1: Software Testing Fundamentals (35 Questions)

Q1. What is software testing in simple terms?

Software testing is like being a detective who checks if a product works properly before it reaches customers. Imagine you bought a new phone and some features don’t work – frustrating, right? Testers prevent this by finding problems before users do. They verify that the software behaves as expected, matches requirements, and delivers a good experience to end-users.

Q2. Why do we need software testing?

Testing saves companies from embarrassment and financial losses. Think about a banking app that transfers wrong amounts or a shopping website that charges customers twice. These mistakes damage reputation and cost money. Testing catches these issues early when they’re cheaper to fix. It ensures quality, builds customer trust, and protects the brand image.

Q3. What are the main goals of software testing?

The primary goals include finding defects before users do, verifying that requirements are met, ensuring the product is reliable and secure, validating user experience, and giving stakeholders confidence in the product quality. Testing also helps in understanding risks and making informed decisions about releases.

Q4. Explain Software Development Life Cycle in your own words.

SDLC is the journey a piece of software takes from idea to reality. It starts with gathering requirements – understanding what users need. Then comes design – planning how to build it. Next is development – actually writing the code. After that comes testing – checking if everything works. Finally, deployment – releasing it to users, followed by maintenance – fixing issues and adding improvements.

Q5. What are the different phases of SDLC?

The typical phases are Requirements Gathering, System Design, Implementation or Coding, Testing, Deployment, and Maintenance. Each phase has specific activities and deliverables. Some companies follow the phases strictly in sequence, while others, such as Agile teams, overlap these phases in short cycles called sprints.

Q6. What is STLC and how is it different from SDLC?

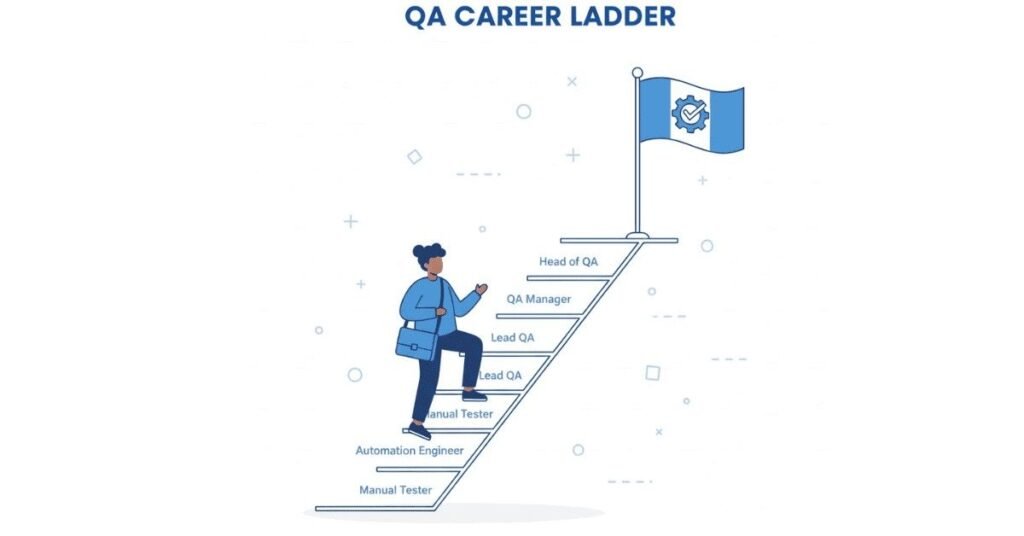

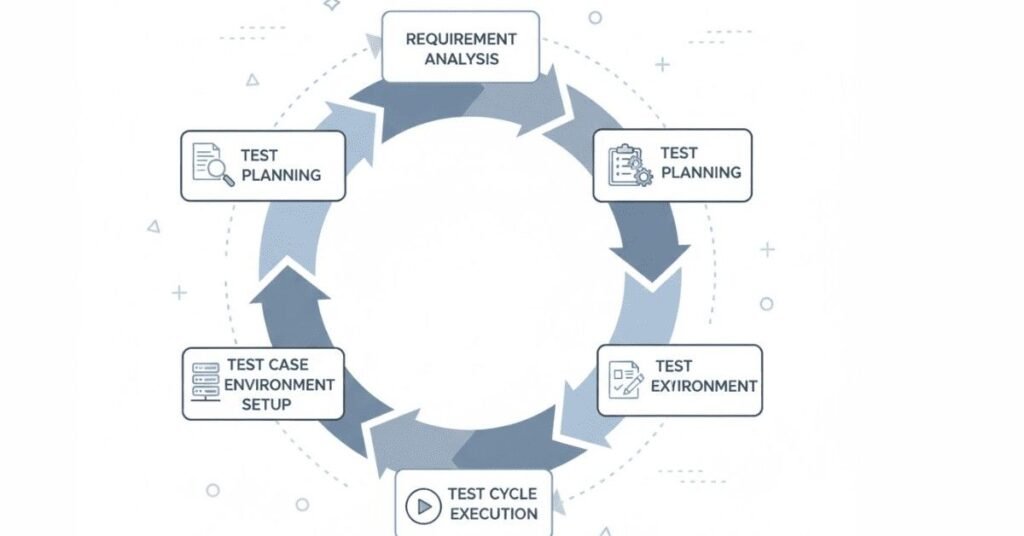

STLC stands for Software Testing Life Cycle – it’s the specific journey that testing activities follow. While SDLC covers the entire software creation process, STLC focuses only on testing. STLC includes phases like test planning, test design, test execution, and test closure. Think of SDLC as building a house, and STLC as specifically inspecting that house for quality.

Q7. What are the phases of STLC?

STLC has six main phases: Requirement Analysis (understanding what to test), Test Planning (deciding how to test), Test Case Development (creating test scenarios and test cases), Test Environment Setup (preparing the hardware, software, network, and test data needed), Test Execution (actually running tests), and Test Closure (documenting results and lessons learned).

Q8. What is the difference between verification and validation?

Verification asks “Are we building the product right?” – checking if we’re following the plan correctly. Validation asks “Are we building the right product?” – checking if what we built actually solves the user’s problem. Verification is done mostly through reviews, walkthroughs, and inspections throughout development; validation usually involves running the software against real user needs, typically later in the cycle. Think of verification as checking the recipe while cooking, and validation as tasting the final dish.

Q9. What is a test plan and why is it important?

A test plan is your roadmap for testing. It documents what you’ll test, how you’ll test it, who will do it, what tools you’ll use, and how much time it will take. It’s like planning a trip – you decide destinations, routes, budget, and timeline. Without a test plan, testing becomes chaotic and important things get missed.

Q10. What should a good test plan contain?

A comprehensive test plan includes test objectives, scope (what’s included and excluded), test strategy, resources needed, schedule, entry and exit criteria, risk analysis, deliverables, and approval signatures. It should also mention the testing tools, environment details, and roles and responsibilities of team members.

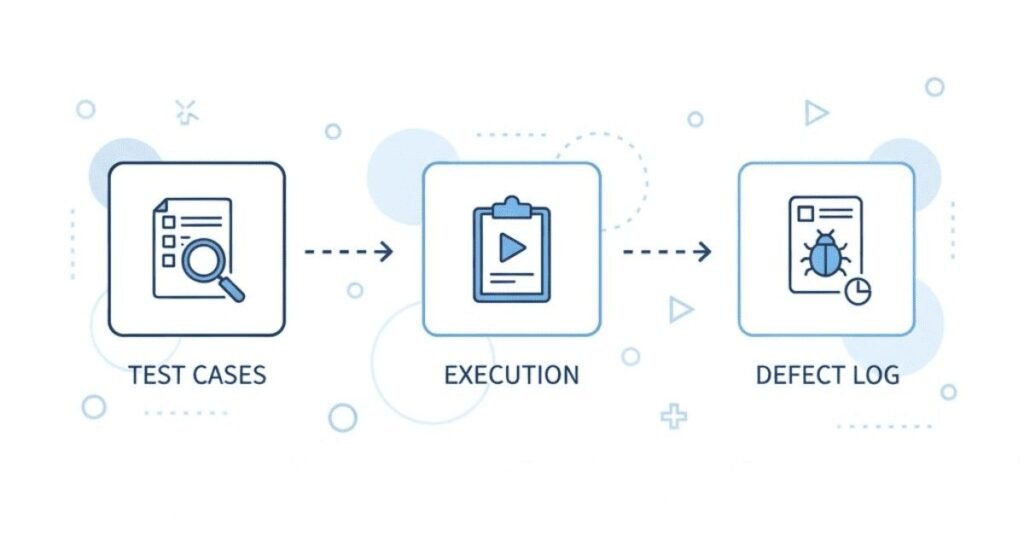

Q11. What is a test case?

A test case is a set of step-by-step instructions to check if a specific feature works correctly. It includes preconditions (what needs to be ready), test steps (what to do), test data (what information to use), expected results (what should happen), and actual results (what actually happened). It’s like following a recipe with exact measurements.

Q12. What are the components of a good test case?

Every test case should have a unique Test Case ID, a clear title, description, preconditions, test steps written in simple language, test data values, expected results for each step, actual results section, pass or fail status, priority level, and the name of who created it. Additional fields might include execution date and environment details.

Q13. What is the difference between test scenario and test case?

A test scenario is a high-level description of what to test – like “verify login functionality.” A test case is the detailed step-by-step instruction – like “enter username, enter password, click login button, verify dashboard appears.” One scenario can have multiple test cases. Scenarios are the “what,” test cases are the “how.”

Q14. What is a bug or defect?

A bug is when software doesn’t behave as expected or required. It could be a feature not working, something crashing, incorrect calculations, poor performance, or bad user experience. Bugs happen due to coding errors, misunderstood requirements, environment issues, or integration problems. Finding and reporting bugs is a tester’s core responsibility.

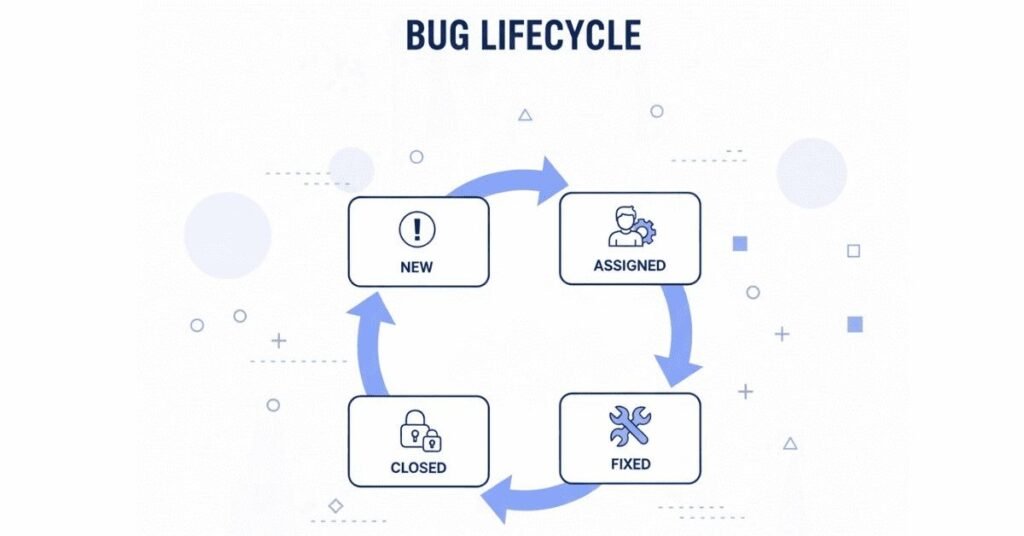

Q15. What is the bug life cycle?

The bug life cycle tracks a defect’s journey from discovery to closure. It starts when a tester finds and reports it (New). A lead reviews it and assigns it to a developer (Assigned). The developer fixes it (Fixed). The tester then retests the fix: if it works, the bug is marked Verified and then Closed; if the issue persists, it is Reopened and sent back to the developer. The cycle continues until the bug is properly fixed. Many teams also use statuses such as Rejected, Duplicate, or Deferred.
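The life cycle above can be modeled as a small state machine. This is a minimal Python sketch, assuming only the statuses named in this answer; the `TRANSITIONS` table and the `move` helper are hypothetical, and real bug trackers let each team configure its own workflow.

```python
# Allowed status changes in a simple bug life cycle (an assumed workflow;
# real trackers let teams configure their own statuses and transitions).
TRANSITIONS = {
    "New": {"Assigned"},
    "Assigned": {"Fixed"},
    "Fixed": {"Verified", "Reopened"},  # outcome of the tester's retest
    "Verified": {"Closed"},
    "Reopened": {"Assigned"},           # back to a developer
    "Closed": set(),
}


def move(current: str, target: str) -> str:
    """Return the new status, or raise ValueError if the change is not allowed."""
    if target not in TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move a bug from {current} to {target}")
    return target


# A bug whose first fix fails retesting and whose second fix passes.
status = "New"
history = [status]
for step in ["Assigned", "Fixed", "Reopened", "Assigned", "Fixed", "Verified", "Closed"]:
    status = move(status, step)
    history.append(status)
print(" -> ".join(history))
```

Encoding the allowed transitions as data makes it easy to test the workflow itself, for example by checking that a bug can never jump from New straight to Closed.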

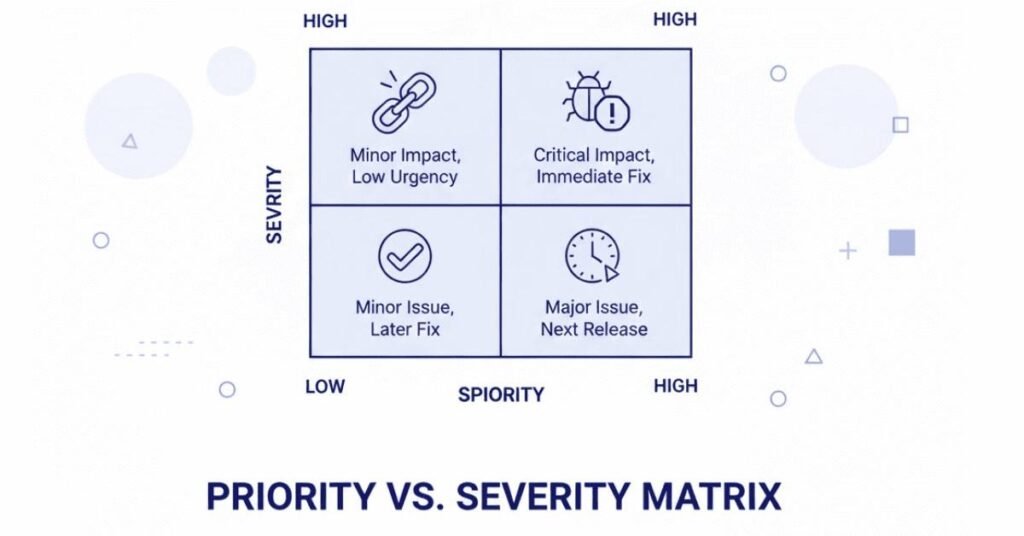

Q16. What is the difference between severity and priority?

Severity measures how serious the bug’s impact is on the system – how badly it breaks functionality. Priority measures how urgently it needs to be fixed from a business perspective. A high severity bug might have low priority if it occurs in a rarely used feature. A low severity cosmetic issue might have high priority before a major product launch.

Q17. Give examples of severity levels.

Critical Severity: Application crashes, data loss, security breaches. High Severity: Major features not working, incorrect calculations. Medium Severity: Minor features failing, workarounds available. Low Severity: Cosmetic issues, spelling mistakes, minor UI problems. The categorization helps teams decide which bugs to fix first.

Q18. Give examples of priority levels.

High Priority: Bugs blocking release, affecting the majority of users, or causing legal compliance issues. Medium Priority: Bugs affecting some users, features with workarounds. Low Priority: Nice-to-have fixes, minor improvements, cosmetic changes. Priority is typically set by product managers and business stakeholders (often during defect triage) based on impact and deadlines.

Q19. What is RTM in testing?

RTM stands for Requirements Traceability Matrix. It’s a document that maps requirements to test cases, ensuring every requirement is tested and every test case links back to a requirement. It helps track coverage and ensures nothing is missed. Think of it as a checklist that proves you’ve tested everything that was requested.

Q20. Why is RTM important?

RTM ensures complete test coverage, helps identify missing requirements or tests, provides traceability for audits and compliance, helps impact analysis when requirements change, and gives stakeholders confidence that all requirements are validated. It’s especially important in regulated industries like healthcare and finance.

Q21. What is a test strategy?

A test strategy is the high-level approach to testing for an entire organization or project. It defines testing objectives, types of testing to perform, tools to use, the risk management approach, and the overall testing philosophy. Unlike a test plan, which is project-specific, a test strategy is more permanent and can apply across multiple projects.

Q22. What is the difference between test plan and test strategy?

Test strategy is the big picture: the organization’s approach to testing across all projects. A test plan is the specific plan for one project. A strategy is created once and updated rarely, while plans are created for each project. The strategy is usually owned by QA leadership or a senior test manager; plans are written by each project’s test manager or test lead.

Q23. What is entry criteria in testing?

Entry criteria are conditions that must be met before testing can begin. Examples include: the test environment is ready, test data is available, test cases are written and reviewed, the build is deployed and stable, and the necessary access permissions are granted. Entry criteria prevent teams from starting testing before they’re ready, which wastes time.

Q24. What is exit criteria in testing?

Exit criteria define when testing is complete and the product is ready to move forward. Examples include: all planned test cases have been executed, critical bugs are fixed and verified, test coverage meets the target percentage, no high-priority bugs remain open, and stakeholder approval has been obtained. Exit criteria prevent premature releases and ensure quality standards are met.

Q25. What is a test environment?

A test environment is a setup that mimics the real production environment where users will use the software. It includes servers, databases, networks, devices, and configurations. Having a proper test environment is crucial because bugs might only appear in specific setups. Testers need environments that closely match where customers will use the product.

Q26. What is a defect report?

A defect report is a formal document describing a bug found during testing. It includes a unique defect ID, summary, detailed description, steps to reproduce, actual versus expected results, screenshots or videos, severity, priority, environment details, and assigned developer. Good defect reports help developers understand and fix issues quickly.

Q27. What makes a good defect report?

A good defect report is clear, concise, and reproducible. It has a descriptive title, detailed steps that anyone can follow, specific expected and actual results, relevant screenshots, mentions the environment and build version, and uses professional language without blame. The goal is helping developers fix the issue, not criticizing their work.

Q28. What is configuration management in testing?

Configuration management is tracking and controlling changes to software, test cases, test data, and test environments. It ensures everyone works with the correct versions, changes are documented, and you can roll back if needed. Tools like Git, SVN, or TFS help with configuration management.

Q29. What is risk-based testing?

Risk-based testing prioritizes testing efforts based on the risk level of different features. High-risk areas get more testing attention. Risk is determined by considering probability of failure and impact of failure. For example, payment processing is high risk, so it gets thorough testing. A help text typo is low risk.
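A common way to make risk-based testing concrete is to score each feature as probability × impact and test the highest scores first. The sketch below assumes a 1 to 5 scale for both factors; the feature names and scores are illustrative, not taken from a real project.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    probability: int  # likelihood of failure, 1 (rare) to 5 (likely): assumed scale
    impact: int       # business impact of failure, 1 (minor) to 5 (severe)

    @property
    def risk(self) -> int:
        # Simple risk model: probability x impact.
        return self.probability * self.impact


features = [
    Feature("Help text", probability=2, impact=1),
    Feature("Payment processing", probability=4, impact=5),
    Feature("Product search", probability=3, impact=3),
    Feature("Login", probability=3, impact=5),
]

# Highest risk first: these features get the most thorough testing.
for feature in sorted(features, key=lambda item: item.risk, reverse=True):
    print(f"{feature.name:<20} risk={feature.risk}")
```

With these illustrative scores, payment processing (20) and login (15) come first and the help text (2) comes last, matching the intuition in the answer above.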

Q30. What are the principles of software testing?

Seven key principles guide testing: (1) Testing shows the presence of defects, not their absence. (2) Exhaustive testing is impossible. (3) Early testing saves time and money. (4) Defects cluster together in certain modules. (5) Tests wear out and need updating (the “pesticide paradox”). (6) Testing is context-dependent. (7) Absence of errors doesn’t mean the software is usable (the “absence-of-errors fallacy”).

Q31. What is static testing?

Static testing examines documents, code, and designs without executing the program. It includes reviews, walkthroughs, inspections, and static analysis tools. Think of it as proofreading a book versus reading it aloud. Static testing finds issues in requirements and design early, preventing costly fixes later.

Q32. What is dynamic testing?

Dynamic testing involves running the actual software with test data and checking the output. It includes all types of testing where the application is executed – functional testing, performance testing, security testing. If you’re clicking buttons and entering data in the application, you’re doing dynamic testing.

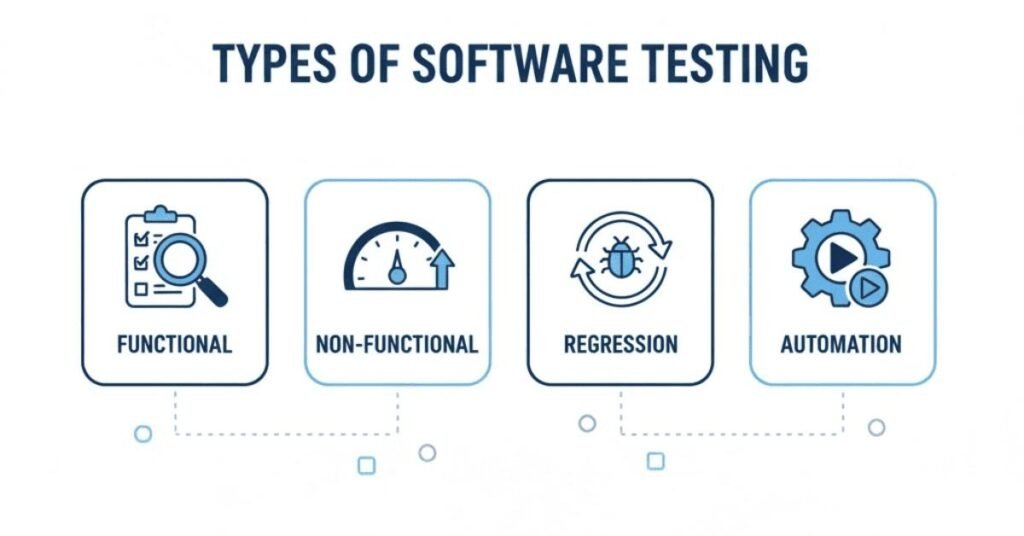

Q33. What is the difference between functional and non-functional testing?

Functional testing checks what the system does – features and functions working correctly. Non-functional testing checks how well the system does it – performance, security, usability, reliability. Functional asks “Does login work?” Non-functional asks “How fast is login? Is it secure? Is it user-friendly?”

Q34. What is positive and negative testing?

Positive testing uses valid inputs to verify the system works as expected. Negative testing uses invalid inputs to verify the system handles errors gracefully. For login, positive testing uses correct credentials. Negative testing tries wrong passwords, blank fields, special characters, SQL injection attempts, etc.
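Here is a minimal sketch of positive and negative checks for a login function. The `login` function and the `USERS` dictionary are hypothetical stand-ins for a real system; in this toy version the SQL injection string is simply treated as an unknown username, so that case only shows where such an input belongs in a test suite.

```python
# Hypothetical user store and login check, used only to illustrate the idea.
USERS = {"alice": "S3cure!pass"}


def login(username: str, password: str) -> bool:
    """Return True only for a known username with the matching password."""
    if not username or not password:
        return False
    return USERS.get(username) == password


# Positive test: valid input, expected to succeed.
assert login("alice", "S3cure!pass")

# Negative tests: invalid input must be rejected gracefully (no crash).
negative_cases = [
    ("alice", "wrong-password"),  # wrong password
    ("", ""),                     # blank fields
    ("bob", "S3cure!pass"),       # unknown user
    ("alice' OR '1'='1", "x"),    # SQL injection attempt
]
for username, password in negative_cases:
    assert not login(username, password), f"accepted invalid input: {username!r}"
print("positive and negative login checks passed")
```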

Q35. What is exploratory testing?

Exploratory testing is testing without predefined test cases – the tester explores the application like an end user, using creativity and intuition to find bugs. It’s like exploring a new city without a map versus following a guided tour. Both approaches have value. Exploratory testing often finds unexpected issues that scripted tests miss.

Section 2: Manual Testing Deep Dive (40 Questions)

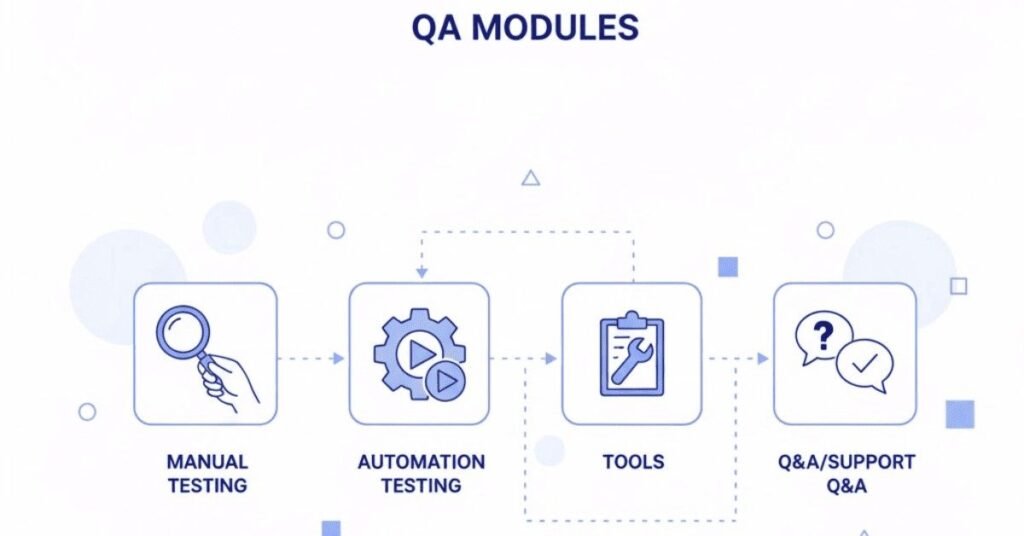

Q36. What is manual testing?

Manual testing means a human tester manually executes test cases without using automation tools. The tester acts like a real user, clicking buttons, entering data, navigating pages, and checking results. It’s like checking homework yourself versus using an automated grading tool. Manual testing is essential for usability, user experience, and exploratory testing.

Q37. What are the advantages of manual testing?

Manual testing provides human insight into user experience that automation cannot replicate. It’s flexible, doesn’t require programming skills, works well for exploratory testing and usability evaluation, is cost-effective for small projects, and adapts quickly to changes. Testers can spot visual issues and use intuition to find unexpected problems.

Q38. What are the disadvantages of manual testing?

Manual testing is time-consuming, prone to human error, and tedious for repetitive tests. It can’t easily handle high volumes of data, performance or load testing is hard to do manually, and maintaining test documentation is challenging. For regression testing with hundreds of test cases, manual execution becomes impractical and expensive.

Q39. When should you choose manual testing over automation?

Choose manual testing for usability testing, exploratory testing, ad-hoc testing, when requirements change frequently, for short-term projects, when budget is limited, for testing user experience and visual appeal, and when test cases won’t be repeated many times. If something requires human judgment, manual testing is the way to go.

Q40. What is the role of a manual tester?

A manual tester analyzes requirements, creates test plans and test cases, sets up test environments, executes tests, reports bugs, verifies fixes, participates in requirement reviews, provides feedback on usability, maintains test documentation, and communicates with developers and stakeholders. They’re quality advocates ensuring the product meets standards.

Q41. How do you write effective test cases?

Start by understanding requirements thoroughly. Identify test scenarios covering different aspects. Write clear, step-by-step instructions that anyone can follow. Use specific test data. Define expected results precisely. Make test cases reusable and maintainable. Include both positive and negative scenarios. Review with peers. Keep language simple and unambiguous.

Q42. What is a test case design technique?

Test case design techniques are methods for creating test cases systematically, achieving good coverage with as few test cases as possible. They include equivalence partitioning, boundary value analysis, decision table testing, state transition testing, and use case testing. These techniques help identify the most important test scenarios.

Q43. Explain boundary value analysis with an example.

Boundary Value Analysis focuses on testing values at boundaries since errors often occur there. For a field accepting age 18-60, instead of testing random values, test boundary values: 17 (just below), 18 (minimum), 19 (just above minimum), 59 (just below maximum), 60 (maximum), 61 (just above). This catches boundary-related bugs efficiently.
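The six boundary values from the age example can be generated mechanically. This sketch assumes an inclusive integer range; `boundary_values` and the `is_valid_age` rule (accepting 18 to 60) are hypothetical names used for illustration.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Six-value boundary set for an inclusive integer range [minimum, maximum]."""
    return [
        minimum - 1,  # just below the minimum (invalid)
        minimum,      # the minimum (valid)
        minimum + 1,  # just above the minimum (valid)
        maximum - 1,  # just below the maximum (valid)
        maximum,      # the maximum (valid)
        maximum + 1,  # just above the maximum (invalid)
    ]


def is_valid_age(age: int) -> bool:
    # Hypothetical rule under test: ages 18 to 60 inclusive are accepted.
    return 18 <= age <= 60


for age in boundary_values(18, 60):
    print(age, "accepted" if is_valid_age(age) else "rejected")
```

A common off-by-one bug, such as writing `18 < age` instead of `18 <= age`, would be caught by the value 18 in this set.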

Q44. Explain equivalence partitioning with an example.

Equivalence Partitioning divides input data into groups where all values should behave similarly. For age 18-60, we have three partitions: below 18, between 18-60, above 60. Instead of testing all ages, pick one representative value from each partition. This reduces test cases while maintaining coverage.
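A minimal sketch of the same age example: each partition is a range of values, and one representative is picked from each. The lower limit of 0 and upper limit of 120 are assumptions made only so the invalid partitions are finite; `is_valid_age` is a hypothetical rule under test.

```python
def is_valid_age(age: int) -> bool:
    # Hypothetical rule under test: ages 18 to 60 inclusive are accepted.
    return 18 <= age <= 60


# One partition per class of input; the 0 and 120 limits are assumptions for the sketch.
PARTITIONS = {
    "below 18 (invalid)": range(0, 18),
    "18 to 60 (valid)": range(18, 61),
    "above 60 (invalid)": range(61, 121),
}


def representative(values: range) -> int:
    """Any member should behave like the rest, so pick the middle value."""
    return values[len(values) // 2]


for name, values in PARTITIONS.items():
    age = representative(values)
    print(f"{name}: test age {age} -> accepted={is_valid_age(age)}")
```

Three test values stand in for 121 possible ages. Equivalence partitioning is usually combined with boundary value analysis, since the partition edges are where bugs tend to hide.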

Q45. What is decision table testing?

Decision table testing is useful when the system behavior changes based on multiple conditions. Create a table with all possible condition combinations and corresponding actions. For loan eligibility based on age, income, and credit score, the decision table shows all combinations and whether the loan is approved or rejected.
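The loan example can be turned into a decision table by enumerating every combination of conditions. In this sketch each condition is reduced to pass/fail, and the rules inside `loan_decision` (including the "Manual review" outcome) are invented for illustration; a real table would come from the business requirements.

```python
from itertools import product


def loan_decision(age_ok: bool, income_ok: bool, credit_ok: bool) -> str:
    # Hypothetical business rules, invented for this sketch.
    if not age_ok:
        return "Reject"
    if income_ok and credit_ok:
        return "Approve"
    if credit_ok:
        return "Manual review"
    return "Reject"


# Each row of the decision table is one combination of conditions (2^3 = 8 rules).
print(f"{'Age OK':<8}{'Income OK':<11}{'Credit OK':<11}Action")
for age_ok, income_ok, credit_ok in product([True, False], repeat=3):
    action = loan_decision(age_ok, income_ok, credit_ok)
    print(f"{age_ok!s:<8}{income_ok!s:<11}{credit_ok!s:<11}{action}")
```

Each printed row becomes one test case: set up an applicant matching the conditions and check that the system takes the listed action.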

Q46. What is state transition testing?

State transition testing is used when the system changes states based on events. Like an ATM card: Active state → enter wrong PIN three times → Blocked state → contact bank → Active state again. You test all possible state changes and verify the system responds correctly to events in each state.
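The ATM card example can be sketched as a tiny state machine with tests for each transition. The `AtmCard` class is hypothetical and assumes that three consecutive wrong PINs block the card and that a correct PIN resets the counter.

```python
class AtmCard:
    """Hypothetical card model: three consecutive wrong PINs block the card."""

    MAX_ATTEMPTS = 3

    def __init__(self, pin: str):
        self._pin = pin
        self.state = "Active"
        self.failed_attempts = 0

    def enter_pin(self, pin: str) -> bool:
        if self.state == "Blocked":
            return False                  # no PIN is accepted while blocked
        if pin == self._pin:
            self.failed_attempts = 0      # a correct PIN resets the counter
            return True
        self.failed_attempts += 1
        if self.failed_attempts >= self.MAX_ATTEMPTS:
            self.state = "Blocked"
        return False

    def unblock_by_bank(self) -> None:
        self.state = "Active"
        self.failed_attempts = 0


# Test the transitions: Active -> Blocked -> Active.
card = AtmCard(pin="1234")
for _ in range(3):
    card.enter_pin("0000")
assert card.state == "Blocked"
assert not card.enter_pin("1234")   # even the right PIN is refused when blocked
card.unblock_by_bank()
assert card.state == "Active" and card.enter_pin("1234")
print("state transitions behaved as expected")
```

Note the invalid-event check in the middle: a good state transition test also verifies that an event is rejected in a state where it should not be accepted.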

Q47. What is use case testing?

Use case testing creates test cases from user scenarios or use cases. It focuses on real-world user behavior. For example, an e-commerce use case: browse products → add to cart → apply coupon → proceed to checkout → enter shipping details → select payment → place order. Test cases follow this user journey.

Q48. How do you prioritize test cases?

Prioritize based on business criticality, usage frequency, risk level, and customer impact. High priority goes to critical features like payment processing, frequently used features like login, high-risk areas, and features mentioned in the release notes. Low priority goes to rarely used features and minor cosmetic elements.

Q49. What is test case review?

Test case review is when peers or leads examine test cases before execution to find gaps, redundancies, or errors. It ensures test cases align with requirements, are clear and executable, have good coverage, and follow standards. Reviews improve quality and catch issues early, saving execution time.

Q50. What is a test suite?

A test suite is a collection of related test cases grouped together for execution. You might have a login test suite, payment test suite, or regression test suite. Organizing test cases into suites makes management easier and allows running specific groups of tests based on needs.

Q51. What is test data and why is it important?

Test data is the input values used in test cases. Good test data is crucial for effective testing. It should cover valid data, invalid data, boundary values, special characters, null values, and large data sets. Using realistic test data that mimics production data helps find real-world issues.

Q52. How do you manage test data?

Test data management involves creating, storing, maintaining, and disposing of test data. Use techniques like data masking for sensitive information, create reusable data sets, document data dependencies, automate data creation where possible, and ensure data is available in test environments before testing begins.

Q53. What is a traceability matrix and how do you create it?

A traceability matrix maps requirements to test cases. Create it in Excel or testing tools with columns: Requirement ID, Requirement Description, Test Case IDs, Status. Each requirement should link to one or more test cases. This ensures no requirement is missed and every test case has a purpose.
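The same matrix can be built in a few lines of code, which also makes gaps easy to spot. The requirement and test case IDs below are hypothetical; the sketch flags requirements with no tests and tests linked to no requirement.

```python
# Hypothetical requirement and test case IDs, for illustration only.
requirements = {
    "REQ-001": "User can log in with valid credentials",
    "REQ-002": "User can reset a forgotten password",
    "REQ-003": "User can log out",
}
test_cases = {
    "TC-101": ["REQ-001"],
    "TC-102": ["REQ-001"],
    "TC-103": ["REQ-002"],
    "TC-104": [],  # not linked to any requirement
}

# Build the matrix: requirement ID -> test cases that cover it.
matrix = {req_id: [] for req_id in requirements}
for tc_id, linked in test_cases.items():
    for req_id in linked:
        matrix[req_id].append(tc_id)

for req_id, tcs in matrix.items():
    print(f"{req_id}  {requirements[req_id]:<40} {', '.join(tcs) or 'NOT COVERED'}")

uncovered = [r for r, tcs in matrix.items() if not tcs]
orphans = [tc for tc, linked in test_cases.items() if not linked]
coverage = 100 * (len(requirements) - len(uncovered)) / len(requirements)
print(f"Requirement coverage: {coverage:.0f}%, uncovered={uncovered}, orphan tests={orphans}")
```

Here REQ-003 has no test case and TC-104 has no requirement, exactly the two kinds of gap the matrix is meant to expose.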

Q54. What is the difference between test case and test script?

A test case is a document with steps, data, and expected results written for human testers. A test script is executable code written for automation tools. Test cases can be executed manually or converted into test scripts for automation. Test scripts use programming languages and automation frameworks.

Q55. What is smoke testing?

Smoke testing is a quick check to verify the build is stable enough for detailed testing. It’s like checking if a device powers on before testing all features. Smoke tests cover critical paths – can users log in, access main features, perform basic operations. If smoke tests fail, the build is rejected immediately.

Q56. What is sanity testing?

Sanity testing is a quick check after receiving a build with specific bug fixes or small changes. You verify that the fixes work and didn’t break related functionality. It’s narrower and deeper than smoke testing. If a login bug was fixed, sanity testing thoroughly tests login and related features.

Q57. What is the difference between smoke and sanity testing?

Smoke testing is broad and shallow, checking overall build stability across major features. Sanity testing is narrow and deep, focusing on specific changed areas. Smoke happens at the beginning of testing cycles. Sanity happens after bug fixes. Both are types of quick testing to save time on unstable builds.

Q58. What is regression testing?

Regression testing ensures new changes didn’t break existing functionality. When developers add features or fix bugs, there’s risk of breaking something that worked before. Regression testing reruns previously passed test cases to catch these unintended side effects. It’s essential after every code change.

Q59. What is the difference between re-testing and regression testing?

Re-testing verifies a specific bug fix – testing the exact scenario that failed before to confirm it now works. Regression testing checks if the fix broke anything else – running broader test suites to ensure existing features still work. Re-testing is focused, regression testing is broad.

Q60. What is ad-hoc testing?

Ad-hoc testing is informal testing without planning or documentation. Testers randomly test the application trying to break it using intuition and creativity. It’s useful for finding unexpected issues but cannot be replicated. Think of it as freestyle testing versus choreographed testing.

Q61. What is monkey testing?

Monkey testing provides random inputs to the system trying to break it, like a monkey randomly pressing buttons. It checks system stability under unpredictable user behavior. It’s useful for finding crashes and unexpected errors but doesn’t follow any logic or test specific scenarios.

Q62. What is a test log?

A test log is a detailed record of test execution activities. It captures which test cases were executed, by whom, when, in which environment, what the result was, and any observations or issues. Test logs provide audit trails and help analyze testing progress and quality metrics.

Q63. What is a test summary report?

A test summary report is a document prepared at the end of testing summarizing all testing activities and results. It includes test objectives, scope, test cases executed, pass/fail counts, defects found, testing timelines, risks, and recommendations. It helps stakeholders make release decisions.

Q64. What is defect triage?

Defect triage is a meeting where the team reviews reported bugs, discusses their validity, assigns severity and priority, assigns them to developers, and decides which to fix and which to defer. It ensures efficient defect management and aligns the team on priorities.

Q65. What is defect leakage?

Defect leakage occurs when a bug that should have been caught in an earlier phase escapes to a later phase or to production. High defect leakage indicates testing gaps. It is commonly measured as the number of defects found in production divided by the total number of defects, expressed as a percentage; some teams divide by the defects found during testing instead. Organizations track this metric to improve testing effectiveness.

Q66. What is defect removal efficiency?

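Defect Removal Efficiency measures how effective testing is at finding bugs before release. Formula: DRE = (Defects found before release / Total defects) × 100, where total defects = defects found before release + defects found after release. If testing found 90 bugs and 10 reached production, DRE is 90 / (90 + 10) × 100 = 90%. A higher DRE means more effective testing; a DRE above 90% is a commonly cited target.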
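Both defect metrics can be computed from two counts. This sketch uses the DRE formula above and the defect leakage definition from Q65 (defects found in production divided by total defects); the function names are illustrative.

```python
def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """DRE = (defects found before release / total defects) x 100."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100 * found_before_release / total


def defect_leakage(found_before_release: int, found_after_release: int) -> float:
    """Leakage = (defects found in production / total defects) x 100."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100 * found_after_release / total


# Example from the text: 90 bugs found in testing, 10 reached production.
print(defect_removal_efficiency(90, 10))  # 90.0
print(defect_leakage(90, 10))             # 10.0
```

With this definition, DRE and leakage always add up to 100%. Teams that divide leakage by the defects found during testing would report 10 / 90 ≈ 11.1% for the same data.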

Q67. What is test coverage?

Test coverage measures how much of the application is tested. Types include requirement coverage (percentage of requirements tested), code coverage (percentage of code executed during testing), and test case coverage (percentage of scenarios covered). Higher coverage generally lowers the risk of untested defects, but it does not guarantee quality, and 100% is rarely achieved or necessary.

Q68. What are different types of test coverage?

Requirement coverage ensures all requirements have test cases. Feature coverage ensures all features are tested. Code coverage measures code executed during testing including statement coverage, branch coverage, and path coverage. Risk coverage ensures high-risk areas are thoroughly tested. Each coverage type provides different insights into testing completeness.

Q69. What is a test harness?

A test harness is a collection of software and test data configured to test a program unit by running it under varying conditions and monitoring its behavior and outputs. It includes test execution engine, test scripts, test data, and reporting mechanisms. Test harnesses automate test execution and result collection.

Q70. What is formal testing?

Formal testing follows documented processes, uses approved test plans and test cases, maintains proper documentation, and requires stakeholder approvals. It’s structured and traceable. The opposite is informal or ad-hoc testing. Formal testing is essential for regulated industries, large projects, and situations requiring audit trails.

Q71. What is a test oracle?

A test oracle is a mechanism to determine whether a test passed or failed. It could be requirements documentation, existing systems, manual calculations, or expert knowledge. For example, to verify interest calculation, you might manually calculate expected results or compare with an existing proven system.
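A minimal sketch of the interest example: the implementation under test is checked against an independent oracle, here the closed-form compound interest formula P × (1 + r)^n − P. Both functions are hypothetical, and the one-cent tolerance is an assumption to allow for rounding to cents.

```python
import math


def compound_interest(principal: float, annual_rate: float, years: int) -> float:
    """Implementation under test (hypothetical): applies interest year by year."""
    balance = principal
    for _ in range(years):
        balance += balance * annual_rate
    return round(balance - principal, 2)


def oracle_compound_interest(principal: float, annual_rate: float, years: int) -> float:
    """Oracle: the closed-form formula P * (1 + r)^n - P, an independent source of truth."""
    return principal * (1 + annual_rate) ** years - principal


for principal, rate, years in [(10_000, 0.05, 2), (2_500, 0.035, 5), (1_000, 0.0, 3)]:
    actual = compound_interest(principal, rate, years)
    expected = oracle_compound_interest(principal, rate, years)
    verdict = "PASS" if math.isclose(actual, expected, abs_tol=0.01) else "FAIL"
    print(f"P={principal} r={rate} n={years}: actual={actual} expected={expected:.2f} {verdict}")
```

The oracle is only useful because it is computed differently from the code under test; reusing the same loop as the "expected" value would hide any bug the two share.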

Q72. What is error guessing?

Error guessing is a test case design technique based on the tester’s experience and intuition about where defects might exist. Experienced testers know common error patterns such as off-by-one errors, null pointer issues, division by zero, and boundary problems. They create test cases targeting these likely problem areas.

Q73. What is test execution?

Test execution is the phase where testers run test cases on the application, compare actual results with expected results, report defects for failures, and mark test cases as pass, fail, blocked, or skipped. It’s the hands-on testing phase where testers interact with the software.

Q74. What is test closure?

Test closure is the final phase where testing is formally completed. Activities include evaluating test completion criteria, writing test summary reports, gathering metrics, documenting lessons learned, archiving test artifacts, and getting stakeholder sign-off. It formally ends the testing phase and captures knowledge for future projects.

Q75. What are metrics in testing?

Metrics are quantitative measures used to track and assess testing progress and quality. Common metrics include number of test cases executed, pass/fail percentage, defect density, defect severity distribution, test coverage percentage, test execution rate, and defect detection rate. Metrics help make data-driven decisions.

Section 3: Testing Types and Techniques (45 Questions)

Q76. What is white box testing?

White box testing examines the internal structure, code, and logic of the software. Testers need programming knowledge and access to source code. They test code paths, loops, conditions, and statements. It’s like inspecting a car’s engine versus just driving it. White box testing finds code-level bugs like logic errors and improper conditions.

Q77. What are white box testing techniques?

Key techniques include statement coverage (ensuring every line of code executes), branch coverage (testing all decision branches), path coverage (testing all possible paths through code), condition coverage (testing all boolean conditions), and loop testing (testing loops with zero, one, and multiple iterations).
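The difference between statement and branch coverage shows up even in a one-line `if`. In this sketch `apply_discount` is a hypothetical function under test; the comments explain which coverage each test achieves.

```python
def apply_discount(total: float, is_member: bool) -> float:
    """Hypothetical function under test: one decision, two branches."""
    if is_member:
        total = total * 0.9  # members get 10% off
    return total


def test_member_gets_discount():
    # Executes every statement (100% statement coverage) but only the
    # True branch of the `if`, so branch coverage is 50%.
    assert apply_discount(100.0, True) == 90.0


def test_non_member_pays_full_price():
    # Exercises the False branch (the `if` body is skipped);
    # together with the test above, branch coverage reaches 100%.
    assert apply_discount(100.0, False) == 100.0


test_member_gets_discount()
test_non_member_pays_full_price()
```

If the file is run as a pytest module, coverage.py can report both numbers with `coverage run --branch -m pytest` followed by `coverage report`.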

Q78. What is black box testing?

Black box testing examines functionality without knowing internal code or structure. Testers treat the system as a black box, providing inputs and verifying outputs against requirements. No programming knowledge needed. It focuses on what the system does, not how. Most manual testing is black box testing.

Q79. What are black box testing techniques?

Main techniques are equivalence partitioning (dividing inputs into groups), boundary value analysis (testing limits), decision table testing (testing condition combinations), state transition testing (testing state changes), use case testing (testing user scenarios), and error guessing (using experience to find bugs).

Q80. What is gray box testing?

Gray box testing combines white and black box approaches. Testers have partial knowledge of internal structure but test from an external perspective. For example, knowing database structure helps design better data testing, but testing happens through the application interface. It’s practical and commonly used.

Q81. What is unit testing?

Unit testing tests individual components or functions in isolation. Developers usually write unit tests for their code. Each function is tested separately with various inputs. For example, testing a calculation function with different numbers. Unit testing catches bugs early when they’re cheapest to fix.
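A minimal unit test sketch using Python’s built-in `unittest` module. The `calculate_average` function is a hypothetical unit under test; each test method checks it in isolation with a different kind of input.

```python
import unittest


def calculate_average(numbers: list[float]) -> float:
    """Hypothetical unit under test."""
    if not numbers:
        raise ValueError("numbers must not be empty")
    return sum(numbers) / len(numbers)


class CalculateAverageTests(unittest.TestCase):
    def test_typical_values(self):
        self.assertEqual(calculate_average([2, 4, 6]), 4)

    def test_single_value(self):
        self.assertEqual(calculate_average([7]), 7)

    def test_negative_and_decimal_values(self):
        self.assertAlmostEqual(calculate_average([-1.5, 2.5]), 0.5)

    def test_empty_list_is_rejected(self):
        with self.assertRaises(ValueError):
            calculate_average([])


# Run this test case programmatically and print a per-test report.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(CalculateAverageTests)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```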

Q82. What is integration testing?

Integration testing verifies that different modules or components work together correctly. After unit testing individual pieces, integration testing checks their interactions. For example, testing if the login module correctly integrates with the database module. Integration bugs often occur at interfaces between modules.

Q83. What are different integration testing approaches?

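The Big Bang approach integrates all modules at once and tests them together. The Top-Down approach tests high-level modules first, using stubs in place of lower-level modules that aren’t ready. The Bottom-Up approach tests low-level modules first, using drivers to call them in place of higher-level modules. The Sandwich (hybrid) approach combines top-down and bottom-up. Top-down, bottom-up, and sandwich are all incremental approaches, which add and test modules gradually rather than all at once.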
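Stubs and drivers are the scaffolding that makes the incremental approaches possible. In this sketch all classes and functions (`OrderService`, `PaymentGatewayStub`, `apply_tax`, `tax_driver`) are hypothetical: a stub replaces an unfinished lower-level module in top-down testing, and a driver calls a finished lower-level module in bottom-up testing.

```python
class PaymentGatewayStub:
    """Stub for an unfinished lower-level payment module (top-down).
    Returns a canned response instead of calling a real gateway."""

    def __init__(self, approve: bool = True):
        self.approve = approve
        self.calls: list[float] = []

    def charge(self, amount: float) -> bool:
        self.calls.append(amount)
        return self.approve


class OrderService:
    """High-level module under test (hypothetical)."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount: float) -> str:
        if amount <= 0:
            return "rejected"
        return "confirmed" if self.gateway.charge(amount) else "payment_failed"


# Top-down: test the high-level module with the stub in place of the real gateway.
stub = PaymentGatewayStub(approve=True)
assert OrderService(stub).place_order(49.99) == "confirmed"
assert stub.calls == [49.99]
assert OrderService(PaymentGatewayStub(approve=False)).place_order(10) == "payment_failed"


def apply_tax(amount: float, rate: float = 0.08) -> float:
    """Finished low-level module (hypothetical)."""
    return round(amount * (1 + rate), 2)


def tax_driver() -> None:
    # Bottom-up: throwaway code that calls the low-level module directly,
    # because the higher-level caller does not exist yet.
    for amount, expected in [(100.0, 108.0), (0.0, 0.0)]:
        assert apply_tax(amount) == expected
    print("driver: apply_tax checks passed")


tax_driver()
```

Once the real payment gateway and the real caller of `apply_tax` exist, the stub and driver are replaced and the same checks become true integration tests.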

Q84. What is system testing?

System testing tests the complete integrated system against requirements. It’s end-to-end testing of the entire application. Both functional and non-functional aspects are tested. System testing happens after integration testing and before user acceptance testing. It validates the complete product.

Q85. What is user acceptance testing?

UAT is testing by actual users or client representatives to verify the system meets business needs and is ready for production. It’s the final testing phase before release. Users test real-world scenarios to ensure the system solves their problems. UAT approval is often required for go-live decisions.

Q86. What is alpha testing?

Alpha testing is done by internal employees, usually the testing team or developers, at the development site before the software is released to external users. It simulates a real user environment and real usage. Alpha testing happens in a controlled environment and helps catch issues before a wider release.

Q87. What is beta testing?

Beta testing is done by actual customers or selected external users at their own locations. The software is released to a limited audience to test in real-world conditions. Feedback from beta users helps improve the product before full release. Many companies run public or private beta programs.

Q88. What is the difference between alpha and beta testing?

Alpha testing is internal, conducted at the developer’s site, in a controlled lab environment, with internal testers. Beta testing is external, at user sites, in real-world conditions, with actual customers. Alpha testing finds technical issues; beta testing validates market readiness and user satisfaction.

Q89. What is functional testing?

Functional testing verifies that each feature works according to requirements. It tests what the system does – can users log in, make purchases, generate reports, etc. Functional testing validates user actions, input processing, output generation, and business logic. It’s requirement-based testing.

Q90. What types of functional testing exist?

Types include unit testing, integration testing, system testing, smoke testing, sanity testing, regression testing, user acceptance testing, and interface testing. Each focuses on different aspects of functionality. Together they ensure the application works correctly from individual functions to complete workflows.

Q91. What is non-functional testing?

Non-functional testing evaluates how well the system performs rather than what it does. It tests quality attributes like performance, security, usability, reliability, scalability, and compatibility. Non-functional aspects often determine user satisfaction as much as features do.

Q92. What is performance testing?

Performance testing checks how the system performs under various conditions. It measures response times, throughput, resource usage, and stability. Performance testing ensures the application is fast enough, handles expected load, and remains stable. It answers questions like “How quickly does the page load?”

Q93. What are types of performance testing?

Load testing checks behavior under expected load. Stress testing pushes beyond limits to find breaking points. Spike testing checks sudden load increases. Endurance (soak) testing runs sustained load over a long period. Volume testing checks behavior with large volumes of data. Scalability testing verifies that the system can handle growing load as resources are added.

Q94. What is load testing?

Load testing simulates expected user load to verify the system handles it properly. For example, testing if a website works well with 1000 concurrent users. It measures response times, transaction rates, and resource utilization under normal and peak load conditions. Load testing prevents performance surprises at launch.
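A real load test uses a tool such as JMeter against a deployed system. The core idea can still be sketched in plain Python: run many requests concurrently, record each response time, and compute throughput. The `handle_request` function is a hypothetical stand-in that sleeps for about 10 ms instead of calling a server.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(_request_id: int) -> float:
    """Hypothetical system under test: sleeps ~10 ms to mimic server work.
    Returns the observed response time in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)
    return time.perf_counter() - start


def run_load_test(concurrent_users: int, requests_per_user: int) -> dict:
    total = concurrent_users * requests_per_user
    started = time.perf_counter()
    # At most `concurrent_users` requests are in flight at once,
    # approximating that many virtual users hitting the system.
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        timings = list(pool.map(handle_request, range(total)))
    elapsed = time.perf_counter() - started
    return {
        "requests": total,
        "avg_response_s": statistics.mean(timings),
        "max_response_s": max(timings),
        "throughput_rps": total / elapsed,
    }


if __name__ == "__main__":
    print(run_load_test(concurrent_users=20, requests_per_user=5))
```

Raising `concurrent_users` step by step and watching where response times start to climb turns the same idea into a stress test.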

Q95. What is stress testing?

Stress testing pushes the system beyond normal limits to find breaking points and observe failure behavior. It helps understand maximum capacity and how gracefully the system degrades under extreme conditions. For example, increasing users until the system crashes, then identifying the bottleneck.

Q96. What is volume testing?

Volume testing checks system behavior with large volumes of data. For example, testing database performance with millions of records, or checking if reports generate properly with huge data sets. Volume testing ensures the application handles data growth and identifies database performance issues.

Q97. What is security testing?

Security testing identifies vulnerabilities, threats, and risks in the application. It checks authentication, authorization, data encryption, SQL injection prevention, cross-site scripting protection, and compliance with security standards. Security testing protects sensitive data and prevents unauthorized access.

Q98. What are common security testing types?

Vulnerability scanning uses automated tools to find known vulnerabilities. Penetration testing involves ethical hackers attempting to break into the system. Security auditing reviews code and infrastructure for security issues. Risk assessment identifies potential threats. Each provides different security insights.

Q99. What is usability testing?

Usability testing evaluates how easy and intuitive the application is for users. Testers observe real users performing tasks, noting confusion, errors, and frustrations. It checks navigation, layout, content clarity, and overall user experience. Good usability means users can accomplish tasks efficiently without frustration.

Q100. What is compatibility testing?

Compatibility testing verifies the application works across different browsers, operating systems, devices, screen resolutions, and configurations. For example, testing a website on Chrome, Firefox, Safari, and Edge, on Windows, Mac, and Linux, on desktop, tablet, and mobile. Compatibility testing ensures broad accessibility.

Q101. What is UI testing?

UI testing validates the graphical user interface – buttons, menus, icons, layouts, colors, fonts, images, and overall visual design. It checks that UI elements appear correctly, are properly aligned, and match design specifications. UI testing also verifies that interface elements are functional and responsive.

Q102. What is database testing?

Database testing validates data integrity, data validity, stored procedures, triggers, and database performance. It involves checking data accuracy after transactions, schema validation, table and column constraints, backup and recovery procedures, and SQL query performance. Database testing ensures data is stored and retrieved correctly.

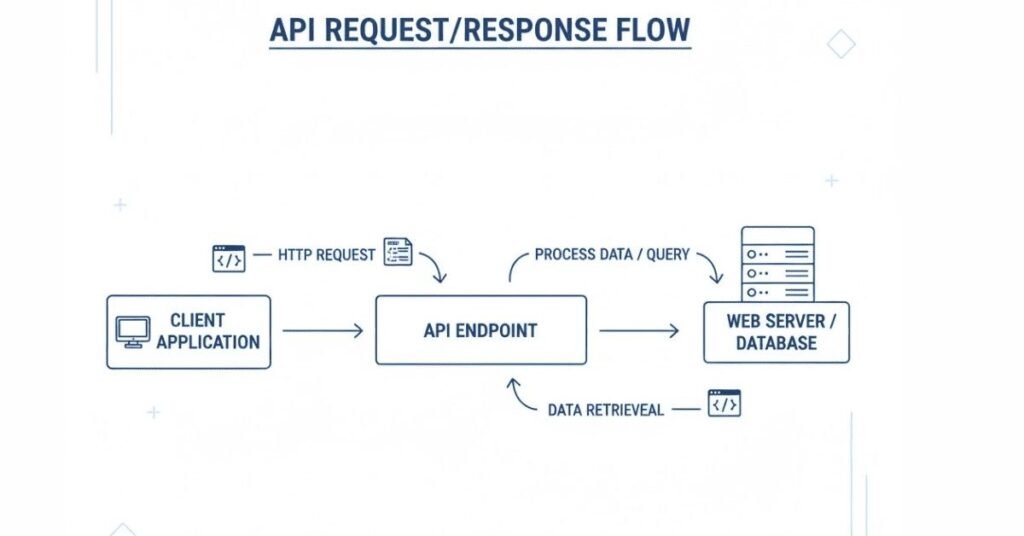

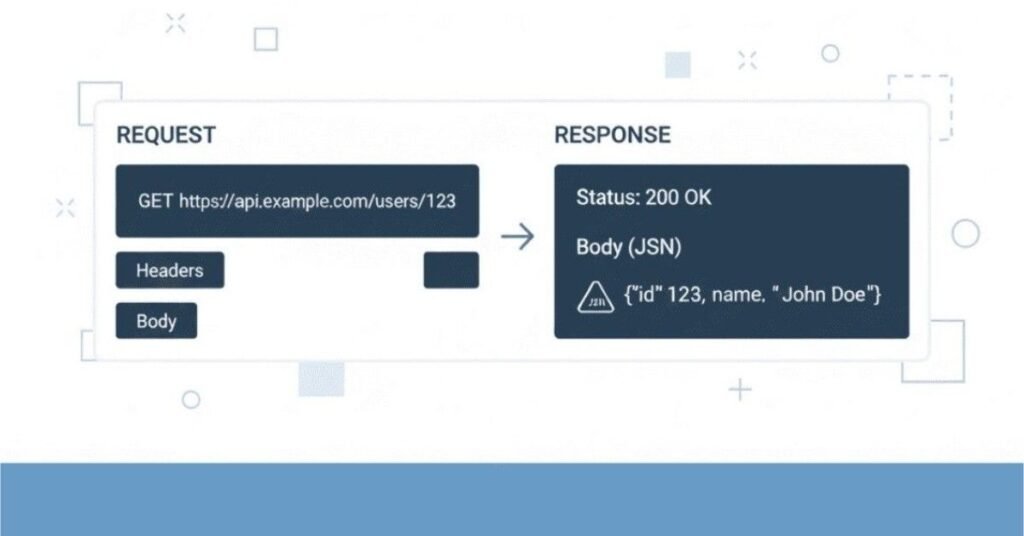

Q103. What is API testing?

API testing validates application programming interfaces – the connections between different software systems. It tests request-response cycles, data formats, error handling, security, and performance of APIs. API testing happens at the integration layer without a user interface, using tools like Postman or Rest Assured.

Q104. What is mobile testing?

Mobile testing validates applications on mobile devices. It includes functional testing of features, usability on small screens, performance on limited resources, battery consumption, network conditions (WiFi, 3G, 4G, 5G), interruptions (calls, messages), and compatibility across devices and OS versions.

Q105. What is accessibility testing?

Accessibility testing ensures applications are usable by people with disabilities. It checks screen reader compatibility, keyboard navigation, color contrast, text size adjustability, and compliance with standards like WCAG. Accessible applications are inclusive and often legally required for public-facing systems.

Q106. What is localization testing?

Localization testing verifies the application works properly for specific locales – languages, currencies, date formats, cultural norms. It checks translations, text expansion, regional settings, and local regulations. For example, testing a shopping app with Indian currency, date formats, and Hindi language.

Q107. What is globalization testing?

Globalization testing verifies the application works internationally without requiring code changes for different locales. It checks that the system supports multiple languages, currencies, time zones, and cultural conventions simultaneously. Globalization makes localization easier.

Q108. What is recovery testing?

Recovery testing checks how well the system recovers from crashes, hardware failures, or other disasters. It tests backup and restore procedures, failover mechanisms, and data recovery capabilities. Recovery testing ensures business continuity and minimal data loss during failures.

Q109. What is installation testing?

Installation testing verifies the software installs, upgrades, and uninstalls properly across different environments and configurations. It checks installation scripts, prerequisites, documentation accuracy, space requirements, and installation time. Proper installation testing prevents customer frustration during deployment.

Q110. What is configuration testing?

Configuration testing validates the application works correctly with different configuration settings and combinations of hardware and software. For example, testing accounting software with different tax configurations, or gaming software with different graphics settings.

Q111. What is compliance testing?

Compliance testing ensures the application meets industry standards, legal requirements, and regulatory guidelines. Examples include HIPAA for healthcare, PCI-DSS for payment processing, GDPR for data privacy. Compliance testing often requires documentation and certification.

Q112. What is exploratory testing and when to use it?

Exploratory testing is simultaneous learning, test design, and execution. Testers explore the application, learning as they go, designing tests on the fly. It’s useful for new features, finding edge cases, supplementing scripted tests, and when documentation is incomplete. Experienced testers excel at exploratory testing.

Q113. What is end-to-end testing?

End-to-end testing validates complete application workflows from start to finish, including all integrated components, databases, external interfaces, and networks. For example, testing an e-commerce journey from product search to order delivery notification, involving front-end, backend, payment gateway, and email systems.

Q114. What is interface testing?

Interface testing checks communication between application modules, APIs, databases, and external systems. It verifies data transfer, error handling, communication protocols, and compatibility between integrated components. Interface testing catches integration issues before full system testing.

Q115. What is mutation testing?

Mutation testing evaluates test suite quality by deliberately introducing small bugs (mutations) into the code and checking whether the tests catch them. If at least one test fails, the mutant is “killed” and the tests are doing their job. If all tests still pass, the mutant “survived”, which reveals a gap in the test suite. Mutation testing is an advanced technique and is typically automated.
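Here is a minimal sketch of a single mutant, based on a hypothetical `is_adult` rule (age 18 and over). The mutant changes `>=` to `>`, which is a typical mutation operator. The weak suite lets the mutant survive, and adding a boundary check at exactly 18 kills it. Tools such as PIT (Java) and mutmut (Python) generate and run mutants like this automatically.

```python
def is_adult(age: int) -> bool:
    """Original code: 18 and over counts as an adult."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """Mutant: the relational operator >= was changed to >."""
    return age > 18


def weak_suite(fn) -> bool:
    """Returns True if all checks pass. Misses the boundary value 18."""
    return fn(30) is True and fn(10) is False


def strong_suite(fn) -> bool:
    """Adds a boundary check at exactly 18."""
    return weak_suite(fn) and fn(18) is True


def mutant_killed(suite) -> bool:
    # Killed = the suite passes on the original code but fails on the mutant.
    return suite(is_adult) and not suite(is_adult_mutant)


if __name__ == "__main__":
    print("weak suite kills mutant:", mutant_killed(weak_suite))      # False
    print("strong suite kills mutant:", mutant_killed(strong_suite))  # True
```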

Q116. What is concurrency testing?

Concurrency testing checks how the application handles multiple users or processes accessing the same resources simultaneously. It tests race conditions, deadlocks, and data corruption under concurrent access. It is especially important for multi-user applications and databases.
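A race condition can be reproduced with a hypothetical shared `Account`. The unsafe deposit reads the balance, yields to other threads, and then writes, so concurrent updates can overwrite each other. The safe version wraps the same read-modify-write in a lock. Thread scheduling is nondeterministic, so the unsafe result varies from run to run. That variability is what makes such bugs hard to catch.

```python
import threading
import time


class Account:
    """Hypothetical shared resource updated by several threads."""

    def __init__(self) -> None:
        self.balance = 0
        self._lock = threading.Lock()

    def deposit_unsafe(self, amount: int) -> None:
        current = self.balance            # read
        time.sleep(0)                     # yield to other threads, widening the race window
        self.balance = current + amount   # write (may overwrite another thread's update)

    def deposit_safe(self, amount: int) -> None:
        with self._lock:                  # only one thread runs the read-modify-write at a time
            current = self.balance
            time.sleep(0)
            self.balance = current + amount


def run_concurrently(method_name: str, threads: int = 10, deposits: int = 100) -> int:
    account = Account()
    deposit = getattr(account, method_name)

    def worker() -> None:
        for _ in range(deposits):
            deposit(1)

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return account.balance


if __name__ == "__main__":
    print("expected:", 10 * 100)
    print("unsafe:", run_concurrently("deposit_unsafe"))  # often lower: lost updates
    print("safe:", run_concurrently("deposit_safe"))      # always equals expected
```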

Q117. What is internationalization testing?

Internationalization testing verifies the application can be adapted to various languages and regions without engineering changes. It’s similar to globalization testing but focuses more on the ability to support multiple locales rather than testing each locale specifically.

Q118. What is destructive testing?

Destructive testing attempts to break the application by providing invalid inputs, removing resources, killing processes, or creating extreme conditions. The goal is finding limits and observing failure modes. It’s similar to negative testing but more aggressive.

Q119. What is pairwise testing?

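Pairwise testing is a technique that covers every possible pair of values for any two input parameters, rather than every full combination. It significantly reduces the number of test cases while still exercising every two-way interaction, which is where many defects occur. For example, with 5 parameters of 3 values each, exhaustive testing needs 3^5 = 243 tests. Pairwise testing only has to cover 90 value pairs (10 parameter pairs × 9 value pairs each). Each test covers 10 pairs at once, so a pairwise suite needs at least 9 tests and, in practice, not many more.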
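A simple greedy algorithm can build a pairwise suite. It lists every value pair that must appear, then repeatedly picks the full combination that covers the most pairs not yet covered. The parameter names and values are hypothetical, and the sketch ignores real-world constraints such as invalid browser/OS combinations. Dedicated tools like Microsoft PICT use more refined algorithms.

```python
from itertools import combinations, product

# Hypothetical configuration space: 5 parameters with 3 values each.
PARAMS = {
    "browser": ["Chrome", "Firefox", "Edge"],
    "os": ["Windows", "macOS", "Linux"],
    "locale": ["en-IN", "hi-IN", "fr-FR"],
    "payment": ["card", "upi", "wallet"],
    "network": ["wifi", "4g", "5g"],
}


def all_pairs(params):
    """Every (param, value, param, value) combination that some test must contain."""
    return {
        (a, va, b, vb)
        for a, b in combinations(list(params), 2)
        for va in params[a]
        for vb in params[b]
    }


def pairs_in(test):
    """The value pairs exercised by one test case (a dict of param -> value)."""
    return {(a, test[a], b, test[b]) for a, b in combinations(list(test), 2)}


def pairwise_suite(params):
    """Greedy sketch: keep adding the full combination that covers the most
    uncovered pairs. Scanning all combinations is fine for small inputs."""
    names = list(params)
    candidates = [dict(zip(names, values)) for values in product(*params.values())]
    uncovered = all_pairs(params)
    suite = []
    while uncovered:
        best = max(candidates, key=lambda t: len(pairs_in(t) & uncovered))
        suite.append(best)
        uncovered -= pairs_in(best)
    return suite


if __name__ == "__main__":
    suite = pairwise_suite(PARAMS)
    exhaustive = len(list(product(*PARAMS.values())))
    print(f"exhaustive: {exhaustive} tests, pairwise: {len(suite)} tests")
    for test in suite:
        print(test)
```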

Q120. What is mutation testing in simple terms?

Think of mutation testing as checking whether your security system actually works by staging small break-ins. You intentionally inject small bugs into the code and see whether your test cases catch them. If the mutated (buggy) code still passes your tests, your tests need improvement.


Section 4: Non-Functional Testing (25 Questions)

Q121. Why is non-functional testing important?

Non-functional aspects determine user satisfaction and product success as much as features. A feature-rich application that’s slow, insecure, or hard to use will fail. Non-functional testing ensures the application is performant, secure, reliable, and user-friendly, creating positive user experiences.

Q122. What is response time in performance testing?

Response time is how long the system takes to respond to a user action, for example the time from clicking Submit until the results appear. Good response times keep users satisfied. Acceptable values depend on the action. As a rough guideline, a simple search might target under 1 second, while a complex report might be allowed 5 to 10 seconds.

Q123. What is throughput in performance testing?

Throughput measures how many transactions or requests the system processes per unit time, like transactions per second. Higher throughput means better performance. For example, a payment system processing 100 transactions per second has higher throughput than one processing 50.

Q124. What is latency?

Latency is the delay between an instruction and the start of the data transfer it triggers, in other words the waiting time. Lower latency means faster responsiveness. Network latency significantly affects web applications. As a common rule of thumb, delays above about 100 milliseconds stop feeling instantaneous to users.

Q125. What tools are used for performance testing?

Popular tools include JMeter (open source, widely used), LoadRunner (enterprise-level), Gatling (developer-friendly), BlazeMeter (cloud-based), k6 (modern scripting), and New Relic (monitoring). Each tool has strengths for different scenarios. JMeter is often the starting point for learning performance testing.

Q126. What is a bottleneck?

A bottleneck is a point in the system where performance is limited, like a narrow section of road causing traffic jams. Common bottlenecks include slow database queries, insufficient server resources, network bandwidth limits, or inefficient code. Performance testing identifies bottlenecks for optimization.

Q127. What is scalability testing?

Scalability testing checks whether the system can grow to handle increased load when resources are added. Vertical scalability means adding more power to existing servers. Horizontal scalability means adding more servers. Cloud applications often favor horizontal scaling because capacity can be added or removed on demand.

Q128. What is spike testing?

Spike testing suddenly increases the load to extreme levels and observes system behavior. It simulates scenarios like ticket sales opening, flash sales, or viral content. Spike testing reveals if the system handles sudden traffic surges or crashes embarrassingly.

Q129. What is soak testing?

Soak testing, also called endurance testing, runs the system under significant load for extended periods (hours or days). It finds memory leaks, resource exhaustion, and degradation over time. Systems might perform well for minutes but fail after hours due to accumulated issues.

Q130. What metrics do you monitor during performance testing?

Key metrics include response time, throughput, error rate, CPU utilization, memory usage, disk I/O, network bandwidth, database connections, server requests per second, and concurrent users. Monitoring these metrics identifies performance issues and bottlenecks.
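Several of these metrics can be derived from raw per-request results. The sketch below uses a hypothetical list of 10 requests collected over a 2-second test window. It reports average response time, the 95th percentile (nearest-rank method, one of several common definitions), throughput, and error rate.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    response_ms: float  # time from request sent to response received
    ok: bool            # False if the request returned an error


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(samples, duration_s):
    times = [s.response_ms for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    return {
        "avg_ms": sum(times) / len(times),
        "p95_ms": percentile(times, 95),
        "throughput_per_s": len(samples) / duration_s,
        "error_rate_pct": 100 * errors / len(samples),
    }


# Hypothetical results from a 2-second test window (one slow, failed request).
SAMPLES = [
    Sample(120, True), Sample(95, True), Sample(110, True), Sample(130, True),
    Sample(105, True), Sample(98, True), Sample(102, True), Sample(115, True),
    Sample(900, False), Sample(100, True),
]

if __name__ == "__main__":
    print(summarize(SAMPLES, duration_s=2.0))
    # {'avg_ms': 187.5, 'p95_ms': 900, 'throughput_per_s': 5.0, 'error_rate_pct': 10.0}
```

The single 900 ms request pulls the average up to 187.5 ms even though the median is 105 ms. This is why testers usually report percentiles alongside the average.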

Q131. What is authentication in security testing?

Authentication verifies user identity – confirming you are who you claim to be. It involves usernames, passwords, biometrics, tokens, or certificates. Security testing checks authentication mechanisms for weaknesses, password policies, session management, and protection against brute force attacks.

Q132. What is authorization in security testing?

Authorization determines what an authenticated user can access and do. It’s about permissions and roles. Security testing verifies users can only access allowed resources, privilege escalation isn’t possible, and role-based access control works correctly.

Q133. What is SQL injection?

SQL injection is an attack where malicious SQL code is inserted into input fields, potentially reading or modifying database data. For example, entering ' OR '1'='1 in a login field might bypass authentication if the application builds its query by concatenating strings. Security testing checks whether applications use parameterized queries and validate inputs so that injected text is never executed as SQL.
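The login bypass can be reproduced safely with Python's built-in `sqlite3` module and an in-memory database. The table and user are hypothetical. Passwords are stored in plain text only to keep the sketch short; real systems store salted hashes. The vulnerable function builds SQL by string formatting, while the safe one uses `?` placeholders so the payload is treated as data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    # Plain-text password for illustration only.
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_vulnerable(conn, username: str, password: str) -> bool:
    # BAD: user input is pasted straight into the SQL text.
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE username = '{username}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn, username: str, password: str) -> bool:
    # GOOD: placeholders make the driver treat input as data, never as SQL.
    query = "SELECT COUNT(*) FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone()[0] > 0


if __name__ == "__main__":
    conn = make_db()
    payload = "' OR '1'='1"
    print("vulnerable login bypassed:", login_vulnerable(conn, "alice", payload))  # True
    print("safe login bypassed:", login_safe(conn, "alice", payload))              # False
```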

Q134. What is cross-site scripting?

Cross-site scripting (XSS) injects malicious scripts into web pages viewed by other users. Attackers might steal cookies, session tokens, or personal information. Security testing ensures that user-supplied content is properly encoded before it is rendered, so injected scripts cannot execute in the browser.
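Here is a minimal sketch of output encoding using Python's standard `html.escape`. The two render functions are hypothetical. A tester's check is that a script payload submitted as a comment comes back as harmless text rather than an executable `<script>` tag. Real applications usually rely on their template engine's automatic escaping. The right encoding also depends on where the data lands (HTML body, attribute, JavaScript, or URL).

```python
import html

PAYLOAD = "<script>alert(document.cookie)</script>"


def render_comment_unsafe(comment: str) -> str:
    # BAD: user input is inserted into HTML as-is.
    return f"<p class='comment'>{comment}</p>"


def render_comment_safe(comment: str) -> str:
    # GOOD: <, >, &, and quotes become HTML entities, so the browser shows them as text.
    return f"<p class='comment'>{html.escape(comment)}</p>"


if __name__ == "__main__":
    print(render_comment_unsafe(PAYLOAD))
    print(render_comment_safe(PAYLOAD))
```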

Q135. What is penetration testing?

Penetration testing, or ethical hacking, has security experts attempting to break into the system like real hackers would. They try various attack vectors to find vulnerabilities. Penetration testing provides realistic security assessment beyond automated scanning.

Q136. What security testing tools do you know?

Common tools include Burp Suite (web application security), OWASP ZAP (vulnerability scanning), Nessus (vulnerability assessment), Metasploit (penetration testing), Wireshark (network analysis), and Nmap (network scanning). Each tool serves different security testing purposes.

Q137. What are usability heuristics?

Usability heuristics are general principles for user interface design, like visibility of system status, consistency, error prevention, and aesthetic minimalism. Usability testing often uses these heuristics as guidelines to evaluate interface quality.

Q138. What is A/B testing?

A/B testing shows different versions of a feature to different users and compares which performs better. For example, testing two different checkout button colors to see which gets more clicks. A/B testing helps make data-driven design decisions.
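A/B tests usually need each user to see the same variant on every visit. One common way to do this is to hash the user ID and pick a bucket from the hash, as sketched below. The names `assign_variant` and the experiment key `checkout-button-color` are hypothetical. Comparing conversion rates is then simple arithmetic, although a real experiment should also check statistical significance before declaring a winner.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "checkout-button-color") -> str:
    """Deterministically map a user to 'A' or 'B' so they always see the same version."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def conversion_rate(clicks: int, visitors: int) -> float:
    return clicks / visitors if visitors else 0.0


if __name__ == "__main__":
    print(assign_variant("user-42"), assign_variant("user-42"))  # same variant both times
    # Hypothetical results: variant A 120 clicks, variant B 150 clicks, 2400 visitors each.
    print(conversion_rate(120, 2400), conversion_rate(150, 2400))
```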

Q139. What is browser compatibility testing?

Browser compatibility testing ensures web applications work correctly across different browsers (Chrome, Firefox, Safari, Edge) and their versions. Browsers interpret code differently, so testing each browser prevents users from having broken experiences based on their browser choice.

Q140. What is cross-browser testing?

Cross-browser testing is the same as browser compatibility testing – validating consistent functionality and appearance across browsers. Automated tools like BrowserStack, Sauce Labs, or Selenium Grid help test multiple browser combinations efficiently.

Q141. What is responsive testing?

Responsive testing verifies web applications adapt properly to different screen sizes and orientations – desktop, tablet, and mobile devices. It checks that layouts adjust, text remains readable, images scale, and functionality works on all screen sizes.

Q142. What is device compatibility testing?

Device compatibility testing checks that applications work across different devices with various screen sizes, resolutions, hardware capabilities, and OS versions. This is especially important for mobile apps, since Android alone runs on thousands of device models.

Q143. What is backward compatibility testing?

Backward compatibility testing ensures new versions work with older versions or legacy systems. For example, ensuring new software opens files created by older versions, or new APIs work with existing client applications without breaking changes.

Q144. What is forward compatibility testing?

Forward compatibility testing checks if current systems work with future versions or standards. It’s less common but important for long-lived applications. For example, ensuring current software can read files that might be saved in future formats.

Q145. What is reliability testing?

Reliability testing checks if the system consistently performs correctly over time under specified conditions. It measures Mean Time Between Failures (MTBF) and Mean Time To Repair (MTTR). Reliable systems have high MTBF and low MTTR.
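MTBF and MTTR are simple averages. Together they give the common steady-state availability formula, availability = MTBF / (MTBF + MTTR). The incident figures below are hypothetical: three failures after 200, 300, and 250 hours of operation, with repairs taking 2, 4, and 3 hours respectively.

```python
def reliability_metrics(uptimes_h, repairs_h):
    """Return (MTBF, MTTR, availability) from per-failure uptime and repair hours.

    uptimes_h[i]: hours of correct operation before failure i
    repairs_h[i]: hours needed to restore service after failure i
    """
    if not repairs_h or len(uptimes_h) != len(repairs_h):
        raise ValueError("need one uptime and one repair time per failure")
    failures = len(repairs_h)
    mtbf = sum(uptimes_h) / failures
    mttr = sum(repairs_h) / failures
    availability = mtbf / (mtbf + mttr)
    return mtbf, mttr, availability


if __name__ == "__main__":
    mtbf, mttr, availability = reliability_metrics([200, 300, 250], [2, 4, 3])
    print(f"MTBF={mtbf} h, MTTR={mttr} h, availability={availability:.2%}")
```

With these numbers, MTBF is 250 hours, MTTR is 3 hours, and availability is 250 / 253, roughly 98.8%.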

Section 5: Agile and Scrum Methodology (25 Questions)

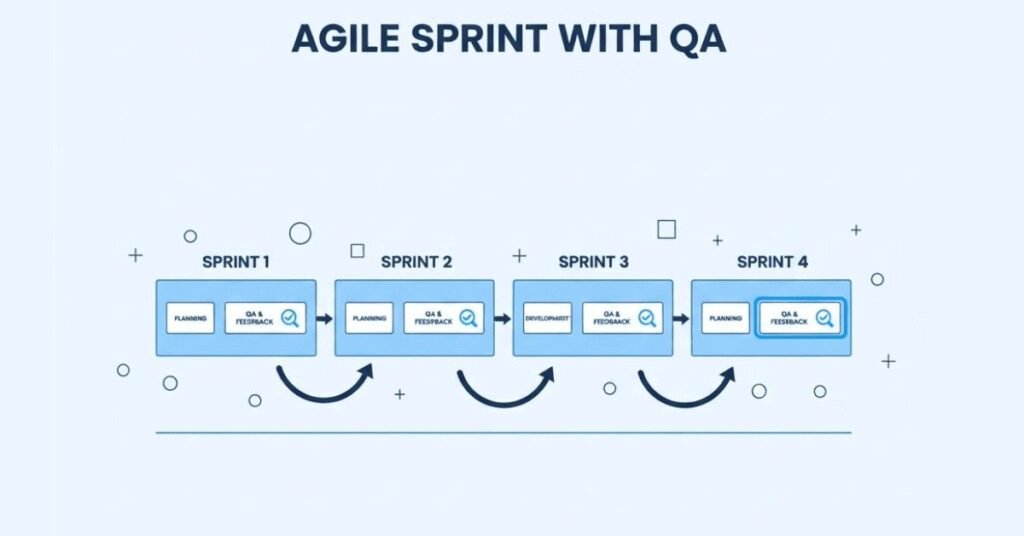

Q146. What is Agile testing?

Agile testing is testing integrated throughout development in short iterations rather than as a final phase. Testers work alongside developers, testing features as they’re built. Agile testing emphasizes collaboration, quick feedback, and adapting to changes. It’s continuous rather than a one-time event.

Q147. What are the principles of Agile testing?

Key principles include continuous testing throughout development, whole team approach where everyone is responsible for quality, quick feedback to developers, test automation to enable speed, user story based testing, adapting to changes, and preventing defects rather than just finding them.

Q148. What is the role of a tester in Agile?

Agile testers participate in planning, clarify requirements through discussions, write acceptance criteria, create and execute tests continuously, automate tests, collaborate closely with developers, provide quick feedback, and ensure quality throughout the sprint. They’re embedded in development teams, not separate.

Q149. What is a user story?

A user story describes a feature from the user’s perspective, typically following the format: “As a [user role], I want [feature] so that [benefit].” For example, “As a customer, I want to save items to a wishlist so that I can purchase them later.”

Q150. What are acceptance criteria?

Acceptance criteria define the conditions that must be met for a user story to be considered complete and acceptable. They’re testable conditions written in clear, specific language. For example, for a login story: “User can log in with valid credentials,” “Error message appears for invalid credentials,” “User is redirected to dashboard after successful login.”

Q151. What is Scrum?

Scrum is an Agile framework that organizes work into time-boxed iterations called sprints, which last one month or less (two weeks is common). It defines specific roles (Product Owner, Scrum Master, and Development Team, called Developers in the 2020 Scrum Guide), events (Sprint Planning, Daily Scrum or Daily Standup, Sprint Review, Sprint Retrospective), and artifacts (Product Backlog, Sprint Backlog, Increment).

Q152. What is a sprint?

A sprint is a fixed time period (usually 2-4 weeks) during which the team completes a set of user stories from the backlog. At the end of each sprint, the team delivers a potentially shippable product increment. Sprints create rhythm and enable regular feedback and adaptation.

Q153. What is a product backlog?

The product backlog is a prioritized list of features, enhancements, and fixes for the product. The Product Owner maintains it, continuously refining and reprioritizing based on business value. Items at the top are detailed and ready for development; items further down are less refined.

Q154. What is a sprint backlog?

The sprint backlog is the subset of product backlog items selected for a specific sprint, plus the plan for delivering them. The development team owns it and updates it daily as work progresses. It represents the team’s commitment for the sprint.

Q155. What is an epic?

An epic is a large user story that’s too big to complete in one sprint. Epics are broken down into smaller user stories. For example, “Online Shopping” might be an epic containing stories like “Add to Cart,” “Checkout Process,” “Payment Integration,” and “Order Tracking.”

Q156. What is sprint planning?

Sprint planning is a meeting at the start of each sprint where the team selects user stories from the product backlog, defines sprint goals, breaks stories into tasks, and estimates effort. The team commits to what they’ll deliver by sprint end.

Q157. What is a daily standup?

A daily standup is a brief (15-minute) daily meeting where team members share what they did yesterday, what they’ll do today, and any blockers. It keeps everyone synchronized, identifies obstacles quickly, and promotes accountability. Testers share testing progress and impediments.

Q158. What is sprint review?

Sprint review happens at sprint end, where the team demonstrates completed work to stakeholders, gathers feedback, and discusses what to build next. It’s an opportunity for stakeholders to see progress and influence direction. Successful demos require thorough testing.

Q159. What is sprint retrospective?

Sprint retrospective is a meeting after sprint review where the team reflects on the sprint process – what went well, what didn’t, and how to improve. It focuses on process improvement rather than product. Team members openly discuss issues and commit to improvements.

Q160. What is Definition of Done?

Definition of Done is a shared understanding of what “complete” means for a user story. It typically includes development complete, code reviewed, unit tests passed, functional tests passed, documentation updated, and acceptance criteria met. Items not meeting the Definition of Done aren’t considered complete.

Q161. What is Definition of Ready?

Definition of Ready describes when a user story is ready for development. Criteria might include acceptance criteria defined, dependencies identified, estimated by the team, and small enough to complete in one sprint. Ready stories prevent confusion and wasted effort during sprints.

Q162. What are story points?

Story points are relative units for estimating user story size and complexity, considering effort, complexity, and uncertainty. Teams might use Fibonacci numbers (1, 2, 3, 5, 8, 13) where larger numbers indicate larger stories. Story points enable velocity tracking and capacity planning.

Q163. What is velocity in Agile?

Velocity is the amount of work a team completes in a sprint, measured in story points. Teams track velocity over sprints to predict future capacity. For example, if a team averages 30 story points per sprint, they can plan around that capacity.

Q164. What is a burndown chart?

A burndown chart shows remaining work versus time during a sprint. It visualizes whether the team is on track to complete their sprint commitment. The ideal line shows expected progress; the actual line shows real progress. Gaps indicate risks to sprint goals.

Q165. What is test-driven development?

Test-Driven Development (TDD) is a practice in which tests are written before the code. The cycle, often called red-green-refactor, is: write a failing test, write the minimal code needed to pass it, then refactor while keeping all tests passing. TDD ensures code is testable and has good test coverage from the start.
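The following sketch illustrates the TDD cycle with a hypothetical `is_leap_year` function. The tests are written first and fail while the function does not exist. Then just enough code is added to make them pass. Python looks up `is_leap_year` when the test runs, so defining the function after the test is fine.

```python
# Step 1 (red): write the tests first. Before is_leap_year exists, running them fails.
def test_is_leap_year():
    assert is_leap_year(2024) is True    # divisible by 4
    assert is_leap_year(2023) is False
    assert is_leap_year(1900) is False   # century not divisible by 400
    assert is_leap_year(2000) is True    # divisible by 400


# Step 2 (green): write just enough code to make the tests pass.
# Step 3 (refactor): tidy the code (here, a single boolean expression) and rerun the tests.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


if __name__ == "__main__":
    test_is_leap_year()
    print("tests pass")
```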

Q166. What is behavior-driven development?

Behavior-Driven Development (BDD) extends TDD by writing tests in natural language that describe system behavior from a business perspective. BDD uses frameworks like Cucumber with Gherkin syntax (Given-When-Then). It improves collaboration between technical and non-technical team members.

Q167. What is continuous integration?

Continuous Integration (CI) automatically builds and tests code whenever developers commit changes. CI catches integration issues quickly, maintains code quality, and provides fast feedback. Tools like Jenkins, GitLab CI, or GitHub Actions enable CI workflows.

Q168. What is continuous testing?

Continuous testing integrates automated tests into CI/CD pipelines, running tests automatically with each code change. It provides immediate feedback on code quality and catches regressions early. Continuous testing enables fast, confident releases.

Q169. What is pair testing?

Pair testing has two testers working together on the same feature – one executing tests while the other observes, suggests ideas, and notes issues. It combines strengths, catches more bugs, shares knowledge, and reduces blind spots. Similar to pair programming but for testing.

Q170. What is exploratory testing in Agile?

In Agile, exploratory testing complements automated tests by finding unexpected issues through human intuition and creativity. Testers explore new features each sprint, thinking like users, trying edge cases, and evaluating user experience. It balances structured and flexible testing approaches.

Section 6: Automation Testing Basics (30 Questions)

Q171. What is automation testing?

Automation testing uses software tools and scripts to execute test cases automatically without human intervention. Scripts simulate user actions, compare actual and expected results, and report outcomes. Automation makes testing faster, more reliable, and repeatable, especially for regression and large test suites.

Q172. When should you automate tests?

Automate tests for regression testing, repetitive tests, tests needing multiple data sets, tests run on multiple environments or configurations, performance and load testing, and stable features that won’t change frequently. Don’t automate tests that require human judgment, change frequently, or aren’t worth the investment.

Q173. When should you NOT automate tests?

Don’t automate tests for frequently changing features, exploratory testing, usability testing, ad-hoc testing, one-time tests, or tests where automation cost exceeds benefits. New features under active development often change too much to justify automation. Manual testing is more cost-effective in these cases.

Q174. What are advantages of automation testing?

Automation is faster than manual execution, enables regression testing efficiently, allows running tests unattended (overnight), provides consistent results without human error, supports performance and load testing, runs tests on multiple configurations simultaneously, and frees testers for exploratory testing.

Q175. What are disadvantages of automation testing?

Automation requires initial investment in tools, scripts, and training. It needs maintenance as applications change. Not all testing can be automated – usability and exploratory testing require human insight. False positives waste time. Automation cannot replace human testers, only augment them.

Q176. What is an automation framework?

An automation framework is a structured set of guidelines, libraries, and practices for creating and managing automation scripts efficiently. Frameworks provide reusable components, consistent structure, reporting capabilities, and best practices. They make automation scalable and maintainable.

Q177. What types of automation frameworks exist?

Common types include Linear/Record-Playback (simple recorded scripts), Modular (organized into reusable modules), Data-Driven (separates test logic from data), Keyword-Driven (uses keywords representing actions), Hybrid (combines approaches), and BDD frameworks (uses natural language specifications).

Q178. What is a data-driven framework?

Data-driven frameworks separate test data from test scripts. The same script runs with different data sets from external sources like Excel, CSV, or databases. For example, one login script tests multiple username-password combinations from a data file. This approach maximizes script reusability.
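Here is a minimal data-driven sketch. One test routine reads rows from CSV data and runs the same hypothetical `login` check for each row. An in-memory string stands in for the external file so the example is self-contained. In a real framework, the rows would come from a CSV file, Excel sheet, or database.

```python
import csv
import io

# Stand-in for an external data file (hypothetical test data).
LOGIN_DATA = """username,password,expected
alice,correct-horse,success
alice,wrong-pass,failure
,correct-horse,failure
"""

VALID_USERS = {"alice": "correct-horse"}  # hypothetical user store


def login(username: str, password: str) -> str:
    """Hypothetical system under test."""
    return "success" if VALID_USERS.get(username) == password else "failure"


def run_data_driven_login_tests(data: str) -> list:
    """Run the same login check once per data row; return (user, password, expected, passed)."""
    results = []
    for row in csv.DictReader(io.StringIO(data)):
        actual = login(row["username"], row["password"])
        results.append((row["username"], row["password"], row["expected"], actual == row["expected"]))
    return results


if __name__ == "__main__":
    for result in run_data_driven_login_tests(LOGIN_DATA):
        print(result)
```

Adding a new scenario means adding a row to the data, with no change to the test code.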

Q179. What is a keyword-driven framework?

Keyword-driven frameworks use keywords representing actions like “click,” “enterText,” or “verifyTitle.” Test cases are written using these keywords without programming. Keywords map to reusable code functions. This approach makes tests readable by non-programmers and promotes reusability.
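A dictionary can map each keyword to its code, as sketched below. The keyword names follow the examples above. The `FakeApp` class and its behavior are hypothetical stand-ins for a real application driven by a browser automation tool.

```python
class FakeApp:
    """Hypothetical in-memory application so the sketch runs without a browser."""

    def __init__(self) -> None:
        self.fields = {}
        self.title = "Login"

    def submit(self) -> None:
        if self.fields.get("username") and self.fields.get("password"):
            self.title = "Dashboard"


def enter_text(app, field, value):
    app.fields[field] = value


def click(app, element):
    if element == "login_button":
        app.submit()


def verify_title(app, expected):
    assert app.title == expected, f"title was {app.title!r}, expected {expected!r}"


# Each keyword maps to a reusable function.
KEYWORDS = {"enterText": enter_text, "click": click, "verifyTitle": verify_title}

# The test case itself: rows a non-programmer could write in a spreadsheet.
LOGIN_TEST = [
    ("enterText", "username", "alice"),
    ("enterText", "password", "secret"),
    ("click", "login_button"),
    ("verifyTitle", "Dashboard"),
]


def run_keyword_test(steps):
    app = FakeApp()
    for keyword, *args in steps:
        KEYWORDS[keyword](app, *args)
    return app


if __name__ == "__main__":
    run_keyword_test(LOGIN_TEST)
    print("keyword test passed")
```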

Q180. What is Page Object Model?

Page Object Model (POM) is a design pattern where each web page is represented as a class containing the page’s elements and methods. It separates test logic from page structure, making scripts maintainable. When a page changes, you update only its page object, not every test that uses that page.
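Here is a minimal Page Object sketch for a login page. `FakeDriver` and `FakeElement` are hypothetical stand-ins so the example runs without a browser. Their method names (`find_element`, `send_keys`, `click`, `title`) mirror Selenium's WebDriver API, but the locator tuples and page behavior are assumptions.

```python
class FakeElement:
    """Hypothetical element: records typed text and simulates the login button."""

    def __init__(self, driver, element_id):
        self.driver, self.element_id = driver, element_id

    def send_keys(self, text):
        self.driver.values[self.element_id] = text

    def click(self):
        if self.element_id == "login" and self.driver.values.get("password") == "secret":
            self.driver.title = "Dashboard"


class FakeDriver:
    """Stand-in for a browser driver so the sketch runs without Selenium."""

    def __init__(self):
        self.values, self.title = {}, "Login"

    def find_element(self, by, value):
        return FakeElement(self, value)


class LoginPage:
    """Page object: the only place that knows the login page's locators."""

    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    LOGIN_BUTTON = ("id", "login")

    def __init__(self, driver):
        self.driver = driver

    def login_as(self, username, password):
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.LOGIN_BUTTON).click()
        return self.driver.title


def test_valid_login():
    # The test talks to the page object, never to raw locators.
    assert LoginPage(FakeDriver()).login_as("alice", "secret") == "Dashboard"


if __name__ == "__main__":
    test_valid_login()
    print("page object test passed")
```

If the login button's ID changes, only `LoginPage.LOGIN_BUTTON` needs updating, and every test that calls `login_as` keeps working.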

Q181. What automation tools do you know?

Popular tools include Selenium (web applications), Appium (mobile applications), JUnit/TestNG (test frameworks), Cucumber (BDD), JMeter (performance testing), Postman/Rest Assured (API testing), and Jenkins (CI/CD). Each tool serves specific automation needs and often tools are combined.

Q182. What is Selenium?

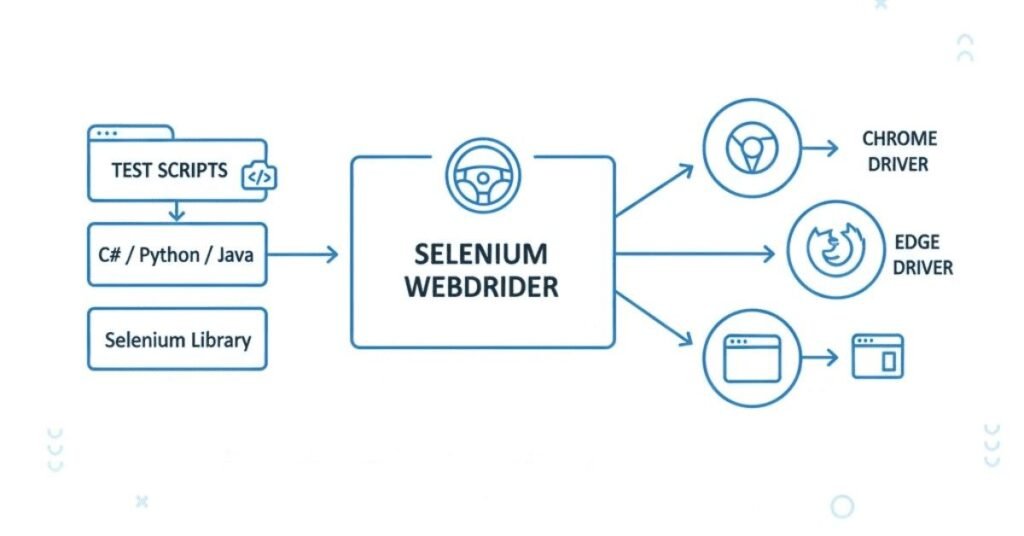

Selenium is an open-source automation tool for web applications. It supports multiple browsers, programming languages (Java, Python, C#, JavaScript), and operating systems. Selenium WebDriver controls browsers programmatically, simulating user interactions. It’s one of the most widely used web automation tools thanks to its flexibility and community support.

Q183. What are components of Selenium?

The Selenium suite includes Selenium WebDriver (for creating browser automation scripts), Selenium IDE (recording tool), Selenium Grid (parallel execution across machines), and previously Selenium RC (now deprecated). WebDriver is the core component used in most automation projects.

Q184. What is Selenium WebDriver?

Selenium WebDriver is an API that allows scripts to control web browsers programmatically. It provides methods to find elements, perform actions (click, type, select), navigate pages, and extract information. WebDriver talks to each browser through a browser-specific driver (such as ChromeDriver or GeckoDriver) using the W3C WebDriver protocol. This makes it faster and more reliable than the JavaScript-injection approach used by Selenium RC.

Q185. What programming languages does Selenium support?

Selenium WebDriver supports Java, Python, C#, JavaScript, and Ruby through official language bindings, and Kotlin projects commonly use the Java bindings. Java is the most common choice, while Python is popular for its simplicity. The choice depends on team skills, existing infrastructure, and project requirements. The bindings expose largely the same capabilities.

Q186. What is TestNG?

TestNG is a testing framework for Java that provides features for organizing and running tests, including annotations, test configuration, test groups, parallel execution, data providers, reporting, and assertions. These built-in features make it a common choice over JUnit for Selenium web automation projects.

Q187. What is JUnit?

JUnit is another popular Java testing framework, simpler than TestNG. It provides annotations for test methods, setup/teardown, assertions, and test execution. JUnit is widely used for unit testing but can also support integration and automation testing with Selenium.

Q188. What is Cucumber?

Cucumber is a BDD tool that lets you write test scenarios in plain English using Gherkin syntax (Given-When-Then). It bridges communication between technical and non-technical team members. Feature files describe behavior, step definitions implement the logic, and test runners execute scenarios.

Q189. What is Gherkin language?

Gherkin is a simple language for writing test scenarios in a structured format using keywords like Feature, Scenario, Given, When, Then, And, But. It’s readable by everyone regardless of technical background. Example: “Given user is on login page, When user enters credentials, Then dashboard is displayed.”
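To show how Cucumber-style tools connect Gherkin steps to code, here is a simplified Python sketch. Step definitions are registered with regular expressions, and each Given/When/Then line is matched to one of them. The `step` decorator, `run_scenario`, and the simulated login logic are hypothetical. This is not Cucumber's actual API:

```python
import re

# Hypothetical step-definition registry, mimicking how Cucumber binds
# Gherkin steps to code (not Cucumber's real API).
STEPS = []
context = {}


def step(pattern):
    """Register a function as the implementation of steps matching `pattern`."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"user is on (\w+) page")
def on_page(name):
    context["page"] = name


@step(r'user enters username "([^"]*)" and password "([^"]*)"')
def enter_credentials(user, password):
    # Simulated application logic (assumption for illustration).
    context["logged_in"] = context.get("page") == "login" and password == "secret"


@step(r"dashboard is displayed")
def dashboard_displayed():
    assert context.get("logged_in"), "dashboard not displayed"


SCENARIO = """
Scenario: Successful login
  Given user is on login page
  When user enters username "alice" and password "secret"
  Then dashboard is displayed
"""


def run_scenario(text):
    # Skip the "Scenario:" line, then strip the Given/When/Then keyword from each step.
    for line in text.strip().splitlines()[1:]:
        keyword, _, phrase = line.strip().partition(" ")
        for pattern, func in STEPS:
            match = pattern.fullmatch(phrase)
            if match:
                func(*match.groups())  # captured groups become arguments
                break
        else:
            raise LookupError(f"undefined step: {keyword} {phrase}")


run_scenario(SCENARIO)
print("scenario passed")
```

Like Cucumber, the runner reports an error when a step has no matching step definition.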

Q190. What are locators in Selenium?

Locators identify elements on web pages so automation scripts can interact with them. Selenium provides locators by ID, name, class name, tag name, link text, partial link text, CSS selector, and XPath. Choosing the right locator makes scripts reliable and maintainable.

Q191. What is XPath?

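XPath is a query language for selecting nodes in HTML/XML documents by navigating the document tree with path expressions. In Selenium, XPath is typically used when simpler locators such as ID or name are not available. An absolute XPath starts from the root node (for example /html/body/form/input), while a relative XPath starts with // and can match the element anywhere in the document (for example //input[@name='email']). Relative XPath is preferred for maintainability because it does not break when unrelated parts of the page structure change.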
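The difference between position-based and attribute-based paths can be tried with Python's standard `xml.etree.ElementTree`. Note that this module supports only a limited XPath subset, and browsers evaluate full XPath 1.0 in Selenium. The markup is a simplified, well-formed page invented for illustration:

```python
import xml.etree.ElementTree as ET

# A simplified, well-formed page; real HTML often needs a more lenient parser.
HTML = """
<html>
  <body>
    <form id="login">
      <input name="email" type="text"/>
      <input name="password" type="password"/>
      <button type="submit">Sign in</button>
    </form>
  </body>
</html>
"""

root = ET.fromstring(HTML)  # root is the <html> element

# Position-based path from the root, like the absolute XPath
# /html/body/form/input[2]; it breaks if another input is inserted above.
by_position = root.find("body/form/input[2]")

# Relative path, like //input[@name='password']: searches anywhere in the
# tree and relies on a stable attribute instead of the element's position.
by_attribute = root.find(".//input[@name='password']")

print(by_position.get("name"), by_attribute.get("name"))
```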

Q192. What is CSS selector?

CSS selectors use CSS syntax to locate elements, offering an alternative to XPath. They are generally more readable and are often considered faster than XPath, but unlike XPath they cannot match elements by their text content. CSS selectors use syntax like #id for IDs, .classname for classes, tagname for tags, and combinations such as form#login input[name='email'] for complex selections.

Q193. What are waits in Selenium?

Waits handle timing issues when pages load or elements appear. Implicit wait applies globally, waiting up to a specified time whenever an element is looked up. Explicit wait waits for a specific condition, such as element visibility. Fluent wait polls for a condition at a custom interval and can ignore specified exceptions while polling. Proper waits make tests stable and reliable.

Q194. What is synchronization in automation testing?

Synchronization ensures automation scripts wait for the application to be ready before proceeding. Web applications load asynchronously, elements appear dynamically, and processing takes time. Without synchronization, scripts fail because they act before the application is ready. Waits provide synchronization.

Q195. What is POM in automation?

POM (Page Object Model) is a design pattern organizing automation code by page. Each page is a class with elements as variables and actions as methods. Tests use page objects instead of directly interacting with elements. This makes code reusable, maintainable, and readable.

Q196. What is Jenkins?

Jenkins is an open-source automation server for continuous integration and continuous delivery. It automatically builds, tests, and deploys applications. Jenkins integrates with version control, runs automation tests, generates reports, and notifies teams of results. It is widely used for continuous testing in DevOps pipelines.

Q197. What is continuous integration?

Continuous Integration (CI) automatically builds and tests code when developers commit changes. CI catches integration issues early, maintains code quality, and provides fast feedback. Jenkins, GitLab CI, CircleCI, and GitHub Actions are popular CI tools. CI is fundamental to modern development practices.

Q198. What is continuous delivery?

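Continuous Delivery (CD) extends CI by automatically deploying every tested build to staging or pre-production environments, so the code is always in a releasable state. The final release to production usually requires a manual approval. Continuous Deployment goes one step further and releases every passing change to production automatically. Combined with CI (CI/CD), these practices enable rapid, reliable releases. Automated tests in the pipeline ensure that only quality code is deployed, which reduces deployment risk and makes frequent releases practical.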

Q199. What is a test script?

A test script is automated code that executes test cases. It contains commands to launch applications, perform actions, verify results, and report outcomes. Test scripts are written in programming languages using automation tools. Well-written scripts are modular, reusable, and maintainable.

Q200. How do you handle dynamic elements in automation?

Dynamic elements change attributes like ID or class names each time the page loads, making them challenging to locate. Handle them using relative XPath based on static attributes, CSS selectors with partial matches, waiting for element visibility, using contains or starts-with functions in XPath, locating by nearby stable elements, or using dynamic waits. Avoid absolute XPath or IDs that change frequently.
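Here is a small Python sketch of the prefix-matching idea, using two simulated page loads in which a button's ID carries a generated suffix. The markup and the `submit_` prefix are assumptions. Because `xml.etree.ElementTree` does not implement XPath's `starts-with()`, the prefix check is done in Python. In Selenium you would express it directly as `//button[starts-with(@id, 'submit_')]`:

```python
import xml.etree.ElementTree as ET

# Two loads of the same (hypothetical) page: the button id changes each time,
# but its "submit_" prefix and its text stay stable.
LOADS = [
    '<div><label>Email</label><button id="submit_8f3a" class="btn primary">Save</button></div>',
    '<div><label>Email</label><button id="submit_19c2" class="btn primary">Save</button></div>',
]


def find_save_button(markup):
    """Locate the button by the stable part of its id, ignoring the random suffix."""
    root = ET.fromstring(markup)
    for button in root.iter("button"):
        if button.get("id", "").startswith("submit_"):
            return button
    return None


for markup in LOADS:
    print(find_save_button(markup).get("id"))
```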

Q201. What is implicit wait?

Implicit wait tells WebDriver to wait for a specified time when searching for elements before throwing an exception. It applies globally to all elements in the script. For example, setting implicit wait to 10 seconds means WebDriver waits up to 10 seconds for any element to appear. Set it once at the beginning of your script.

Q202. What is explicit wait?

Explicit wait waits for a specific condition on a particular element before proceeding. It’s more flexible than implicit wait because you define exactly what to wait for – element visibility, clickability, presence, text to appear, etc. Use explicit waits when you know certain elements take longer to load.

Q203. What is fluent wait?

Fluent wait is similar to explicit wait but with additional capabilities. You can define polling frequency (how often to check the condition) and which exceptions to ignore while waiting. For example, check every 2 seconds for element visibility while ignoring NoSuchElementException, with a maximum timeout of 30 seconds.
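Selenium already provides this behaviour: Java's `FluentWait` has `withTimeout`, `pollingEvery`, and `ignoring`, and Python's `WebDriverWait(driver, timeout, poll_frequency, ignored_exceptions)` does the same. To show the mechanism, here is a standalone Python sketch of the polling loop. The local `NoSuchElementException` class and the simulated `element_visible` condition are stand-ins so the code runs without a browser:

```python
import time


class NoSuchElementException(Exception):
    """Local stand-in for Selenium's exception of the same name."""


def fluent_wait(condition, timeout=30.0, poll_interval=2.0,
                ignored_exceptions=(NoSuchElementException,)):
    """Call `condition` every `poll_interval` seconds until it returns a truthy
    value or `timeout` seconds pass. Ignored exceptions count as "not ready yet"."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            result = condition()
            if result:
                return result
        except ignored_exceptions:
            pass
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


# Simulated element that only "appears" on the third poll.
attempts = {"count": 0}


def element_visible():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise NoSuchElementException("not in the DOM yet")
    return "<button>"


# Short timings so the demo finishes quickly (the text's example uses 2 s / 30 s).
print(fluent_wait(element_visible, timeout=1.0, poll_interval=0.1))
```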

Q204. What is the difference between implicit and explicit wait?

Implicit wait applies globally to every element lookup throughout the script, while explicit wait applies to specific elements and conditions. Implicit wait only waits for element presence, while explicit wait can wait for conditions like visibility, clickability, or text. Explicit wait is more flexible and recommended for modern automation. Avoid mixing the two, because the Selenium documentation warns that combining implicit and explicit waits can cause unpredictable wait times.

Q205. What are assertions in automation testing?

Assertions verify that actual results match expected results in automation scripts. If an assertion fails, the test fails. Common assertions include assertEquals (checks that two values are equal), assertTrue (checks that a condition is true), assertFalse (checks that a condition is false), and assertNotNull (checks that a value is not null). Assertions validate test outcomes automatically.
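The same four assertion types exist in Python's standard `unittest` module, where Java's `assertNotNull` corresponds to `assertIsNotNone`. In this sketch, `cart_total` is a hypothetical function under test. The suite is run programmatically so the script does not exit on completion:

```python
import io
import unittest


def cart_total(prices):
    """Hypothetical function under test."""
    return round(sum(prices), 2)


class CartAssertions(unittest.TestCase):
    def test_total_and_flags(self):
        total = cart_total([19.99, 5.01])
        self.assertEqual(total, 25.0)   # two values are equal
        self.assertTrue(total > 0)      # condition is true
        self.assertFalse(total > 100)   # condition is false
        self.assertIsNotNone(total)     # value is not None (Java: assertNotNull)

    def test_wrong_expectation_fails(self):
        # A failing assertion raises AssertionError, which marks a test as failed.
        with self.assertRaises(AssertionError):
            self.assertEqual(cart_total([1, 2]), 4)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartAssertions)
result = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
print("all passed:", result.wasSuccessful())
```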

Q206. What is the difference between hard assertion and soft assertion?

A hard assertion stops test execution immediately when it fails, so subsequent steps don't execute. A soft assertion lets the test continue even when an assertion fails, collecting all failures and reporting them at the end (in TestNG, SoftAssert reports them when assertAll() is called). Use hard assertions for critical validations whose failure makes further testing meaningless. Use soft assertions to check several independent validations in one test.
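The following Python sketch illustrates the soft-assertion idea: failures are collected and raised together at the end. The `SoftAssert` class here is a simplified illustration modelled on the concept behind TestNG's `SoftAssert`, not that class's API. The `profile` data is invented:

```python
class SoftAssert:
    """Collects failures instead of stopping at the first one
    (simplified illustration, not TestNG's API)."""

    def __init__(self):
        self.failures = []

    def assert_equal(self, actual, expected, message=""):
        if actual != expected:
            self.failures.append(f"{message}: expected {expected!r}, got {actual!r}")

    def assert_all(self):
        # Fail once, at the end, reporting every collected failure.
        if self.failures:
            raise AssertionError("\n".join(self.failures))


profile = {"name": "Alice", "email": "alice@example.com", "city": "Paris"}

soft = SoftAssert()
soft.assert_equal(profile["name"], "Alice", "name")
soft.assert_equal(profile["email"], "alice@test.com", "email")  # fails, test continues
soft.assert_equal(profile["city"], "London", "city")            # fails, test continues
print(len(soft.failures), "failures collected")

try:
    soft.assert_all()
except AssertionError as error:
    print(error)
```

With a hard assertion, the email check would have stopped the test, and the city mismatch would not have been reported in the same run.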

Q207. What is parallel testing?

Parallel testing runs multiple test cases simultaneously on different machines, browsers, or environments, which dramatically reduces execution time. For example, if 100 test cases take 5 hours to run sequentially, spreading them evenly across 10 machines cuts the time to about 30 minutes, provided the tests are independent of each other. Tools like Selenium Grid and cloud platforms enable parallel testing.
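The arithmetic in that example is 300 minutes / 10 machines = 30 minutes, assuming independent tests split evenly. The Python sketch below shows the same effect on a small scale. Threads from the standard `concurrent.futures` module stand in for machines, and a hypothetical test just sleeps for 0.1 seconds. Real parallel browser runs would use Selenium Grid or a cloud provider instead:

```python
import time
from concurrent.futures import ThreadPoolExecutor


def make_test(index):
    """Hypothetical independent test that takes about 0.1 s (e.g. waiting on I/O)."""
    def test():
        time.sleep(0.1)
        return index, "PASS"
    return test


TESTS = [make_test(i) for i in range(20)]


def run_sequential(tests):
    return [t() for t in tests]


def run_parallel(tests, workers):
    # Each worker thread plays the role of one machine or browser session.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda t: t(), tests))


start = time.perf_counter()
sequential = run_sequential(TESTS)
sequential_time = time.perf_counter() - start

start = time.perf_counter()
parallel = run_parallel(TESTS, workers=10)
parallel_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.2f} s, parallel: {parallel_time:.2f} s")
```

The speed-up holds only because the tests share no state. Tests that depend on each other's data must be made independent before they can run in parallel.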

Q208. What is Selenium Grid?

Selenium Grid runs tests on multiple machines and browsers simultaneously. It has a hub that distributes tests to registered nodes (machines with different browser-OS combinations). Grid enables parallel execution, cross-browser testing, and reduces test execution time. It’s essential for large test suites needing quick feedback.

Q209. What is cross-browser testing?

Cross-browser testing verifies applications work correctly across different browsers like Chrome, Firefox, Safari, Edge, and their versions. Browsers render content differently, so what works in Chrome might break in Safari. Automation scripts can run on multiple browsers using WebDriver, ensuring consistent user experience everywhere.

Q210. What is headless browser testing?

Headless browser testing runs browsers without a graphical user interface, making execution faster and suitable for CI/CD pipelines. Headless browsers consume fewer resources and run in the background. Chrome and Firefox both support headless mode, which makes it well suited to automated testing on servers without displays.

Q211. What is screenshot capturing in Selenium?

Screenshot capturing takes images of the browser when tests run, useful for debugging failures or documenting results. Selenium’s TakesScreenshot interface captures screenshots programmatically. Best practice is capturing screenshots on test failures automatically. Screenshots help understand what went wrong without re-running tests.

Q212. How do you handle pop-ups in Selenium?

JavaScript alerts are handled using the Alert interface, with methods like accept, dismiss, getText, and sendKeys. Pop-ups that open as new browser windows or tabs are handled by switching between window handles. For authentication pop-ups, pass credentials in the URL or use the Robot class. Each pop-up type requires a different handling approach.

Q213. How do you handle frames in Selenium?

Frames are HTML documents embedded within other HTML documents. Selenium cannot directly access elements inside frames, so you must switch to the frame first using driver.switchTo().frame(). You can switch using the frame name, ID, index, or WebElement. After working with frame elements, switch back with driver.switchTo().defaultContent() before accessing main page elements.

Q214. How do you handle multiple windows?

Each browser window has a unique handle. Get all window handles, iterate through them, switch to the desired window using driver.switchTo().window(handle), perform actions, and switch back. Store the parent window handle before opening new windows to easily switch back. Window handling is common in applications opening links in new tabs.

Q215. What is data-driven testing?

Data-driven testing separates test logic from test data, allowing the same test to run with multiple data sets. Test data comes from external sources like Excel, CSV, databases, or JSON files. For example, one login test script runs with 50 different username-password combinations from Excel, effectively creating 50 test cases.

Q216. How do you read data from Excel in automation?

Apache POI library reads Excel files in Java automation. It provides classes to open workbooks, access sheets, read cells, and extract data. Create a utility method to read Excel data, then call it from test scripts. Excel is popular for test data because non-technical people can easily update data without touching code.

Q217. What is a test execution report?

Test execution reports show test results including total tests run, passed, failed, skipped, execution time, and failure details. Good reports help stakeholders understand quality quickly. TestNG generates HTML reports automatically. For better reports, integrate tools like Extent Reports, Allure, or custom HTML reports with screenshots.

Q218. What is extent report?

Extent Reports is a popular reporting library for test automation that creates detailed, interactive HTML reports with test execution details, screenshots, logs, and system information. It integrates easily with TestNG or JUnit. Reports include dashboard views and trend analysis and can be customized with company branding. Many teams choose it because stakeholders find its reports easy to read.

Q219. What is Maven in automation testing?

Maven is a build automation tool that manages project dependencies, compiles code, runs tests, and generates reports. The pom.xml file defines project configuration and dependencies. Maven automatically downloads required libraries, making project setup easy. It integrates with CI/CD tools, standardizes project structure, and simplifies dependency management across teams.

Q220. What is the purpose of pom.xml?

The pom.xml (Project Object Model) file is Maven’s configuration file. It contains project information, dependencies, build configurations, and plugins. When you add the Selenium dependency to pom.xml, Maven automatically downloads the Selenium JARs and all their transitive dependencies. Updating versions is simple: change the version number in pom.xml, and Maven handles the rest.

Section 7: Selenium and WebDriver (35 Questions)

Q221. What browsers does Selenium WebDriver support?