Machine Learning Interview Preparation Guide

1. 300+ Technical Interview Questions & Answers

- Python Programming Fundamentals: 30 Questions

- Mathematics for Machine Learning: 30 Questions

- Data Libraries – NumPy: 35 Questions

- Data Libraries – Pandas: 25 Questions

- Data Visualization with Matplotlib: 15 Questions

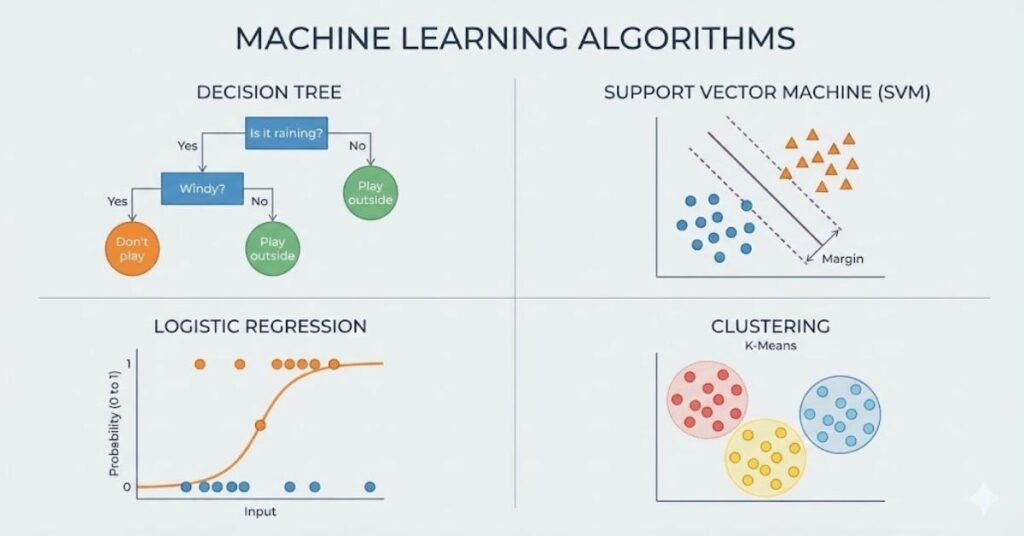

- Machine Learning Fundamentals: 20 Questions

- Classification Models: 20 Questions

- Regression Models: 25 Questions

- Support Vector Machines: 30 Questions

- Decision Trees: 15 Questions

- Random Forests and Ensemble Methods: 40 Questions

- Unsupervised Learning Techniques: 15 Questions

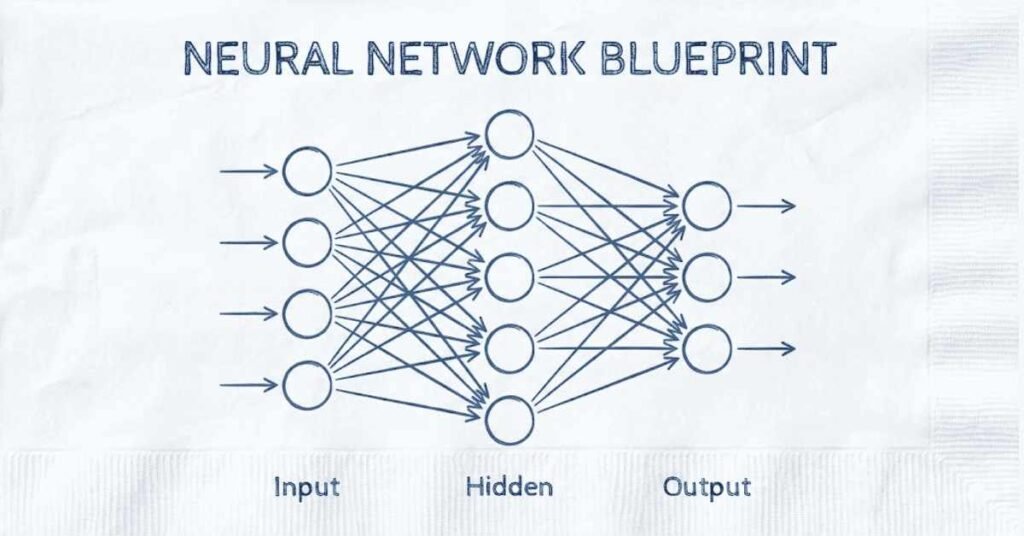

- Neural Networks with Keras: 25 Questions

- Training Deep Neural Networks: 25 Questions

- TensorFlow Data Processing: 25 Questions

- Generative AI and LLMs: 25 Questions

⚡ Become a Job-Ready ML & Deep Learning Engineer — Join the Course Today!

Chapter 1: Python Programming Fundamentals

1.1 Basic Python Syntax and Data Types

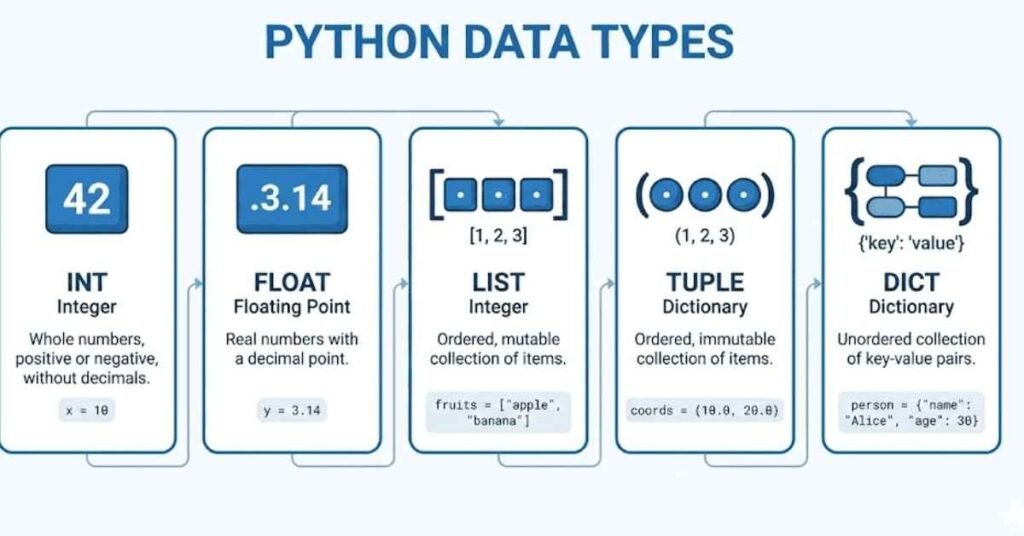

Q1. What are the main data types in Python used for machine learning?

Python has several core data types that you’ll use constantly in machine learning projects. The basic ones include integers for whole numbers, floats for decimal numbers, strings for text data, and booleans for true or false values. For machine learning specifically, you’ll work heavily with lists to store collections of data, tuples for immutable sequences, dictionaries for key-value pairs like feature names and values, and sets for unique elements. Understanding these is crucial because your entire dataset manipulation depends on handling these types correctly.

Q2. How do you handle string manipulation in Python for text preprocessing?

String manipulation is essential when working with text data in machine learning. You can use methods like split to break text into words, strip to remove whitespace, lower or upper to standardize case, and replace to substitute characters. For example, if you’re cleaning customer reviews, you might use text.lower().strip() to make everything lowercase and remove extra spaces. You can also use slicing with brackets to extract parts of strings, and the join method to combine multiple strings together.

Q3. What is the difference between a list and a tuple in Python?

Lists and tuples might look similar, but they have one key difference. Lists are mutable, meaning you can change them after creation by adding, removing, or modifying elements. Tuples are immutable, so once you create them, they cannot be changed. In machine learning, you use lists when you need flexibility, like storing training data that might be updated. Tuples are better for fixed data like image dimensions or coordinates that should never change. Tuples are also slightly faster and use less memory.

Q4. Explain list comprehension and why it’s useful in data processing.

List comprehension is a compact way to create lists in Python using a single line of code. Instead of writing a for loop with multiple lines, you can write something like squared_numbers equals bracket x multiplied by x for x in range ten bracket. This creates a list of squared numbers from zero to nine. In machine learning, list comprehension makes your code cleaner and often faster when transforming data, filtering datasets, or applying functions to multiple elements. It’s particularly useful when preprocessing features or creating new variables from existing ones.

Q5. What are Python dictionaries and how are they used in machine learning?

Dictionaries store data as key-value pairs, like a real dictionary stores words and definitions. In machine learning, dictionaries are incredibly useful for storing model parameters, hyperparameters, or mapping categorical values to numbers. For example, you might create a dictionary to map color names to numeric codes like red colon one, blue colon two. You can access values quickly using keys, add new pairs easily, and iterate through both keys and values. They’re also perfect for storing model configurations or evaluation metrics.

1.2 Control Flow and Loops

Q6. How do if-else statements work in Python and their role in ML pipelines?

If-else statements help your program make decisions based on conditions. In machine learning, you use them constantly for data validation, error handling, and implementing logic. For example, you might check if a value is missing before processing it, or decide which algorithm to use based on dataset size. The syntax is straightforward with if condition followed by colon, then indented code. You can add elif for multiple conditions and else for the default case. This conditional logic is fundamental for building robust ML pipelines.

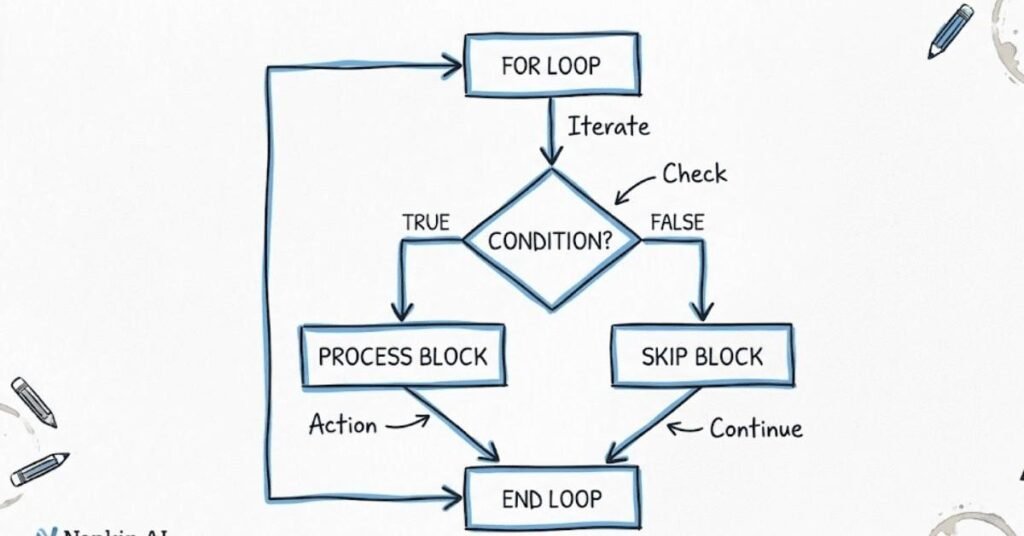

Q7. Explain the difference between for loops and while loops.

For loops iterate over a sequence for a predetermined number of times, while while loops continue until a condition becomes false. In machine learning, for loops are more common because you usually know how many iterations you need, like looping through each data point or each training epoch. You write for item in collection followed by your code. While loops are useful when you don’t know how many iterations you need upfront, like training a model until it reaches a certain accuracy threshold. Understanding when to use each makes your code more efficient.

Q8. What is the enumerate function and when should you use it?

Enumerate is a handy function that gives you both the index and the value when looping through a sequence. Instead of writing a counter variable manually, enumerate does it automatically. For example, for index comma value in enumerate my list gives you both. In machine learning, this is useful when you need to track position while processing data, like labeling which row you’re on in a dataset or tracking which epoch you’re in during model training. It makes your code cleaner and more readable.

Q9. How do you use break and continue statements in loops?

Break and continue give you control over loop execution. Break immediately exits the entire loop, while continue skips the rest of the current iteration and moves to the next one. In machine learning, you might use break when you’ve found what you’re looking for and don’t need to continue searching, saving computation time. Continue is useful for skipping invalid data points without stopping the entire process. For example, if you encounter a corrupted image in your dataset, you can use continue to skip it and process the next one.

Q10. What are nested loops and when are they necessary in ML?

Nested loops are loops inside other loops. They’re necessary when working with multidimensional data like matrices or images. For instance, to process every pixel in an image, you need one loop for rows and another for columns. In machine learning, you use nested loops for tasks like calculating distances between all pairs of points, comparing every prediction with every actual value, or performing grid search over multiple hyperparameters. However, they can be slow, so you should use vectorized operations when possible.

1.3 Functions and Lambda Expressions

Q11. Why are functions important in machine learning code?

Functions are reusable blocks of code that perform specific tasks. In machine learning, they help you organize code, avoid repetition, and make your work more maintainable. You might create a function for data preprocessing, another for model training, and another for evaluation. This way, you can call these functions whenever needed without rewriting code. Functions also make debugging easier because you can test each part independently. Good function design is the difference between messy scripts and professional ML code.

Q12. How do you define a function with default parameters?

Default parameters give function arguments preset values that are used if no value is provided when calling the function. You define them in the function signature like def train_model learning_rate equals zero point zero one. This is extremely useful in machine learning for setting default hyperparameters. Users can call your function with just required arguments and get sensible defaults, or they can override any parameter they want to customize. It makes your functions more flexible and user-friendly.

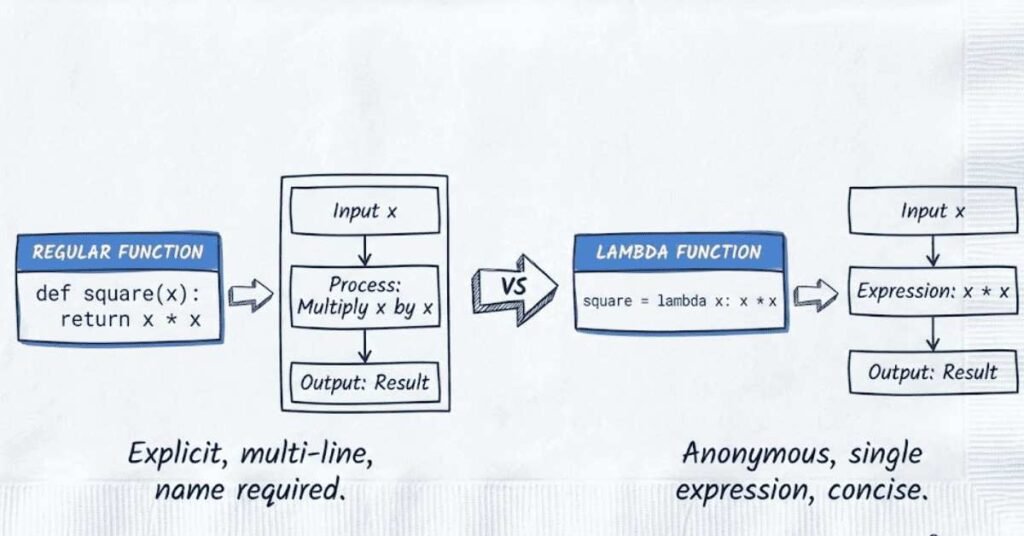

Q13. What are lambda functions and when should you use them?

Lambda functions are small anonymous functions defined in a single line without a formal def statement. They’re written as lambda arguments colon expression. In machine learning, you use them for quick operations that don’t need a full function definition, like applying a transformation to data. For example, when using map or filter functions, or sorting data with custom criteria. If your operation is simple and used only once, lambda is perfect. For complex operations used multiple times, regular functions are better.

Q14. Explain the concept of function scope and global variables.

Scope determines where variables can be accessed in your code. Variables defined inside a function have local scope and cannot be accessed outside. Variables defined at the top level have global scope and can be accessed anywhere. In machine learning projects, managing scope properly prevents bugs and makes code predictable. You should avoid global variables for model parameters or data because they can be accidentally modified. Instead, pass data as function arguments and return results explicitly.

Q15. How do you return multiple values from a function?

Python functions can return multiple values by separating them with commas. The function actually returns a tuple containing all values. For example, return accuracy comma loss comma model returns three values that you can unpack when calling the function. In machine learning, this is incredibly useful for returning multiple metrics from evaluation functions, or returning both predictions and confidence scores. You can unpack them as accuracy comma loss comma model equals evaluate_model or keep them as a tuple.

1.4 Object-Oriented Programming Concepts

Q16. What is Object-Oriented Programming and why is it used in ML frameworks?

Object-Oriented Programming organizes code around objects that combine data and functions that work with that data. Instead of having separate variables and functions floating around, you group related things together in classes. In machine learning, frameworks like TensorFlow and PyTorch use OOP extensively. Your model is an object with attributes like weights and methods like fit and predict. This makes code more organized, reusable, and easier to understand. You can create custom classes for datasets, models, or preprocessing pipelines.

Q17. How do you create a class in Python?

You create a class using the class keyword followed by the class name. Inside, you define an init method that runs when creating new objects, setting up initial attributes. Then you add other methods for different functionalities. For example, a NeuralNetwork class might have init to set layers, a forward method to make predictions, and a train method to update weights. Each method’s first parameter is self, which refers to the specific object instance. Classes help you build custom ML components that integrate smoothly with existing frameworks.

Q18. What is the difference between a class and an object?

A class is a blueprint or template that defines what something should look like and what it can do. An object is an actual instance created from that class. Think of a class as a cookie cutter and objects as the actual cookies made from it. In machine learning, you might have a DecisionTree class that defines how decision trees work. Then you create multiple tree objects from that class, each potentially trained on different data. The class defines the structure, while objects are the real working models.

Q19. Explain inheritance and how it’s used in ML model development.

Inheritance allows you to create new classes based on existing ones, inheriting their attributes and methods. The new class can add or override functionality. In machine learning, this is powerful for building model hierarchies. For example, Keras has a base Model class, and specific architectures like Sequential inherit from it. You can create your own custom model class that inherits from a base class, automatically getting standard methods while adding your specific logic. This promotes code reuse and consistency.

Q20. What are class attributes versus instance attributes?

Class attributes are shared by all instances of a class, while instance attributes are unique to each object. Class attributes are defined directly in the class body, while instance attributes are typically set in the init method using self. In machine learning, you might use a class attribute to store the algorithm name that all instances share, while instance attributes store the specific weights and hyperparameters unique to each trained model. Understanding this distinction prevents bugs where you accidentally share data between different models.

1.5 File Handling and Exception Management

Q21. How do you read data from a CSV file in Python?

Reading CSV files is a fundamental skill in machine learning since most datasets come in this format. You can use Python’s built-in csv module or the more powerful pandas library. With pandas, you simply write pd dot read_csv followed by the filename. This loads the entire dataset into a DataFrame that you can easily manipulate. You can specify parameters like which delimiter to use, whether there’s a header row, which columns to read, and how to handle missing values. Understanding these options helps you load data correctly.

Q22. What is the difference between reading a file with open versus using pandas?

Using open gives you raw file access where you read line by line and parse data yourself. This is useful for custom file formats or when you need fine control. Pandas read_csv, on the other hand, automatically parses the data into a structured DataFrame with rows and columns, handles different data types, and provides many options for data cleaning during import. For machine learning, pandas is usually better because it saves time and provides immediate access to powerful data manipulation methods. Use open for simple text files or custom formats.

Q23. How do you handle exceptions in Python and why is it important?

Exceptions are errors that occur during program execution. Handling them prevents your code from crashing unexpectedly. You use try-except blocks where you put potentially problematic code in the try section and handle errors in the except section. In machine learning, exceptions might occur when loading corrupted data files, when calculations produce invalid results like division by zero, or when memory runs out. Proper exception handling makes your ML pipelines robust, allowing them to skip bad data points or retry operations instead of failing completely.

Q24. What are the common exceptions you might encounter in ML code?

Several exceptions frequently appear in machine learning work. FileNotFoundError happens when trying to load a nonexistent dataset. ValueError occurs when functions receive the wrong type or shape of data, like passing strings to a function expecting numbers. MemoryError appears when your dataset is too large for available RAM. IndexError happens when accessing array indices that don’t exist. TypeError occurs when performing operations on incompatible types. KeyError appears when accessing missing dictionary keys. Knowing these helps you write better error handling code.

Q25. How do you write data to files in Python?

Writing data to files preserves your results for later use. With basic Python, you open a file in write mode and use the write method. For machine learning, you typically use pandas to write DataFrames to CSV files with to_csv, or numpy to save arrays with save or savetxt. You can also pickle Python objects to save entire models or preprocessors. When writing, consider whether to overwrite existing files or append to them, what format to use, and whether to compress large files. Proper file writing ensures reproducibility.

1.6 Python Libraries Overview

Q26. What are the essential Python libraries for machine learning?

Several libraries form the foundation of machine learning in Python. NumPy handles numerical arrays and mathematical operations efficiently. Pandas manages structured data in DataFrames, perfect for datasets with rows and columns. Matplotlib and Seaborn create visualizations to understand data and results. Scikit-learn provides standard machine learning algorithms and tools. TensorFlow and PyTorch enable deep learning. SciPy offers scientific computing functions. Each library has its strength, and professional ML engineers use combinations of these tools based on project needs.

Q27. Why is NumPy faster than regular Python lists for numerical operations?

NumPy arrays are much faster than Python lists for numerical work because of how they store data in memory. Lists store pointers to objects scattered in memory, while NumPy arrays store data in continuous blocks. This allows NumPy to use optimized C code for operations and leverage CPU features for parallel processing. When you multiply a NumPy array by a number, it happens in one optimized operation. With lists, you’d loop through each element individually in slow Python code. For machine learning with millions of numbers, this speed difference is crucial.

Q28. What is the purpose of importing libraries with aliases?

Importing libraries with aliases gives them shorter names for convenience. You write import numpy as np or import pandas as pd. This saves typing and follows community conventions that make code recognizable to other developers. Everyone knows np refers to NumPy and pd to Pandas. Using standard aliases also makes your code more readable and maintainable. However, for your own custom modules, descriptive imports are better than obscure aliases.

Q29. How do you install external Python libraries?

You install libraries using package managers, primarily pip. From your command line or terminal, you type pip install followed by the library name, like pip install scikit-learn. For specific versions, you add equals signs and version numbers. Conda is another popular option, especially for managing dependencies in data science environments. You can also install multiple packages at once by listing them in a requirements text file and running pip install minus r requirements dot txt. Managing installations properly ensures reproducibility across different computers.

Q30. What are virtual environments and why should you use them?

Virtual environments are isolated Python installations that keep project dependencies separate. Without them, all projects share the same libraries, which causes conflicts when different projects need different versions. You create a virtual environment with venv or conda, activate it, then install project-specific packages. In machine learning, this is essential because different projects might need different TensorFlow versions or conflicting package requirements. Virtual environments ensure your project works consistently and doesn’t break when you update libraries for other work.

Quick Tip: Need Clear Guidance? Explore Machine Learning How-To Guide!

Chapter 2: Mathematics for Machine Learning

2.1 Linear Algebra Essentials

Q31. What is a vector and how is it used in machine learning?

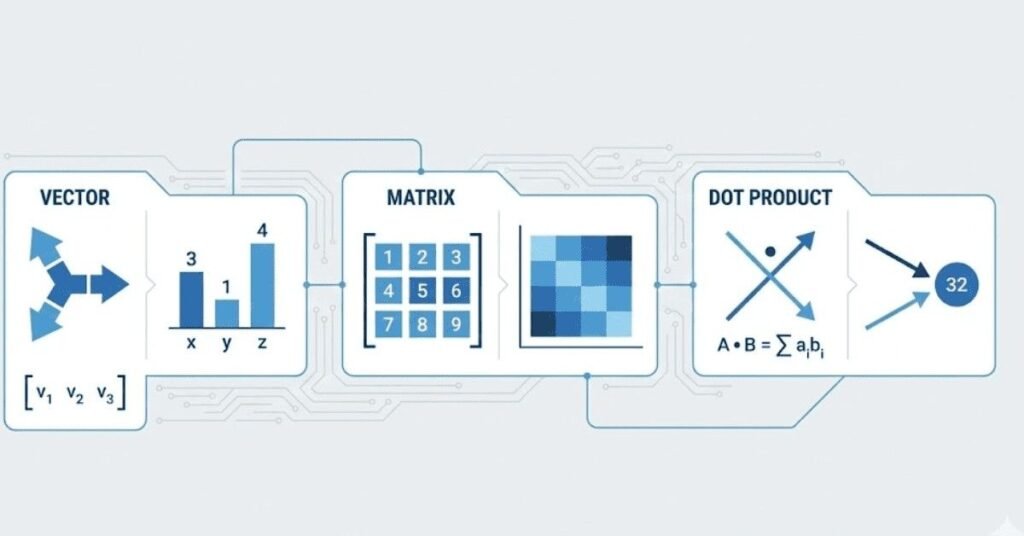

A vector is an ordered list of numbers that represents a point in space or a direction. In machine learning, vectors are everywhere. Each data point in your dataset is a vector where each number represents a feature. For example, a house might be represented as a vector containing size, number of rooms, and age. Model parameters are also vectors. Operations on vectors like addition, scaling, and dot products form the mathematical foundation of how algorithms learn patterns from data.

Q32. Explain what a matrix is and its role in ML algorithms.

A matrix is a rectangular array of numbers arranged in rows and columns. Your entire dataset is typically stored as a matrix where each row is a data point and each column is a feature. In deep learning, matrices represent layers of neurons and the weights connecting them. Many ML operations involve matrix multiplication, which efficiently computes relationships between features and predictions. Understanding matrices is essential because most algorithms work by performing mathematical operations on these number grids.

Q33. What is the dot product and why is it important?

The dot product takes two vectors and produces a single number by multiplying corresponding elements and summing the results. It measures how much two vectors point in the same direction. In machine learning, dot products appear everywhere. Linear regression predictions are dot products between your input features and learned weights. Neural network layers use dot products to combine inputs. Similarity between data points is often measured using dot products. This simple operation underpins much of how models make predictions.

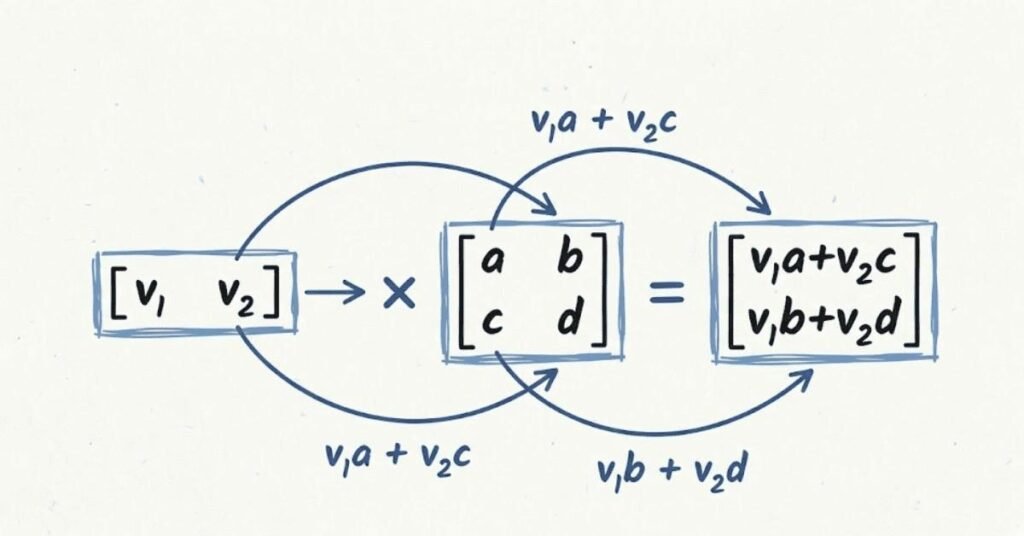

Q34. What does matrix multiplication represent in neural networks?

Matrix multiplication in neural networks represents information flowing through layers and being transformed. When you multiply your input matrix by a weight matrix, you’re computing a weighted sum for each neuron. Each neuron receives inputs from all previous neurons, multiplied by learned weights, and produces an output. This transformation happens at each layer. Understanding matrix multiplication helps you see how networks process information, why certain layer sizes work together, and how gradients flow backward during training.

Q35. What is the transpose of a matrix?

Transposing a matrix flips it over its diagonal, swapping rows and columns. A three by two matrix becomes a two by three matrix. In machine learning, transposition is used constantly in mathematical formulas. When computing gradients, you often need to transpose weight matrices. When reshaping data or preparing it for specific operations, transposition ensures dimensions align correctly. While it seems like a simple flip, proper transposition is crucial for making matrix operations work correctly in ML algorithms.

2.2 Matrix Operations and Transformations

Q36. What is the identity matrix and why is it special?

The identity matrix is a square matrix with ones on the diagonal and zeros elsewhere. It’s special because multiplying any matrix by the identity matrix leaves it unchanged, similar to how multiplying a number by one leaves it unchanged. In machine learning, the identity matrix appears in regularization techniques, in understanding inverse matrices, and in initialization strategies for neural networks. It represents the concept of doing nothing, which is sometimes exactly what you need mathematically.

Q37. Explain matrix inversion and when it’s used.

Matrix inversion finds a matrix that, when multiplied with the original, produces the identity matrix. It’s like finding the reciprocal of a number. In machine learning, inversion appears in the closed-form solution for linear regression and in certain optimization algorithms. However, computing inverses is expensive and numerically unstable, especially for large matrices. Modern ML typically avoids direct inversion, using iterative methods instead. Understanding inversion helps you grasp why some algorithms work and why others use different approaches.

Q38. What are eigenvalues and eigenvectors?

Eigenvalues and eigenvectors describe special directions in which a matrix stretches space. When you multiply a matrix by its eigenvector, the result points in the same direction, just scaled by the eigenvalue. These concepts appear in Principal Component Analysis, which finds directions of maximum variance in data. They also help analyze neural network stability and behavior. While the math can be complex, think of eigenvectors as important directions in your data and eigenvalues as how important each direction is.

Q39. What is matrix rank and why does it matter?

Matrix rank tells you how many independent rows or columns a matrix has. A matrix is full rank if all rows or columns are independent, meaning each provides unique information. In machine learning, rank matters for several reasons. Low rank matrices indicate redundant features in your data. Rank affects whether certain operations like inversion are possible. In dimensionality reduction, you deliberately create lower rank approximations of data. Understanding rank helps you identify data quality issues and choose appropriate algorithms.

Q40. How do you determine if a matrix is singular?

A singular matrix is one that cannot be inverted, similar to how you cannot divide by zero. You can identify singular matrices by calculating their determinant, which equals zero for singular matrices. Singular matrices occur when rows or columns are linearly dependent, meaning some can be expressed as combinations of others. In machine learning, singularity indicates problems like perfect multicollinearity in linear regression. Modern algorithms handle near-singular matrices using regularization techniques that add small values to make inversion stable.

2.3 Statistics Fundamentals

Q41. What is the difference between mean, median, and mode?

These three measures describe the center of your data differently. Mean is the average, calculated by summing all values and dividing by count. It’s sensitive to extreme values called outliers. Median is the middle value when data is sorted, making it robust to outliers. Mode is the most frequent value. In machine learning, choosing the right measure matters. For house prices with some mansions, median better represents typical prices than mean. For predicting categories, mode makes sense. Understanding these differences helps you summarize data appropriately.

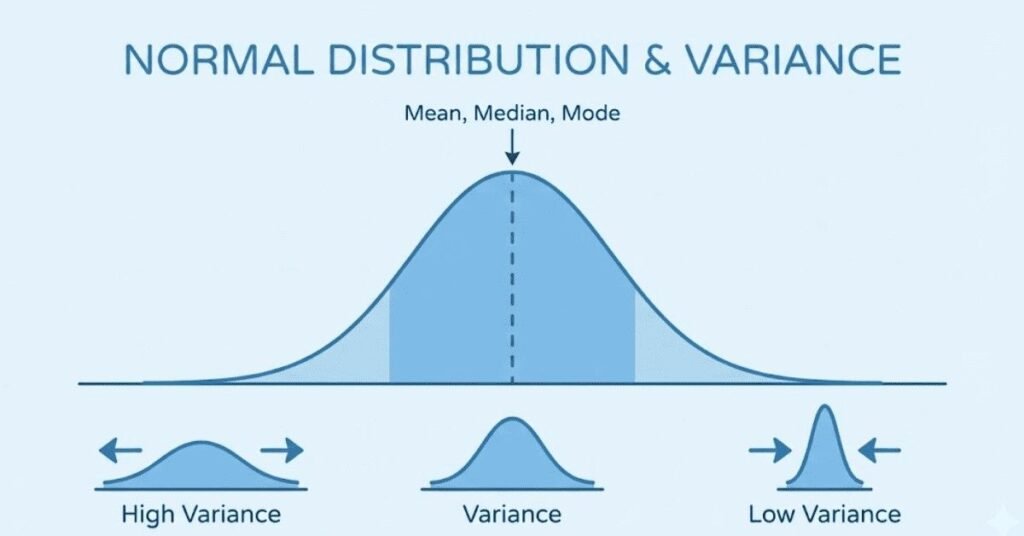

Q42. Explain variance and standard deviation.

Variance measures how spread out your data is from the mean. You calculate it by finding the average squared difference from the mean. Standard deviation is simply the square root of variance, putting the measure back in the original units. In machine learning, these statistics appear everywhere. Feature scaling uses standard deviation. Understanding prediction uncertainty requires variance. High variance in model predictions indicates overfitting. Low variance might indicate underfitting. These measures help you understand both your data and model behavior.

Q43. What is covariance and correlation?

Covariance measures whether two variables tend to increase or decrease together. Positive covariance means they move together, negative means they move opposite, and zero means no relationship. Correlation is standardized covariance ranging from negative one to positive one, making it easier to interpret. In machine learning, correlation helps you understand relationships between features, identify redundant features, and interpret model behavior. High correlation between features might indicate multicollinearity problems in linear models.

Q44. What is a normal distribution and why is it important?

A normal distribution, shaped like a bell curve, describes how many natural phenomena behave. Most values cluster around the mean, with fewer values appearing as you move away. It’s completely described by its mean and standard deviation. In machine learning, normal distributions appear constantly. Many algorithms assume data is normally distributed. Error terms in linear regression should be normally distributed. The Central Limit Theorem explains why averages tend toward normal distributions. Understanding normality helps you choose appropriate models and validate assumptions.

Q45. What are percentiles and quartiles?

Percentiles divide your data into hundred equal parts. The 25th percentile means twenty-five percent of data falls below that value. Quartiles are specific percentiles dividing data into four parts, at the 25th, 50th, and 75th percentiles. In machine learning, percentiles help you understand data distribution, identify outliers, and create features. The interquartile range between the 25th and 75th percentiles is robust to outliers. When analyzing model errors, looking at different percentiles reveals whether your model struggles with certain types of predictions.

2.4 Probability Theory

Q46. What is probability and how is it used in machine learning?

Probability quantifies uncertainty, ranging from zero for impossible events to one for certain events. In machine learning, probability is fundamental. Classification models output probability estimates for each class. Bayesian methods explicitly model uncertainty. Probabilistic graphical models represent relationships between variables. Understanding probability helps you interpret model confidence, quantify prediction uncertainty, and make better decisions when outcomes are uncertain. Many ML algorithms are essentially sophisticated probability calculators finding the most likely explanation for data.

Q47. Explain conditional probability.

Conditional probability measures the chance of one event happening given that another has occurred. Written as P of A given B, it represents the probability of A when you know B is true. In machine learning, conditional probabilities are everywhere. Naive Bayes classifiers compute the probability of a class given observed features. In sequence models, you predict the next word given previous words. Medical diagnosis models estimate disease probability given symptoms. Understanding conditional probability helps you build models that incorporate available information to make better predictions.

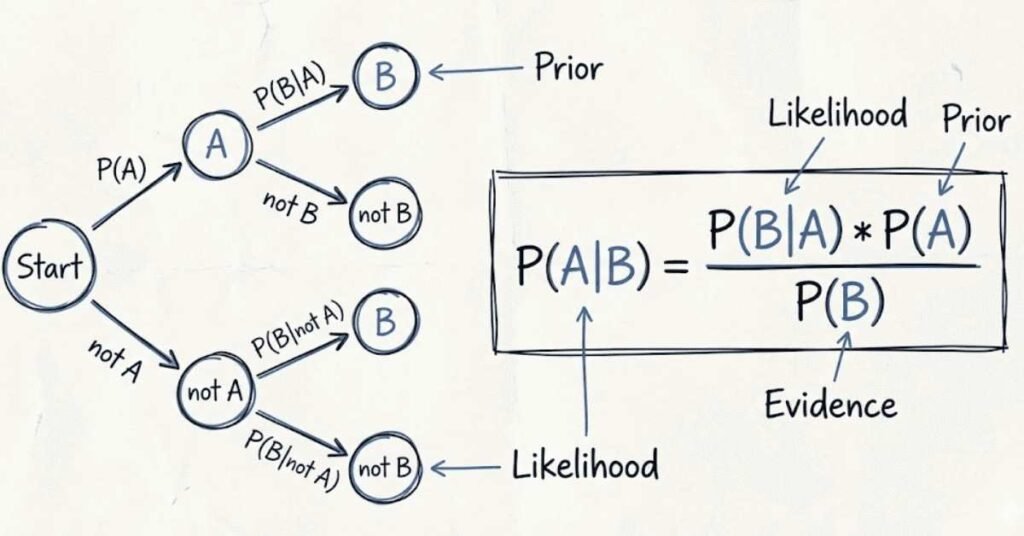

Q48. What is Bayes Theorem?

Bayes Theorem provides a formula for updating beliefs based on new evidence. It relates the probability of A given B to the probability of B given A. In machine learning, this enables you to reverse conditional probabilities. Given how likely symptoms are for each disease, Bayes Theorem computes how likely each disease is given observed symptoms. Naive Bayes classifiers use this principle. Bayesian optimization for hyperparameter tuning applies it. The theorem formalizes learning from data, making it foundational to many ML approaches.

Q49. What is the difference between independent and dependent events?

Independent events don’t affect each other. Knowing one happened doesn’t change the probability of the other. Coin flips are independent since previous results don’t influence future ones. Dependent events do affect each other. Drawing cards without replacement creates dependence since each draw changes remaining possibilities. In machine learning, assuming independence often simplifies calculations, like Naive Bayes assuming features are independent. Understanding when this assumption holds or fails affects model accuracy and choice of algorithm.

Q50. What are random variables?

A random variable assigns numbers to outcomes of random processes. Rather than describing outcomes as heads or tails, you assign numeric values like zero or one. Random variables can be discrete, taking specific values like integers, or continuous, taking any value in a range. In machine learning, your data can be viewed as observations of random variables. Features are random variables with certain distributions. Understanding this perspective helps you apply probability theory to data analysis and modeling.

2.5 Differential Calculus Applications

Q51. What is a derivative and why does it matter in machine learning?

A derivative measures how a function changes as its input changes, representing the slope at each point. In machine learning, derivatives are crucial for training models. Gradient descent uses derivatives to determine how to adjust model parameters to reduce error. The derivative tells you which direction to move parameters and how quickly the error changes. Without derivatives, you couldn’t efficiently optimize the millions of parameters in modern neural networks. Understanding derivatives helps you grasp how learning algorithms actually work.

Q52. What is a gradient?

A gradient is the multidimensional version of a derivative. While a derivative applies to functions with one input, gradients apply to functions with multiple inputs. The gradient is a vector pointing in the direction of steepest increase, with each component being the partial derivative with respect to one input. In machine learning, you compute gradients of your loss function with respect to all model parameters. These gradients tell you how to adjust each parameter to reduce error, forming the basis of gradient descent optimization.

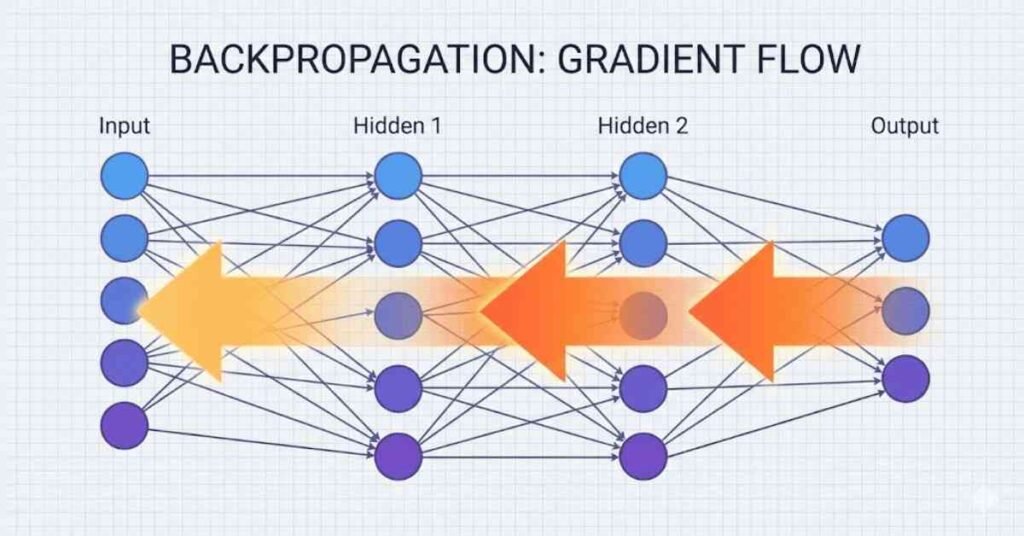

Q53. Explain the chain rule and its importance in backpropagation.

The chain rule is a calculus technique for finding derivatives of composite functions. If you have a function inside another function, the chain rule tells you how to break down the derivative into manageable pieces. In neural networks, backpropagation uses the chain rule to compute gradients layer by layer, flowing backward from output to input. Each layer’s gradient depends on the next layer’s gradient, creating a chain of computations. Without the chain rule, training deep neural networks would be impossible since you couldn’t efficiently compute all the needed gradients.

Q54. What is a partial derivative?

A partial derivative measures how a function changes with respect to one variable while keeping all other variables constant. For a function with multiple inputs, you have one partial derivative for each input. In machine learning, when optimizing models with many parameters, you compute partial derivatives for each parameter. This tells you how changing just that parameter affects your loss. Collecting all partial derivatives gives you the gradient. Understanding partial derivatives helps you see how each parameter contributes to model performance.

Q55. What is the purpose of second derivatives in optimization?

Second derivatives measure how the rate of change itself changes, indicating curvature. While first derivatives give slope, second derivatives tell whether you’re in a valley, on a hill, or at an inflection point. In machine learning optimization, second derivatives appear in advanced methods like Newton’s method and in understanding convergence behavior. Positive second derivatives indicate you’re in a valley, suggesting you’ve found a minimum. Negative values indicate a hill or maximum. Second-order optimization methods use this curvature information to converge faster than simple gradient descent.

2.6 Mathematical Optimization Basics

Q56. What is an optimization problem in machine learning?

An optimization problem involves finding the best solution from all possible solutions according to some criterion. In machine learning, you optimize model parameters to minimize prediction error or maximize accuracy. The criterion you optimize is called the objective or loss function. The challenge is that with millions of parameters and complex models, finding the absolute best solution is often impossible. Instead, algorithms find good solutions using techniques like gradient descent. Understanding optimization helps you choose algorithms, set hyperparameters, and diagnose training issues.

Q57. What is a local minimum versus a global minimum?

A local minimum is a point where the function value is lower than nearby points but might not be the absolute lowest point overall. A global minimum is the absolute lowest point across the entire function. Imagine a hilly landscape where local minima are valleys surrounded by higher ground, while the global minimum is the deepest valley overall. In machine learning, optimization algorithms often get stuck in local minima, finding decent but not optimal solutions. Deep learning with many parameters has countless local minima, yet surprisingly, most are acceptably good for practical purposes.

Q58. What is a convex function and why is convexity important?

A convex function curves upward like a bowl, having the property that any local minimum is also the global minimum. Mathematically, the line segment between any two points on the function lies above the function itself. Convex functions are important in machine learning because they’re easier to optimize. If your loss function is convex, gradient descent is guaranteed to find the global minimum. Linear regression with squared error is convex. However, neural networks are non-convex, making optimization more challenging but still practically solvable.

Q59. What are constraints in optimization problems?

Constraints are restrictions on acceptable solutions. You might require parameters to be positive, sum to one, or stay within certain bounds. Constrained optimization finds the best solution while satisfying all constraints. In machine learning, constraints appear when normalizing probabilities, enforcing sparsity, or limiting model complexity. Some algorithms handle constraints directly, while others use penalty methods that add terms to the loss function when constraints are violated. Understanding constraints helps you formulate problems correctly and choose appropriate optimization methods.

Q60. What is the difference between convex and non-convex optimization?

Convex optimization deals with convex functions where every local minimum is global, making problems easier to solve with guarantees. Non-convex optimization tackles functions with multiple local minima and complex landscapes where finding the global minimum is hard or impossible. Traditional machine learning often uses convex optimization. Deep learning uses non-convex optimization since neural networks aren’t convex. Despite theoretical difficulties, practical algorithms and techniques like momentum, adaptive learning rates, and good initialization make non-convex optimization work remarkably well for training deep models.

Chapter 3: Data Libraries - NumPy

3.1 Introduction to NumPy Arrays

Q61. What is NumPy and why is it essential for machine learning?

NumPy is the fundamental package for numerical computing in Python, providing support for large multidimensional arrays and matrices along with mathematical functions. It’s essential for machine learning because it makes numerical operations fast and convenient. Instead of using slow Python loops, NumPy performs operations on entire arrays at once using optimized C code. All other ML libraries build on NumPy. Your data starts as NumPy arrays, matrices in scikit-learn are NumPy arrays, and even TensorFlow tensors work similarly to NumPy arrays.

Q62. What is the difference between a Python list and a NumPy array?

Python lists are flexible containers that can hold different types of objects, but they’re slow for numerical operations. NumPy arrays must contain elements of the same type, but this restriction enables massive speed improvements. Arrays store data in continuous memory blocks, allowing vectorized operations that process all elements simultaneously. For machine learning with thousands or millions of numbers, NumPy arrays are hundreds of times faster. Lists are for general collections, while arrays are for numerical computation where performance matters.

Q63. How do you create a NumPy array from a Python list?

Creating NumPy arrays from lists is straightforward. You use np.array and pass your list as an argument. For example, np.array bracket one comma two comma three creates a one-dimensional array. For two-dimensional arrays, you pass a list of lists where each inner list becomes a row. You can also specify the data type using the dtype parameter, controlling whether you want integers, floats, or other types. This conversion is often your first step when bringing data into NumPy for machine learning processing.

Q64. What are NumPy array attributes like shape, size, and dtype?

NumPy arrays have useful attributes that describe them. Shape is a tuple showing dimensions, like three comma four for a three-row, four-column matrix. Size gives the total number of elements. Dtype tells you the data type of elements, like int64 or float32. Ndim shows the number of dimensions. These attributes help you understand your data structure, debug dimension mismatches, and verify operations produce expected results. Checking shape is especially important to ensure arrays align correctly for mathematical operations.

Q65. How do you check and change the data type of a NumPy array?

You check data type using the dtype attribute. To change types, use the astype method with the desired type. For example, array.astype np.float32 converts to 32-bit floats. Type conversion matters in machine learning for memory efficiency and computational speed. Using float32 instead of float64 halves memory usage and often speeds up GPU computation without significantly affecting accuracy. Converting data types appropriately optimizes your pipeline while ensuring numerical precision meets your needs.

3.2 Array Creation and Manipulation

Q66. What are different ways to create NumPy arrays besides converting lists?

NumPy provides many convenience functions for array creation. np.zeros creates arrays filled with zeros, useful for initializing result containers. np.ones creates arrays of ones. np.arange creates sequences of numbers similar to Python’s range. np.linspace creates evenly spaced numbers between two endpoints. np.eye creates identity matrices. np.random provides functions for random arrays with various distributions. In machine learning, you use these for initializing weights, creating test data, generating ranges for plotting, and setting up computational structures.

Q67. How does np.arange differ from np.linspace?

np.arange and np.linspace both create sequences, but differently. np.arange takes a start, stop, and step size, creating values spaced by the step amount. However, the exact endpoint might not be included due to floating-point precision. np.linspace takes a start, stop, and number of points, creating exactly that many evenly spaced values including both endpoints. Use arange when you care about step size, like creating array indices. Use linspace when you care about having a specific number of points, like creating a smooth range for plotting model predictions.

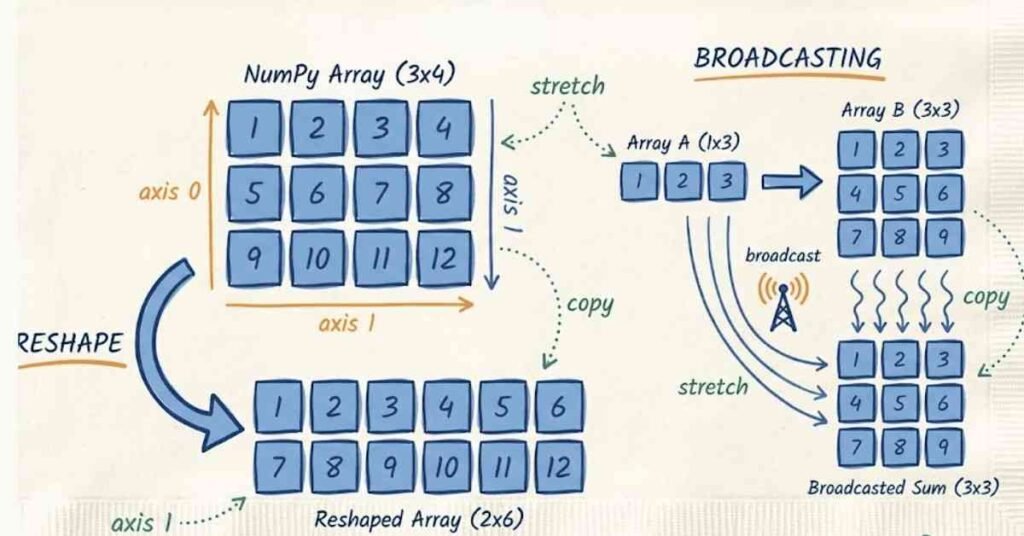

Q68. What is broadcasting in NumPy?

Broadcasting is NumPy’s way of performing operations on arrays of different shapes without explicitly replicating data. When you add a small array to a large one, NumPy automatically broadcasts the small array across the large one. For example, adding a single number to an array adds that number to every element. Adding a one-dimensional array to a two-dimensional array can add the same vector to every row. Broadcasting eliminates loops, saves memory, and makes code cleaner. Understanding broadcasting rules helps you write efficient vectorized code.

Q69. How do you reshape NumPy arrays?

Reshaping changes array dimensions without changing data. Use the reshape method with the desired shape. For example, array.reshape three comma four transforms a twelve-element array into a three-by-four matrix. The total number of elements must remain the same. You can use negative one in one dimension to have NumPy calculate that dimension automatically. In machine learning, reshaping is constant. You flatten images into vectors for some algorithms, reshape predictions to match targets, and adjust dimensions for specific operations. The reshape method is essential for preparing data correctly.

Q70. What does flattening an array mean?

Flattening converts a multidimensional array into a one-dimensional vector. You use the flatten or ravel methods. The difference is that flatten creates a copy while ravel creates a view when possible. In machine learning, flattening is common when feeding multidimensional data like images into algorithms expecting vectors. For example, a 28 by 28 pixel image becomes a 784-element vector. Understanding when to flatten and when to preserve structure is important for different algorithms and network architectures.

3.3 Indexing and Slicing Techniques

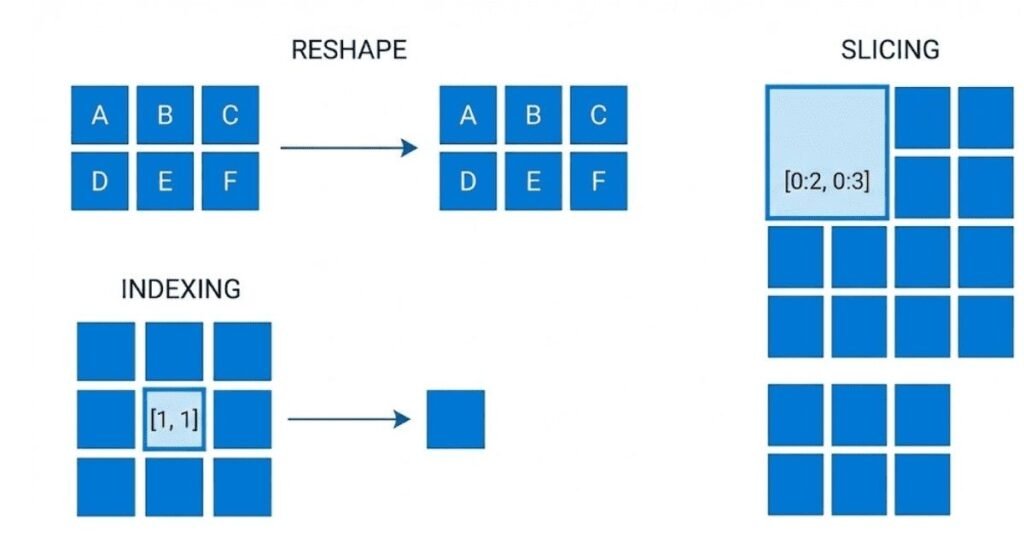

Q71. How does NumPy array indexing work?

NumPy indexing accesses specific elements or subsets of arrays. For one-dimensional arrays, use bracket notation with an index like array bracket three. For multidimensional arrays, separate indices with commas like array bracket two comma five for row two, column five. Negative indices count from the end. You can index multiple dimensions at once, making it easy to access specific values in matrices or higher-dimensional tensors. Proper indexing is fundamental for selecting data, examining specific values, and debugging your code.

Q72. Explain slicing in NumPy arrays.

Slicing extracts portions of arrays using colon notation. array bracket start colon stop colon step specifies the range. Omitting values uses defaults: start defaults to beginning, stop to end, and step to one. For multidimensional arrays, you slice each dimension separately with commas. For example, array bracket colon comma one selects all rows and column one. Slicing creates views rather than copies, meaning changes affect the original array. In machine learning, slicing selects training batches, extracts specific features, or focuses on particular data regions.

Q73. What is fancy indexing?

Fancy indexing uses arrays of indices to select elements in arbitrary order. Instead of simple slices, you pass an array of positions you want. For example, array bracket bracket zero comma two comma five bracket bracket selects elements at positions zero, two, and five. For multidimensional arrays, you can fancy index each dimension. This is powerful for selecting non-contiguous data, reordering elements, or picking specific samples from a dataset. Unlike slicing, fancy indexing creates copies rather than views.

Q74. How do you use boolean indexing?

Boolean indexing uses true-false arrays to select elements meeting certain conditions. You create a boolean array with a condition like array greater than five, which marks which elements satisfy the condition. Then you use this boolean array as an index like array bracket array greater than five bracket to select only those elements. In machine learning, boolean indexing filters data, removes outliers, selects specific classes, or masks invalid values. It’s an elegant way to work with subsets of data based on logical conditions.

Q75. What is the difference between a view and a copy?

A view references the original array’s data, so changes to the view affect the original. A copy is independent, so changes don’t affect the original. Slicing typically creates views, while fancy indexing creates copies. You can explicitly create copies with the copy method. This distinction matters in machine learning when you want to preserve original data while working with transformations. Accidentally modifying original data through a view causes subtle bugs. Understanding views versus copies prevents unexpected behavior and helps manage memory efficiently.

3.4 Array Transposition Methods

Q76. What does transposing an array mean?

Transposing swaps array dimensions, most commonly flipping a matrix’s rows and columns. You use the transpose method or the T attribute. An array with shape three by four becomes four by three. The element at row I, column J becomes the element at row J, column I. In machine learning, transposition aligns dimensions for matrix multiplication, prepares data for specific algorithms, and adjusts output formats. Understanding when to transpose prevents dimension errors and ensures operations work correctly.

Q77. How do you transpose multidimensional arrays?

For arrays with more than two dimensions, you specify how to permute axes using the transpose method with an axis argument. For example, transpose with argument zero comma two comma one swaps the second and third axes. This gives you complete control over dimension reordering. In deep learning, you might rearrange image tensors from channels-last to channels-first format or reorganize batch dimensions. The default transpose reverses all axes, but explicit axis specification lets you make precise rearrangements.

Q78. What is the swapaxes method?

The swapaxes method exchanges two specific axes while leaving others unchanged. You specify which two axes to swap. This is more convenient than full transposition when you only need to exchange two dimensions. For example, swapaxes one comma two swaps the second and third axes. In machine learning, this helps reformat tensors for different frameworks or operations. It’s particularly useful in image processing when you need to move the channel dimension to a different position.

Q79. When do you need to transpose arrays in ML workflows?

Transposition is needed frequently in machine learning. Matrix multiplication requires dimensions to align correctly, often necessitating transposition. Computing gradients involves transposing weight matrices. Some algorithms expect features as columns while your data has features as rows. Converting between different data format conventions requires transposition. Deep learning frameworks sometimes differ in whether they expect channels-first or channels-last image formats. Recognizing when dimensions don’t align and knowing how to transpose correctly is essential for preventing errors.

Q80. What is the moveaxis function?

The moveaxis function moves an axis from one position to another, sliding other axes to make room. You specify the source and destination positions. This provides another way to reorganize dimensions, sometimes more intuitive than transpose. For example, moveaxis source equals zero comma destination equals two moves the first axis to the third position. In machine learning, this helps rearrange tensors when you need a specific dimension order for operations or when converting between different data format conventions.

3.5 Universal Array Functions

Q81. What are universal functions in NumPy?

Universal functions, or ufuncs, are functions that operate element-wise on arrays, performing the same operation on each element efficiently. Examples include np.exp, np.log, np.sqrt, and trigonometric functions. These functions are implemented in compiled C code, making them much faster than Python loops. They also support broadcasting, allowing operations between arrays of different shapes. In machine learning, ufuncs appear everywhere: applying activation functions, computing losses, transforming features, and normalizing data. Understanding ufuncs helps you write fast, vectorized code.

Q82. How do mathematical operations work on NumPy arrays?

NumPy arrays support element-wise mathematical operations using standard operators. Addition, subtraction, multiplication, and division with the plus, minus, asterisk, and slash operators work element by element. Powers use the double asterisk operator. These operations are vectorized, applying to entire arrays at once without loops. For example, array times two multiplies every element by two. array1 plus array2 adds corresponding elements. This makes mathematical expressions clean and fast. Element-wise operations are default; for matrix multiplication, use the at symbol or dot method.

Q83. What is the difference between multiply and dot in NumPy?

The multiply function or asterisk operator performs element-wise multiplication, multiplying corresponding elements and returning an array of the same shape. The dot function or at symbol performs matrix multiplication following linear algebra rules, computing dot products of rows and columns. For two-dimensional arrays, dot performs traditional matrix multiplication. The shapes must be compatible: the inner dimensions must match. In machine learning, element-wise multiplication appears in scaling and masks, while dot multiplication computes predictions, propagates signals through networks, and performs many other core operations.

Q84. How do you apply functions like exponential or logarithm to arrays?

NumPy provides ufuncs for common mathematical functions. np.exp computes exponential for each element, used in softmax and sigmoid activations. np.log computes natural logarithm, appearing in cross-entropy loss. np.log10 and np.log2 provide other logarithm bases. np.sqrt computes square roots. These functions work element-wise on entire arrays, much faster than looping. For example, np.exp array exponentiates every element. These operations are fundamental in machine learning for transforming data, computing activations, and calculating metrics.

Q85. What are aggregation functions in NumPy?

Aggregation functions reduce arrays to single values or lower dimensions by computing statistics. np.sum adds elements, np.mean computes averages, np.max and np.min find extremes, np.std calculates standard deviation. You can aggregate the entire array or along specific axes. For example, np.mean array axis equals zero averages each column. In machine learning, aggregations compute batch statistics, calculate overall metrics, find maximum predictions, normalize features, and reduce dimensions. Understanding aggregation axes is crucial for operating on the correct dimensions of your data.

3.6 Array Processing Operations

Q86. How do you stack arrays together?

NumPy provides several stacking functions. np.vstack stacks arrays vertically, adding rows. np.hstack stacks horizontally, adding columns. np.concatenate joins arrays along an existing axis. np.stack creates a new axis and stacks along it. For example, stacking two arrays with shape ten by five vertically gives twenty by five. In machine learning, you stack arrays to combine datasets, merge batches, or assemble predictions. Choosing the right stacking function depends on how you want arrays arranged and whether you need a new dimension.

Q87. What is the difference between concatenate and stack?

Concatenate joins arrays along an existing axis, making that axis longer while keeping dimensions the same. Stack creates a new axis and arranges arrays along it, increasing dimensionality. For example, concatenating two ten by five arrays along axis zero gives twenty by five. Stacking them creates a two by ten by five array. In machine learning, concatenate combines similar data like adding more samples. Stack organizes separate arrays into a structured collection, useful when combining predictions from multiple models or organizing data batches.

Q88. How do you split arrays?

NumPy provides splitting functions opposite to stacking. np.split divides an array into multiple sub-arrays at specified positions. np.array_split splits into roughly equal parts even if the division isn’t exact. np.vsplit and np.hsplit split vertically and horizontally. For example, np.split array comma three splits into three equal parts along the first axis. In machine learning, splitting creates training and validation sets, separates features and targets, divides data into batches, or breaks large arrays into manageable chunks for processing.

Q89. What are tile and repeat functions?

The tile function repeats an entire array multiple times, like laying tiles. np.tile array comma two comma three repeats the array twice vertically and three times horizontally. The repeat function repeats individual elements. np.repeat array comma three repeats each element three times in place. In machine learning, these functions create replicated data for augmentation, broadcast values to match dimensions, or generate repeated patterns. Understanding when to use tile versus repeat depends on whether you want whole arrays duplicated or individual elements repeated.

Q90. How do you sort NumPy arrays?

NumPy provides sorting functions. np.sort returns a sorted copy, while array.sort sorts in place. You can sort along different axes for multidimensional arrays. np.argsort returns indices that would sort the array rather than sorted values themselves, useful for reordering other arrays consistently. np.partition partially sorts, efficiently finding top K elements without fully sorting. In machine learning, sorting identifies top predictions, ranks features by importance, orders data for visualization, or finds nearest neighbors. Choosing between sort and argsort depends on whether you need values or indices.

3.7 Array Input and Output Handling

Q91. How do you save NumPy arrays to files?

NumPy provides several saving functions. np.save writes a single array in binary format with npy extension. np.savez saves multiple arrays in a single compressed file with npz extension, accessing each array by name. For human-readable output, np.savetxt writes text files. Binary formats are faster and preserve precision perfectly but aren’t human-readable. In machine learning, saving arrays preserves processed datasets, stores model weights, saves predictions for analysis, or checkpoints intermediate results. Regular saving prevents data loss from crashes or errors.

Q92. How do you load NumPy arrays from files?

Loading matches saving functions. np.load reads npy files, returning the array. For npz files containing multiple arrays, np.load returns a dictionary-like object where you access arrays by name. np.loadtxt reads text files into arrays. When loading, verify the array shape and data type match expectations to catch corruption or format errors. In machine learning, loading retrieves saved datasets, loads pretrained weights, imports previously computed features, or resumes from checkpoints. Proper loading is essential for reproducibility and pipeline efficiency.

Q93. What is the difference between binary and text array formats?

Binary formats like npy store arrays in machine format, making saving and loading fast while preserving numeric precision exactly. They’re compact and efficient but not human-readable. Text formats like CSV store numbers as readable characters, allowing inspection in text editors and compatibility with other tools, but they’re slower, use more space, and may lose precision due to rounding. In machine learning, use binary formats for internal processing and checkpointing. Use text formats when sharing data with other tools or when human inspection is important.

Q94. How do you use memory mapping with NumPy?

Memory mapping treats files as if they’re in RAM without loading them entirely. np.memmap creates arrays backed by files on disk, reading portions as needed. This enables working with arrays larger than memory by accessing parts while keeping most on disk. Changes to mapped arrays modify the file directly. In machine learning, memory mapping handles huge datasets that don’t fit in RAM, allowing training on large corpora or high-resolution image collections without loading everything simultaneously. It’s a powerful technique for scaling to big data.

Q95. What are best practices for NumPy file I/O in ML projects?

Best practices include using meaningful filenames with version numbers or timestamps, saving metadata along with arrays describing what they contain, using compressed formats for large arrays to save space, organizing saved files in logical directory structures, and implementing error handling for corrupted files. Save data in reproducible formats with documentation enabling others to load it correctly. For large projects, consider using formats like HDF5 that handle hierarchical data structures. Proper file management ensures reproducibility, prevents data loss, and facilitates collaboration.

🗺️ Follow the 90 Days ML & Deep Learning Roadmap — Learn the Right Way →

Chapter 4: Data Libraries - Pandas

4.1 Series and DataFrames Fundamentals

Q96. What is a Pandas Series and how does it differ from a NumPy array?

A Pandas Series is a one-dimensional labeled array that can hold any data type. The key difference from NumPy arrays is that Series have an index, which gives each element a label. Think of it as a column from a spreadsheet where each row has a name or number. You can access elements both by position and by label, making data manipulation more intuitive. In machine learning, Series are perfect for representing a single feature or target variable across all your samples, and the index helps keep track of which value belongs to which data point.

Q97. What is a DataFrame and why is it central to data analysis?

A DataFrame is a two-dimensional labeled data structure, essentially a table with rows and columns like a spreadsheet or database table. Each column is a Series, and columns can have different data types. DataFrames are central to data analysis because they handle the tabular data that most real-world datasets come in. Your entire training dataset typically lives in a DataFrame where rows are samples and columns are features. DataFrames provide intuitive methods for cleaning, transforming, filtering, and analyzing data, making them the primary tool for the data preprocessing stage of machine learning projects.

Q98. How do you create a DataFrame from different sources?

You can create DataFrames in multiple ways. From a dictionary where keys become column names and values become data, using pd.DataFrame. From a list of lists where each inner list is a row. From NumPy arrays with column names specified separately. Most commonly, you load DataFrames from files using pd.read_csv for CSV files, pd.read_excel for Excel files, or pd.read_sql for database queries. You can also create DataFrames from JSON, HTML tables, and many other formats. Understanding these creation methods ensures you can bring data into Pandas regardless of its original format.

Q99. What are DataFrame attributes like shape, columns, and index?

DataFrame attributes provide essential information about your data structure. The shape attribute returns a tuple of rows and columns, helping you understand dataset size. The columns attribute lists all column names, useful for verifying features loaded correctly. The index attribute shows row labels, which might be numbers, dates, or custom identifiers. The dtypes attribute shows data types for each column, helping identify which columns need type conversion. The info method provides a comprehensive summary including memory usage. These attributes are your first check when loading data to verify it matches expectations.

Q100. How do you access columns in a DataFrame?

You can access columns using several methods. The simplest is bracket notation with the column name, like df bracket quote age quote. For column names without spaces, you can use dot notation like df.age. To select multiple columns, pass a list of names like df bracket bracket quote age quote comma quote salary quote bracket bracket. This returns a new DataFrame with just those columns. Understanding these access patterns is fundamental for feature selection in machine learning where you often work with subsets of columns.

4.2 Reading Data from Various Sources

Q101. What parameters should you know when using read_csv?

The read_csv function has many useful parameters. The filepath is required, but others control how data is interpreted. The sep parameter specifies the delimiter, defaulting to comma but accepting others like tabs or semicolons. The header parameter indicates which row contains column names, or None if there isn’t one. The names parameter lets you provide custom column names. The usecols parameter selects specific columns to load, saving memory. The dtype parameter specifies data types for columns, preventing incorrect inferences. Understanding these parameters ensures correct data loading and prevents common errors.

Q102. How do you handle missing values when loading data?

Pandas provides parameters to handle missing values during loading. The na_values parameter specifies which values should be treated as missing, like empty strings or specific codes like 999. By default, Pandas recognizes common missing indicators like NA, NaN, and null. The keep_default_na parameter controls whether to use default missing values. After loading, you can use the isna method to check for missing values and fillna or dropna to handle them. Proper missing value handling during loading prevents issues later in your machine learning pipeline.

Q103. What is the difference between read_csv and read_table?

Both functions read delimited text files, but read_csv defaults to comma delimiters while read_table defaults to tabs. Functionally, they’re nearly identical, and you can make them behave the same by setting the sep parameter. read_csv is more commonly used since CSV files are standard for data distribution. read_table is useful for tab-delimited files common in scientific data. In practice, most people use read_csv for all delimited files and just adjust the sep parameter as needed.

Q104. How do you read data from Excel files?

Pandas provides pd.read_excel for Excel files, which works similarly to read_csv but with additional Excel-specific parameters. You specify the file path and optionally the sheet_name parameter to select which sheet to read, using the sheet name or position number. You can read multiple sheets at once by passing a list of names or None to read all sheets, which returns a dictionary of DataFrames. Excel files can have complex formatting, so parameters like skiprows and usecols help extract just the data you need from elaborately formatted spreadsheets.

Q105. What is chunking and when do you need it?

Chunking reads large files in smaller pieces rather than loading everything into memory at once. You use the chunksize parameter in read_csv, specifying how many rows per chunk. This returns an iterator that yields DataFrames of that size. Process each chunk, perform calculations, then move to the next. Chunking is essential when working with datasets larger than your computer’s memory, which happens frequently in machine learning with large text corpora, log files, or sensor data. It enables processing massive datasets on limited hardware by streaming data through your pipeline.

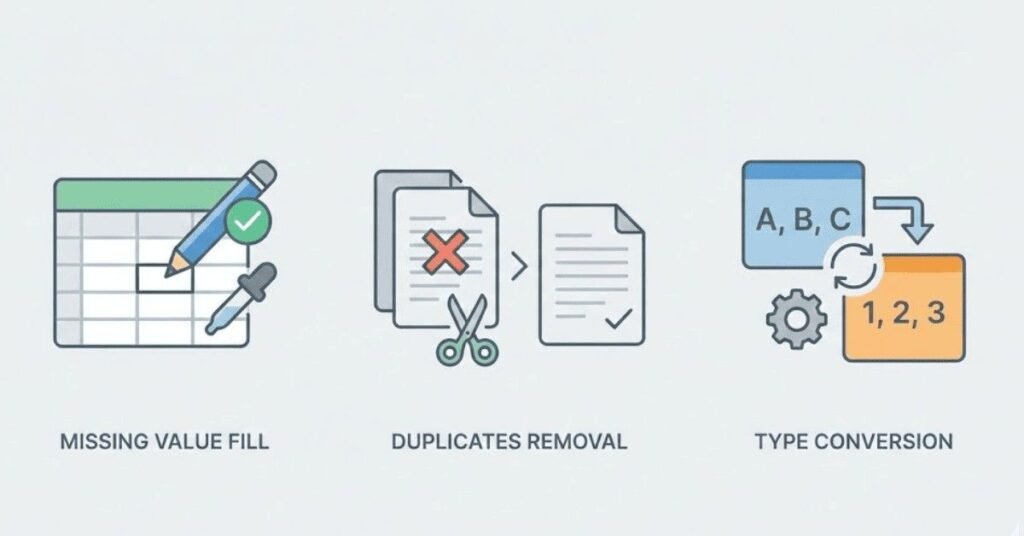

4.3 Data Cleaning Techniques

Q106. How do you identify and handle duplicate rows?

Pandas provides the duplicated method to identify duplicate rows, returning a boolean Series indicating which rows are duplicates. The drop_duplicates method removes duplicates, keeping the first occurrence by default. You can specify which columns to consider using the subset parameter, useful when only certain columns determine uniqueness. The keep parameter controls which duplicate to keep: first, last, or False to remove all duplicates. In machine learning, duplicates can bias models toward repeated examples, so identifying and handling them is crucial for data quality.

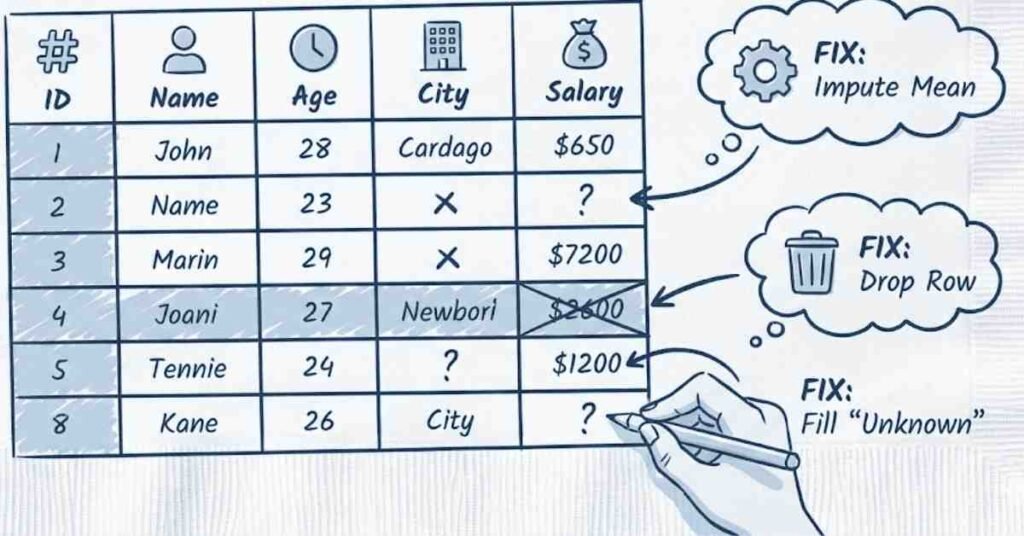

Q107. What are different strategies for handling missing data?

Several strategies exist for missing data. Deletion removes rows with missing values using dropna, simple but loses information. Mean or median imputation replaces missing values with column averages using fillna, preserving sample size but potentially distorting distributions. Forward fill carries the last valid value forward using fillna with method forward fill, useful for time series. Backward fill does the opposite. More sophisticated approaches use machine learning to predict missing values based on other features. The choice depends on how much data is missing, why it’s missing, and your model requirements.

Q108. How do you replace values in a DataFrame?

The replace method substitutes specified values with new ones. You can replace a single value across the entire DataFrame, or use a dictionary mapping old values to new ones. For specific columns, use bracket notation to select them first. The str.replace method handles string replacements within text columns, supporting regular expressions for pattern matching. In machine learning preprocessing, replace corrects data entry errors, standardizes category names, converts codes to meaningful values, or maps categorical values to numbers for modeling.

Q109. What is the difference between fillna and interpolate?

Both handle missing values but differently. fillna replaces missing values with specified constants, statistics like mean or median, or values from other rows using forward or backward fill. It’s simple and fast but doesn’t consider relationships between values. interpolate estimates missing values based on surrounding values using mathematical interpolation methods like linear, polynomial, or spline. It’s more sophisticated and works well for time series or ordered data where missing values should follow trends. Choose fillna for simple replacement and interpolate when missing values should reflect patterns in surrounding data.

Q110. How do you handle inconsistent data formats?

Inconsistent formats appear in many forms: dates in different formats, numbers with commas or currency symbols, inconsistent capitalization, or varying category names. The str accessor provides string methods for cleaning text columns like str.lower for consistent case, str.strip to remove whitespace, and str.replace for pattern replacement. The pd.to_datetime function converts various date formats to datetime objects, with the format parameter specifying the expected pattern. For numbers, str.replace removes unwanted characters before converting to numeric type. Systematic cleaning ensures your features are interpretable by machine learning algorithms.

4.4 Data Wrangling Strategies

Q111. What is the melt function and when do you use it?

The melt function transforms wide-format data into long format, also called unpivoting. Wide format has many columns representing different variables or time periods. Long format has fewer columns with one row per observation. You specify id_vars for columns to keep and value_vars for columns to melt. This creates a new DataFrame with identifiers, a variable column naming which original column the value came from, and a value column. Melting is useful when algorithms expect long format or when you need to plot or analyze data where current columns should be values of a variable.

Q112. How does the pivot function work?

Pivot does the opposite of melt, transforming long-format data into wide format. You specify which column’s values become the new index, which column’s values become new columns, and which column’s values fill the resulting table. This reshapes data from one row per observation to one row per entity with observations as columns. In machine learning, pivot creates feature matrices from transactional data, converts time series from long to wide format, or restructures datasets for specific algorithm requirements.

Q113. What is the groupby operation?

The groupby operation splits data into groups based on column values, applies a function to each group, and combines results. It follows the split-apply-combine pattern. After grouping with df.groupby, you call aggregation functions like sum, mean, or count. For example, grouping sales data by region then calculating average sales per region. You can group by multiple columns, creating hierarchical groups. In machine learning, groupby creates aggregate features, computes group-level statistics, analyzes model performance by segments, or prepares data for certain algorithms.

Q114. How do you merge and join DataFrames?

Merging combines DataFrames based on common columns or indices, similar to SQL joins. The merge function takes two DataFrames and specifies how to join them. Inner join keeps only rows with matches in both DataFrames. Left join keeps all rows from the left DataFrame. Right join keeps all from the right. Outer join keeps all rows from both. You specify which columns to join on using the on parameter. When column names differ, use left_on and right_on. In machine learning, merging combines features from different sources, adds target variables to feature data, or enriches datasets with external information.

Q115. What is the concat function used for?

Concat stacks DataFrames together vertically or horizontally. Vertical concatenation adds rows from one DataFrame below another, useful for combining datasets with the same columns. Horizontal concatenation adds columns side by side, useful for adding new features. The axis parameter controls direction: zero for vertical, one for horizontal. The ignore_index parameter resets the index in the result. Unlike merge, concat doesn’t require common columns; it simply stacks data. In machine learning, concat combines training batches, adds engineered features, or assembles final datasets from multiple preprocessing steps.

4.5 Data Selection and Filtering

Q116. What is the difference between loc and iloc?

Both select subsets of DataFrames but use different indexing. loc uses label-based indexing, selecting rows and columns by their names. You write df.loc bracket row_label comma column_label bracket. iloc uses position-based indexing, selecting by integer positions like NumPy arrays. You write df.iloc bracket row_position comma column_position bracket. loc is more intuitive when you know names, while iloc is useful for positional access. For slicing, loc includes both endpoints while iloc excludes the end. Understanding both is essential for flexible data selection in machine learning preprocessing.

Q117. How do you filter rows based on conditions?

You filter by creating boolean Series from conditions then using them to index DataFrames. Write conditions like df bracket quote age quote greater than 30, which returns a boolean Series. Use this inside brackets like df bracket df bracket quote age quote greater than 30 bracket to get filtered rows. Combine multiple conditions with ampersand for and, vertical bar for or, and tilde for not. Remember to parenthesize each condition. In machine learning, filtering selects training samples meeting criteria, removes outliers, creates subsets for analysis, or separates data by class.

Q118. What is the query method?

The query method filters rows using string expressions, providing cleaner syntax than boolean indexing. You write conditions as strings like df.query quote age greater than 30 and salary less than 100000 quote. This is more readable, especially with complex conditions. You can reference variables from your environment using the at symbol. The query method uses the same operators as Python but in string form. It’s particularly useful when conditions come from user input or configuration files, though boolean indexing is more common in production code.

Q119. How do you select rows by index position?

Use iloc with integer positions or slices. df.iloc bracket five bracket selects the sixth row. df.iloc bracket ten colon twenty bracket selects rows ten through nineteen. Negative indices count from the end. You can pass lists of positions like df.iloc bracket bracket zero comma five comma ten bracket bracket. For both rows and columns, separate with comma like df.iloc bracket zero colon ten comma zero colon five bracket for first ten rows and first five columns. Position-based selection is essential when row order matters or when you need to sample specific positions.

Q120. What are boolean masks and how do you use them?

Boolean masks are Series or arrays of True and False values that select rows where the value is True. Create masks from conditions, then use them to index DataFrames. You can combine masks using logical operators, save masks as variables for reuse, or create complex masks using multiple conditions. For example, create a mask for valid data, another for training set, then combine them to select valid training samples. Masks make complex selection logic clearer and enable reusing selection criteria across different operations.

Chapter 5: Data Visualization with Matplotlib

5.1 Basic Plotting Techniques

Q121. Why is data visualization important in machine learning?

Data visualization helps you understand your data before modeling, identify patterns and relationships, detect outliers and anomalies, and communicate results effectively. Visualizations reveal distributions, correlations, and trends that numbers alone don’t show. Before training models, plots help you decide on preprocessing steps and feature engineering. During training, visualizations track learning progress and diagnose problems. After training, plots communicate model behavior and predictions to stakeholders. Good visualization skills make you a more effective machine learning practitioner by enabling data-driven decisions.

Q122. How do you create a basic line plot with Matplotlib?

Start by importing Matplotlib with import matplotlib.pyplot as plt. Create a figure and axes with fig comma ax equals plt.subplots. Plot data with ax.plot passing x and y values. Add labels with ax.set_xlabel and ax.set_ylabel. Add a title with ax.set_title. Display the plot with plt.show. You can customize line color, style, and width using parameters. Multiple lines on one plot help compare different models or features. In machine learning, line plots visualize training curves, showing how loss decreases over epochs.

Q123. What are scatter plots and when do you use them?

Scatter plots display individual data points as dots in two-dimensional space, with x and y coordinates. Create them with ax.scatter passing x and y arrays. They reveal relationships between two variables, showing correlation, clustering, or patterns. In machine learning, scatter plots visualize feature relationships, show actual versus predicted values to assess model accuracy, display dimensionality reduction results like PCA, or identify outliers as points far from clusters. Color and size parameters add additional dimensions, encoding class labels or confidence scores.

Q124. How do you create bar charts and histograms?

Bar charts display categorical data with rectangular bars. Use ax.bar passing categories and heights. They compare quantities across categories like model accuracy for different algorithms. Histograms show distribution of continuous data by binning values and counting frequency. Use ax.hist passing data and number of bins. They reveal data distribution shape, helping you assess normality, identify skewness, or detect multiple modes. In machine learning, histograms visualize feature distributions, understand class imbalance, examine prediction distributions, or analyze residual distributions to validate model assumptions.

Q125. What is the purpose of the plot title and axis labels?