Database Admin Interview Preparation Guide


1. 245+ Technical Interview Questions & Answers

- Database Interview questions (30 Questions)

- SQL Basics and Advanced Queries (40 Questions)

- Database Performance Optimization (35 Questions)

- Backup and Recovery (30 Questions)

- Security and User Management (25 Questions)

- Platform-Specific Questions (40 Questions)


Section 1: Understanding Database Management Systems (30 Questions)

Q1: What is a Database Management System, and why do we need it?

Think of a DBMS as a smart filing cabinet for your computer. Instead of throwing papers everywhere, you organize them properly so you can find what you need quickly. A DBMS helps store, manage, and retrieve data efficiently. Without it, every application would need to create its own way to save data, leading to chaos and inconsistency.

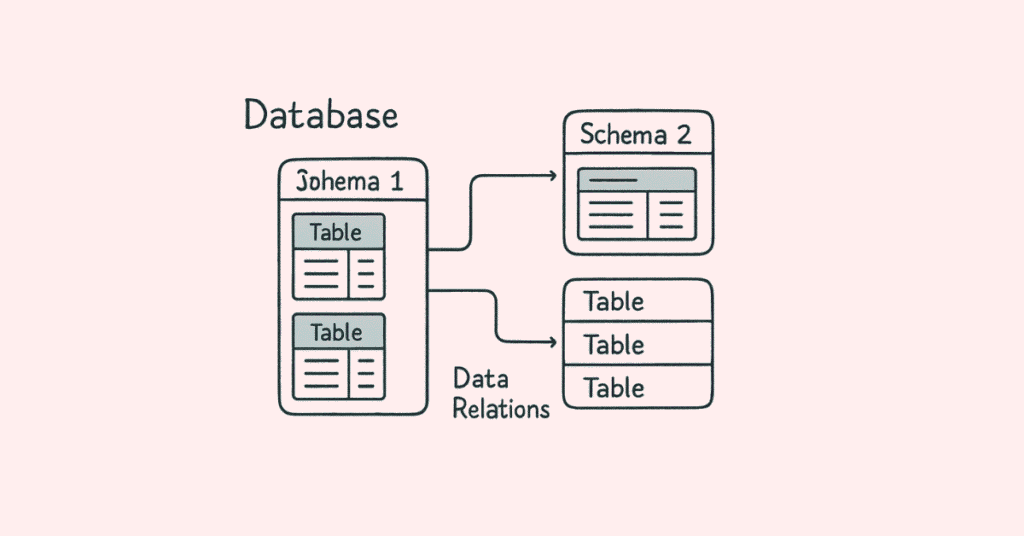

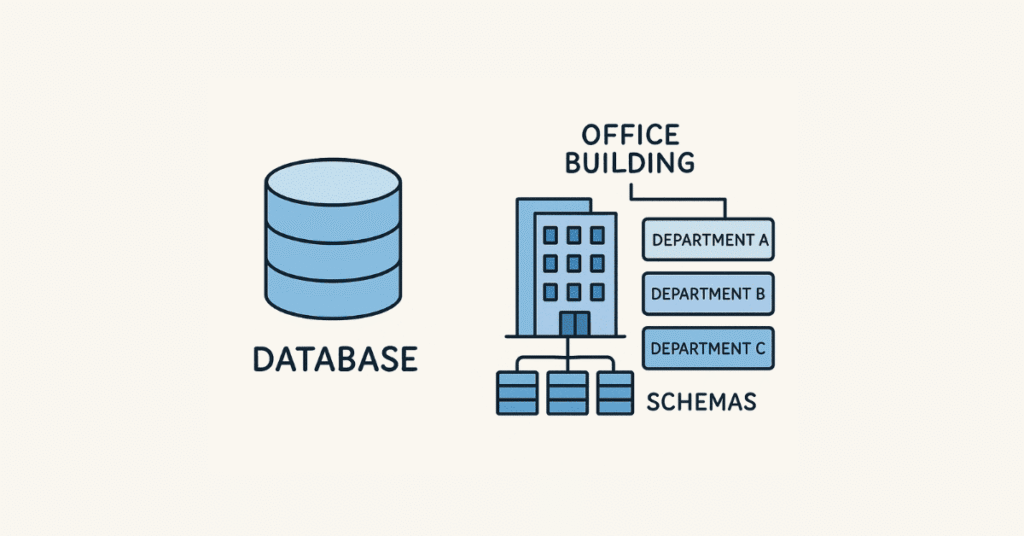

Q2: What’s the difference between a database and a schema?

A database is like an entire office building, while a schema is like one department inside that building. The database holds everything, but schemas help organize related tables and objects together. For example, in a hospital database, you might have one schema for patient records and another for billing information. (In MySQL the two terms are synonyms: CREATE SCHEMA is the same as CREATE DATABASE.)

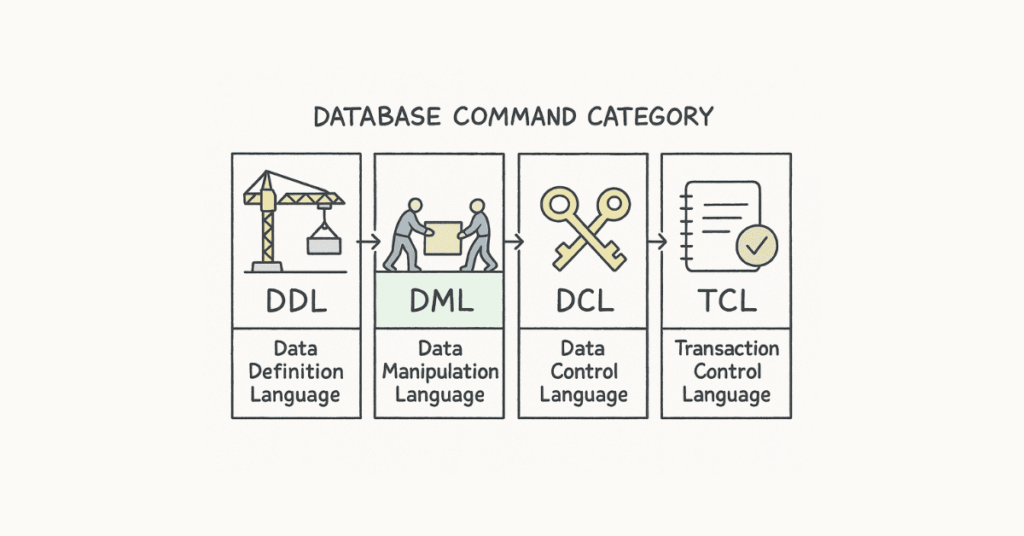

Q3: Explain the difference between DDL, DML, DCL, and TCL commands.

These are different categories of SQL commands:

- DDL (Data Definition Language) builds the structure – CREATE, ALTER, DROP. Think of it as constructing the building.

- DML (Data Manipulation Language) works with actual data – SELECT, INSERT, UPDATE, DELETE. This is like moving furniture inside.

- DCL (Data Control Language) manages permissions – GRANT, REVOKE. Like giving keys to specific people.

- TCL (Transaction Control Language) handles transactions – COMMIT, ROLLBACK, SAVEPOINT. Similar to having save points in a video game.

Q4: What are ACID properties in databases?

ACID ensures your database transactions are reliable:

- Atomicity: All or nothing. If you transfer money between accounts, either both the debit and credit happen, or neither does.

- Consistency: Data always follows the rules you set. No negative bank balances if your rules forbid it.

- Isolation: Multiple transactions don’t interfere with each other. Two people booking the last concert ticket won’t both succeed.

- Durability: Once confirmed, data stays saved even if the system crashes immediately after.
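Here is a minimal sketch of atomicity. It uses Python's built-in sqlite3 module with an in-memory SQLite database as a stand-in engine; the accounts table, its CHECK rule, and the transfer() helper are hypothetical. The credit deliberately runs before the debit. When the debit then breaks the no-negative-balance rule, you can see the already-applied credit roll back with it.

```python
import sqlite3

# In-memory SQLite database as a stand-in engine; names and data are illustrative.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER PRIMARY KEY, owner TEXT, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?, ?)", [(1, "alice", 100), (2, "bob", 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move money as one atomic unit: both updates happen, or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            # Credit first, so a failing debit shows the credit being undone too.
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected the debit; the whole transaction was rolled back.
        return False


def balances(conn):
    return dict(conn.execute("SELECT owner, balance FROM accounts ORDER BY id"))


print(transfer(conn, 1, 2, 30), balances(conn))   # True {'alice': 70, 'bob': 80}
print(transfer(conn, 1, 2, 500), balances(conn))  # False {'alice': 70, 'bob': 80}
```

Other engines follow the same idea with an explicit BEGIN, the two UPDATEs, and COMMIT, issuing ROLLBACK on error. Only the client API differs.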

Q5: What is database normalization and why is it important?

Normalization is organizing your data to avoid redundancy. Imagine writing your address on every form in your house versus keeping it in one place and referring to it. Normalized databases save space, prevent inconsistencies, and make updates easier. If your phone number changes, you update it once, not in fifty different places.

Q6: Explain the different normal forms (1NF, 2NF, 3NF, BCNF).

Each normal form removes specific types of redundancy:

- 1NF: Each column has only one value. No storing “John, Mary, Bob” in a single cell.

- 2NF: No partial dependencies. Information depends on the whole primary key, not just part of it.

- 3NF: No transitive dependencies. One non-key column shouldn’t depend on another non-key column.

- BCNF: A stricter version of 3NF where every determinant must be a candidate key.

Q7: When would you denormalize a database?

Sometimes breaking normalization rules improves performance. In a reporting database where you read data constantly but rarely update it, storing some repeated information speeds up queries. It’s like keeping a copy of frequently used documents on your desk instead of walking to the filing cabinet every time.

Q8: What is a primary key?

A primary key uniquely identifies each row in a table, like your social security number identifies you. It cannot be null and must be unique. Every table should have one to maintain data integrity and establish relationships with other tables.

Q9: What’s the difference between a primary key and a unique key?

Both enforce uniqueness, but they differ in a few ways. A table can have only one primary key but multiple unique keys. Primary key columns cannot be NULL, while unique key columns can. SQL Server allows only one NULL in a unique column, whereas PostgreSQL, MySQL, and Oracle allow many. Both are backed by an index automatically, and the primary key is the default choice for establishing relationships.

Q10: Explain foreign keys and their purpose.

A foreign key creates relationships between tables. If you have a customers table and an orders table, the customer ID in the orders table is a foreign key pointing to the customers table. It ensures referential integrity – you can’t create an order for a customer that doesn’t exist.

Q11: What are composite keys?

A composite key uses multiple columns together to uniquely identify a row. In a class enrollment system, you might use student ID and course ID together, since a student can take multiple courses and each course has multiple students.

Q12: What is a candidate key?

Any column or combination of columns that could serve as a primary key is a candidate key. In an employee table, both employee ID and email address might uniquely identify employees, making both candidate keys. You choose one as the primary key.

Q13: Explain the concept of a surrogate key.

A surrogate key is an artificial identifier you create, usually an auto-incrementing number, instead of using natural data. Employee ID 12345 is a surrogate key, while using someone’s social security number would be a natural key. Surrogate keys are simpler and more stable than natural keys.

Q14: What is referential integrity?

Referential integrity ensures relationships between tables remain consistent. If a customer record is deleted, what happens to their orders? Referential integrity rules define this behavior – maybe delete the orders too, or prevent the customer deletion, or set order references to null.

Q15: Explain CASCADE, SET NULL, and RESTRICT in foreign key constraints.

These define what happens when you delete or update referenced data:

- CASCADE: Automatically delete or update child records. Delete a customer, their orders vanish too.

- SET NULL: Child records remain but their reference becomes null. The order stays but loses its customer link.

- RESTRICT: Prevents deletion if child records exist. You must delete orders before deleting the customer.
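The three actions can be compared side by side in a small sketch. It uses Python's sqlite3 module as a stand-in engine with hypothetical customers and orders tables. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON` is set on the connection.

```python
import sqlite3

# SQLite stand-in; the schema and rows below are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders_cascade  (id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers(id) ON DELETE CASCADE);
CREATE TABLE orders_set_null (id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers(id) ON DELETE SET NULL);
CREATE TABLE orders_restrict (id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers(id) ON DELETE RESTRICT);
INSERT INTO customers VALUES (1, 'Ann'), (2, 'Ben');
INSERT INTO orders_cascade  VALUES (10, 1);
INSERT INTO orders_set_null VALUES (20, 1);
INSERT INTO orders_restrict VALUES (30, 2);
""")

# Deleting Ann: her CASCADE order disappears, her SET NULL order loses its link.
conn.execute("DELETE FROM customers WHERE id = 1")
print(conn.execute("SELECT * FROM orders_cascade").fetchall())   # []
print(conn.execute("SELECT * FROM orders_set_null").fetchall())  # [(20, None)]

# Deleting Ben fails: a RESTRICT order still references him.
try:
    conn.execute("DELETE FROM customers WHERE id = 2")
    restrict_blocked = False
except sqlite3.IntegrityError:
    restrict_blocked = True
print(restrict_blocked)  # True
```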

Q16: What are database constraints?

Constraints are rules that protect data quality. They include NOT NULL (must have a value), UNIQUE (no duplicates), CHECK (must meet a condition), PRIMARY KEY, and FOREIGN KEY. They’re like guardrails preventing bad data from entering your database.

Q17: What is a CHECK constraint?

CHECK constraints validate data against specific conditions. For example, ensuring age is positive, salary isn’t below minimum wage, or email contains an @ symbol. They catch errors before bad data enters the database.

Q18: Explain the difference between DELETE and TRUNCATE.

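DELETE removes rows one at a time and can use a WHERE clause to be selective. Each deleted row is logged, triggers fire, and the operation can be rolled back. TRUNCATE removes all rows at once by deallocating data pages, so it logs far less and doesn't fire DELETE triggers. In SQL Server and MySQL it also resets the identity/auto-increment counter. Whether it can be rolled back depends on the platform. In MySQL and Oracle it is DDL and commits implicitly. In SQL Server and PostgreSQL it can be rolled back inside an explicit transaction. Use DELETE when you need control, TRUNCATE for speed when clearing entire tables.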

Q19: What’s the difference between CHAR and VARCHAR?

CHAR stores fixed-length strings, padding shorter values with spaces. VARCHAR stores variable-length strings, using only the space needed plus a small length prefix. CHAR(10) always occupies 10 characters whether you store “Hi” or “Hello”, while VARCHAR(10) stores only as many characters as the value contains. Use CHAR for consistently sized data like state codes, VARCHAR for variable content like names.

Q20: Explain different data types in SQL.

Common types include:

- Numeric: INT, BIGINT, DECIMAL, FLOAT for numbers

- Character: CHAR, VARCHAR, TEXT for text

- Date/Time: DATE, TIME, DATETIME, TIMESTAMP

- Binary: BLOB for files and images

- Boolean: TRUE/FALSE values (BOOLEAN in PostgreSQL; SQL Server uses BIT, and MySQL maps BOOLEAN to TINYINT(1))

Each database system has variations and additional types.

Q21: What is a NULL value, and how is it different from zero or empty string?

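NULL means “unknown” or “not applicable” – it’s the absence of a value. Zero is a specific number, and an empty string is a string with no characters (though Oracle stores an empty string as NULL). NULL comparisons work differently. Any comparison with NULL, even NULL = NULL, evaluates to UNKNOWN, so you can’t use “= NULL”; you must use “IS NULL”. Arithmetic with NULL also gives NULL, so 5 + NULL is NULL.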

Q22: How do you handle NULL values in queries?

Use IS NULL or IS NOT NULL to check for nulls. COALESCE (standard SQL, supported by all major databases) returns its first non-null argument. Platform-specific equivalents include IFNULL (MySQL), ISNULL (SQL Server), and NVL (Oracle). In calculations, decide whether to exclude nulls or treat them as zero. Always consider how nulls affect your logic.
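The following sketch shows these NULL rules in action. It uses Python's sqlite3 module as a stand-in engine; the employees table and its rows are made up for illustration.

```python
import sqlite3

# SQLite stand-in; the employees rows are illustrative.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, manager_id INTEGER, bonus INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Ava", None, 500), ("Raj", 1, None), ("Li", 1, 200)],
)

# '= NULL' evaluates to UNKNOWN for every row, so nothing matches.
eq_null = conn.execute("SELECT name FROM employees WHERE manager_id = NULL").fetchall()
# 'IS NULL' is the correct test.
is_null = conn.execute("SELECT name FROM employees WHERE manager_id IS NULL").fetchall()
# Arithmetic with NULL yields NULL; COALESCE substitutes a default first.
totals = conn.execute(
    "SELECT name, bonus + 100, COALESCE(bonus, 0) + 100 FROM employees ORDER BY name"
).fetchall()

print(eq_null)  # []
print(is_null)  # [('Ava',)]
print(totals)   # [('Ava', 600, 600), ('Li', 300, 300), ('Raj', None, 100)]
```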

Q23: What is a view in a database?

A view is a saved query that acts like a virtual table. It doesn’t store data itself but displays results from underlying tables. Views simplify complex queries, enhance security by limiting what users see, and present data in specific ways without duplicating storage.

Q24: What’s the difference between a view and a materialized view?

A regular view recalculates results every time you query it. A materialized view stores the results physically, refreshing periodically. Materialized views are faster for complex calculations but require storage and refresh time. Use regular views for current data, materialized views for reporting on relatively stable data.

Q25: What is a stored procedure?

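A stored procedure is a named block of SQL code saved in the database that you can execute repeatedly; most engines compile it and cache its execution plan for reuse. Think of it as a recipe – instead of giving separate cooking instructions each time, you save the complete recipe and follow it whenever needed. Stored procedures can improve performance (fewer round trips and plan reuse), security (users can run a procedure without direct table access), and code reusability.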

Q26: What’s the difference between stored procedures and functions?

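Stored procedures perform actions and can return multiple outputs, such as output parameters or result sets. Functions return a value and can be used inside queries. That value is a scalar or, in systems that support table-valued functions, a table. Procedures are called on their own (with CALL or EXEC), while functions are used within expressions. Use procedures for complex operations, functions for calculations you need in queries.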

Q27: What are triggers?

Triggers are automatic actions that execute when specific events occur – like inserting, updating, or deleting data. Think of them as automated responses. When a new order is placed, a trigger might automatically update inventory. They enforce business rules and maintain audit trails.

Q28: Explain BEFORE and AFTER triggers.

BEFORE triggers execute before the data change, allowing you to validate or modify data before it’s saved. AFTER triggers execute after the change completes, useful for logging or cascading updates. Choose BEFORE to prevent invalid data, AFTER to respond to changes.
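Here is a minimal sketch of both trigger kinds, using Python's sqlite3 module as a stand-in engine. The products/orders tables and the trigger names check_stock and reduce_stock are hypothetical. The BEFORE trigger blocks an order that exceeds stock. The AFTER trigger updates inventory once the order row has been saved.

```python
import sqlite3

# SQLite stand-in; tables and trigger names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (id INTEGER PRIMARY KEY, stock INTEGER NOT NULL);
CREATE TABLE orders (id INTEGER PRIMARY KEY, product_id INTEGER, qty INTEGER);

-- BEFORE trigger: reject an order for more units than are in stock.
CREATE TRIGGER check_stock BEFORE INSERT ON orders
BEGIN
    SELECT RAISE(ABORT, 'insufficient stock')
    WHERE NEW.qty > (SELECT stock FROM products WHERE id = NEW.product_id);
END;

-- AFTER trigger: once the order row is saved, decrement inventory.
CREATE TRIGGER reduce_stock AFTER INSERT ON orders
BEGIN
    UPDATE products SET stock = stock - NEW.qty WHERE id = NEW.product_id;
END;

INSERT INTO products VALUES (1, 10);
""")

conn.execute("INSERT INTO orders (product_id, qty) VALUES (1, 4)")  # accepted

rejected_msg = None
try:
    conn.execute("INSERT INTO orders (product_id, qty) VALUES (1, 50)")  # too many
except sqlite3.IntegrityError as exc:  # RAISE(ABORT, ...) surfaces as a constraint error
    rejected_msg = str(exc)

stock = conn.execute("SELECT stock FROM products WHERE id = 1").fetchone()[0]
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(rejected_msg, stock, order_count)  # insufficient stock 6 1
```

Because the BEFORE trigger aborts the second insert, the row is never written and the AFTER trigger never runs for it.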

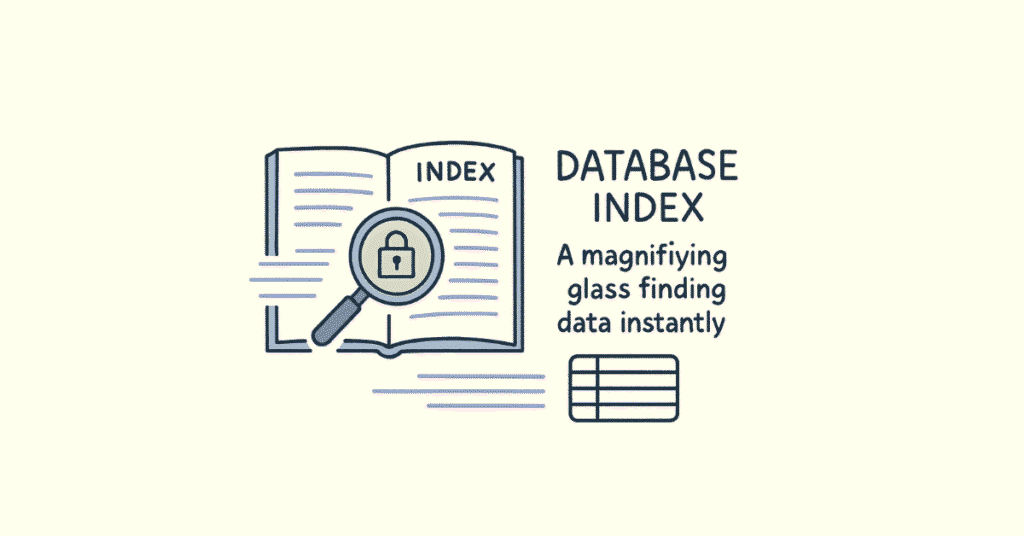

Q29: What is an index, and why is it important?

An index is like a book’s index – it helps find information quickly without reading everything. Database indexes speed up data retrieval but slow down inserts and updates since the index must be maintained. Proper indexing dramatically improves query performance.

Q30: What are the different types of relationships in databases?

Three main types exist:

- One-to-One: Each record in Table A relates to one record in Table B. Like a person and their passport.

- One-to-Many: One record in Table A relates to multiple records in Table B. Like a customer with multiple orders.

- Many-to-Many: Multiple records in both tables relate to each other. Like students and courses – students take multiple courses, courses have multiple students.


Section 2: SQL Basics and Advanced Queries (40 Questions)

Q31: Write a basic SELECT query to retrieve all columns from a table.

```sql
SELECT * FROM employees;
```

The asterisk means “all columns.” However, in production, specify exact columns you need for better performance and clarity:

```sql
SELECT employee_id, first_name, last_name, salary FROM employees;
```

Q32: How do you select distinct values from a column?

```sql
SELECT DISTINCT department FROM employees;
```

This removes duplicates, showing each department only once. Useful when you want to know what unique values exist in a column.

Q33: How do you filter results using WHERE clause?

```sql
SELECT * FROM employees WHERE salary > 50000;
```

WHERE filters rows based on conditions. You can combine multiple conditions:

```sql
SELECT * FROM employees WHERE salary > 50000 AND department = 'IT';
```

Q34: What’s the difference between WHERE and HAVING?

WHERE filters rows before grouping occurs, HAVING filters after grouping. Use WHERE for individual row conditions, HAVING for aggregate conditions:

```sql
SELECT department, AVG(salary) FROM employees
GROUP BY department
HAVING AVG(salary) > 60000;
```

Q35: Explain the ORDER BY clause.

ORDER BY sorts results. Ascending (ASC) is the default; add DESC to sort from highest to lowest:

```sql
SELECT * FROM employees ORDER BY salary DESC;
```

You can sort by multiple columns:

```sql
SELECT * FROM employees ORDER BY department ASC, salary DESC;
```

Q36: How do you limit the number of rows returned?

Different databases use different syntax:

MySQL/PostgreSQL:

```sql
SELECT * FROM employees LIMIT 10;
```

SQL Server:

```sql
SELECT TOP 10 * FROM employees;
```

Oracle (classic syntax; Oracle 12c and later also support the standard FETCH FIRST 10 ROWS ONLY):

```sql
SELECT * FROM employees WHERE ROWNUM <= 10;
```

Q37: What are wildcards in SQL, and how do you use them?

Wildcards help match patterns:

- % matches any sequence of characters

- _ matches single character

```sql
SELECT * FROM employees WHERE first_name LIKE 'J%';
```

Finds names starting with J.

```sql
SELECT * FROM employees WHERE first_name LIKE '_ohn';
```

Finds John, Kohn, etc.

Q38: Explain the IN operator.

IN checks if a value matches any in a list:

```sql
SELECT * FROM employees WHERE department IN ('IT', 'HR', 'Sales');
```

It’s cleaner than multiple OR conditions.

Q39: What is the BETWEEN operator?

BETWEEN checks if a value falls within a range, inclusive of boundaries:

```sql
SELECT * FROM employees WHERE salary BETWEEN 40000 AND 60000;
```

Equivalent to:

```sql
SELECT * FROM employees WHERE salary >= 40000 AND salary <= 60000;
```

Q40: How do you handle NULL values in WHERE clauses?

Use IS NULL or IS NOT NULL:

```sql
SELECT * FROM employees WHERE manager_id IS NULL;
```

Never use = NULL or != NULL. Any comparison with NULL evaluates to UNKNOWN, so those conditions match no rows.

JOIN Operations and Types

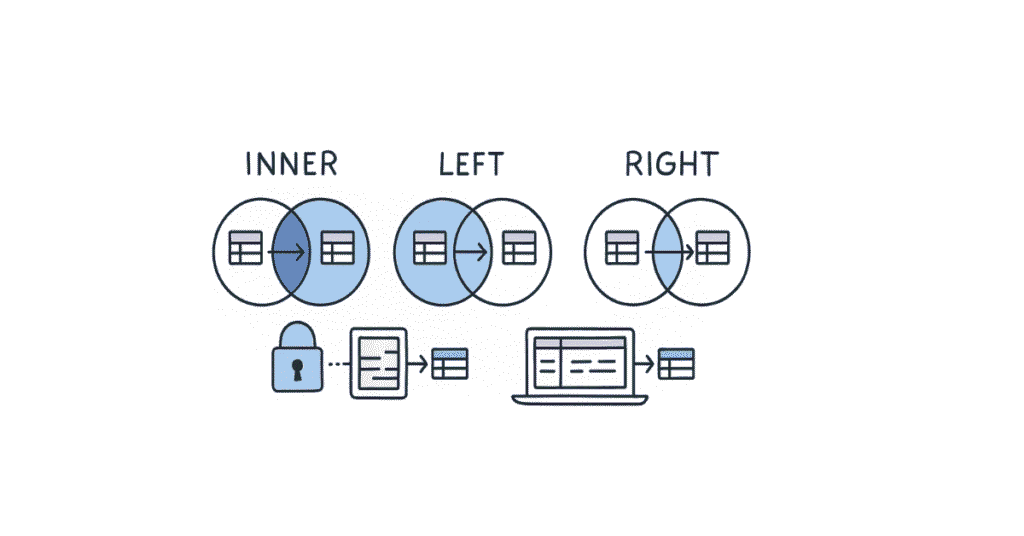

Q41: Explain INNER JOIN with an example.

INNER JOIN returns only matching rows from both tables:

```sql
SELECT e.first_name, d.department_name
FROM employees e
INNER JOIN departments d ON e.department_id = d.department_id;
```

If an employee has no department or a department has no employees, those won’t appear in results.

Q42: What is a LEFT JOIN (LEFT OUTER JOIN)?

LEFT JOIN returns all rows from the left table and matching rows from the right. Non-matches get NULL values:

```sql
SELECT e.first_name, d.department_name
FROM employees e
LEFT JOIN departments d ON e.department_id = d.department_id;
```

Shows all employees, even those without departments.

Q43: Explain RIGHT JOIN.

RIGHT JOIN is the opposite of LEFT JOIN – all rows from the right table and matches from left:

```sql
SELECT e.first_name, d.department_name
FROM employees e
RIGHT JOIN departments d ON e.department_id = d.department_id;
```

Shows all departments, even those with no employees.

Q44: What is a FULL OUTER JOIN?

FULL OUTER JOIN returns all rows from both tables, with NULLs where there’s no match:

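```sql
SELECT e.first_name, d.department_name
FROM employees e
FULL OUTER JOIN departments d ON e.department_id = d.department_id;
```

Shows all employees and all departments, matching where possible. MySQL doesn’t support FULL OUTER JOIN; emulate it by combining a LEFT JOIN and a RIGHT JOIN with UNION.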

Q45: Explain CROSS JOIN.

CROSS JOIN creates a Cartesian product – every row from the first table paired with every row from the second:

```sql
SELECT * FROM colors CROSS JOIN sizes;
```

If you have 5 colors and 3 sizes, you get 15 combinations. Useful for generating combinations but use carefully as results grow quickly.

Q46: What is a SELF JOIN?

A table joined with itself, useful for hierarchical data:

```sql
SELECT e.first_name AS employee, m.first_name AS manager
FROM employees e
LEFT JOIN employees m ON e.manager_id = m.employee_id;
```

Shows each employee with their manager’s name.

Q47: How do you join more than two tables?

Chain multiple JOINs:

```sql
SELECT e.first_name, d.department_name, l.city
FROM employees e
JOIN departments d ON e.department_id = d.department_id
JOIN locations l ON d.location_id = l.location_id;
```

Each JOIN builds on previous results.

Q48: What’s the difference between JOIN and UNION?

JOIN combines columns from multiple tables horizontally. UNION stacks rows from multiple queries vertically:

```sql
SELECT first_name FROM employees
UNION
SELECT first_name FROM contractors;
```

UNION removes duplicates; UNION ALL keeps them.

Q49: Explain the concept of JOIN conditions vs WHERE conditions.

JOIN conditions define how tables relate. WHERE conditions filter the combined results:

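```sql
SELECT e.first_name, d.department_name
FROM employees e
JOIN departments d ON e.department_id = d.department_id
WHERE e.salary > 50000;
```

Logically, the join happens first and WHERE then filters the joined rows. With an INNER JOIN, moving e.salary > 50000 into the ON clause gives the same result. With an outer join it doesn’t. A WHERE condition on the optional (NULL-extended) side discards the unmatched rows, which effectively turns a LEFT JOIN into an INNER JOIN.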
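The next sketch shows why filter placement matters once an outer join is involved. It uses Python's sqlite3 module as a stand-in engine with made-up departments and employees rows. The same salary filter is placed once in ON and once in WHERE.

```python
import sqlite3

# SQLite stand-in; rows are illustrative.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE departments (department_id INTEGER PRIMARY KEY, department_name TEXT);
CREATE TABLE employees (first_name TEXT, department_id INTEGER, salary INTEGER);
INSERT INTO departments VALUES (1, 'IT'), (2, 'HR'), (3, 'Legal');
INSERT INTO employees VALUES ('Ana', 1, 70000), ('Bo', 1, 40000), ('Cy', 2, 45000);
""")

# Filter in ON: every department survives; only high earners get attached.
in_on = conn.execute("""
    SELECT d.department_name, e.first_name
    FROM departments d
    LEFT JOIN employees e
      ON e.department_id = d.department_id AND e.salary > 50000
    ORDER BY d.department_id
""").fetchall()

# Filter in WHERE: unmatched rows have salary NULL, fail the test, and vanish.
in_where = conn.execute("""
    SELECT d.department_name, e.first_name
    FROM departments d
    LEFT JOIN employees e ON e.department_id = d.department_id
    WHERE e.salary > 50000
    ORDER BY d.department_id
""").fetchall()

print(in_on)     # [('IT', 'Ana'), ('HR', None), ('Legal', None)]
print(in_where)  # [('IT', 'Ana')]
```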

Q50: What happens if you don’t specify a JOIN condition?

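Suppose you list tables separated by commas (FROM employees, departments) and leave the join condition out of WHERE. You get a Cartesian product, the same as a CROSS JOIN: every row from table one paired with every row from table two. This is usually unintentional and creates huge result sets. An explicit JOIN with no ON clause is rejected by PostgreSQL, SQL Server, and Oracle, although MySQL treats it as a cross join.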

Subqueries and Nested Queries

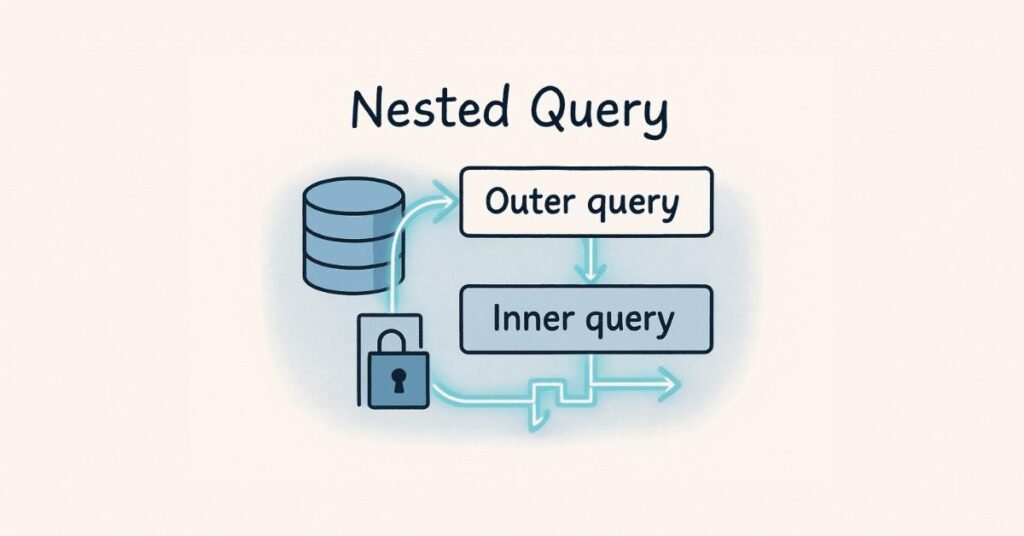

Q51: What is a subquery?

A query within another query. The inner query executes first, and its results feed the outer query:

```sql
SELECT first_name FROM employees
WHERE salary > (SELECT AVG(salary) FROM employees);
```

Finds employees earning above average.

Q52: What’s the difference between correlated and non-correlated subqueries?

Non-correlated subqueries run once independently:

```sql
SELECT * FROM employees WHERE department_id = (SELECT department_id FROM departments WHERE name = 'IT');
```

Correlated subqueries reference the outer query and run for each row:

```sql
SELECT first_name, salary FROM employees e1
WHERE salary > (SELECT AVG(salary) FROM employees e2 WHERE e2.department_id = e1.department_id);
```

Q53: How do you use subqueries in the FROM clause?

Treat the subquery result as a temporary table:

```sql
SELECT department_id, avg_sal
FROM (
    SELECT department_id, AVG(salary) AS avg_sal
    FROM employees
    GROUP BY department_id
) dept_avgs
WHERE avg_sal > 60000;
```

This creates an inline view.

Q54: Explain the EXISTS operator.

EXISTS checks if a subquery returns any rows:

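```sql
SELECT first_name FROM employees e
WHERE EXISTS (SELECT 1 FROM orders o WHERE o.employee_id = e.employee_id);
```

Shows employees who have at least one order. EXISTS can stop at the first matching row, which can make it faster than IN when the subquery returns many rows. That said, modern optimizers often generate the same plan for both.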

Q55: What’s the difference between IN and EXISTS?

IN compares actual values:

```sql
SELECT * FROM employees
WHERE department_id IN (SELECT department_id FROM departments WHERE location = 'New York');
```

EXISTS checks for existence:

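```sql
SELECT * FROM employees e
WHERE EXISTS (SELECT 1 FROM departments d WHERE d.department_id = e.department_id AND d.location = 'New York');
```

Many optimizers produce the same plan for these two. The difference that matters most is between the negated forms. If the subquery returns even one NULL, NOT IN matches no rows at all, while NOT EXISTS still returns the rows that have no match.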
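The NOT IN trap can be reproduced in a few lines. This sketch uses Python's sqlite3 module as a stand-in engine with hypothetical customers and orders tables, where one order has a NULL customer_id. Both queries ask for customers without orders.

```python
import sqlite3

# SQLite stand-in; one order has a NULL customer_id on purpose.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
INSERT INTO customers VALUES (1, 'Ann'), (2, 'Ben'), (3, 'Cal');
INSERT INTO orders VALUES (100, 1), (101, NULL);
""")

# x NOT IN (1, NULL) is never TRUE: it is FALSE for 1 and UNKNOWN otherwise.
not_in = conn.execute("""
    SELECT name FROM customers
    WHERE customer_id NOT IN (SELECT customer_id FROM orders)
    ORDER BY name
""").fetchall()

# NOT EXISTS only asks "is there a matching row?", so the NULL is harmless.
not_exists = conn.execute("""
    SELECT name FROM customers c
    WHERE NOT EXISTS (SELECT 1 FROM orders o WHERE o.customer_id = c.customer_id)
    ORDER BY name
""").fetchall()

print(not_in)      # []
print(not_exists)  # [('Ben',), ('Cal',)]
```

If you must use NOT IN, adding WHERE customer_id IS NOT NULL inside the subquery restores the expected result.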

Q56: How do you use subqueries in SELECT clause?

```sql
SELECT first_name,
       (SELECT department_name FROM departments d WHERE d.department_id = e.department_id) AS dept_name
FROM employees e;
```

The subquery returns a single value for each row.

Q57: What is the ANY operator?

ANY compares a value to any value returned by the subquery:

```sql
SELECT * FROM employees WHERE salary > ANY (SELECT salary FROM employees WHERE department = 'IT');
```

Returns true if salary exceeds at least one IT salary.

Q58: Explain the ALL operator.

ALL compares against all values returned:

```sql
SELECT * FROM employees WHERE salary > ALL (SELECT salary FROM employees WHERE department = 'IT');
```

Returns true only if salary exceeds every IT salary.

Q59: Can you use subqueries in UPDATE statements?

Yes:

```sql
UPDATE employees
SET salary = salary * 1.1
WHERE department_id = (SELECT department_id FROM departments WHERE name = 'IT');
```

Updates salaries for IT department employees.

Q60: How do you use subqueries in DELETE statements?

```sql
DELETE FROM orders
WHERE customer_id IN (SELECT customer_id FROM customers WHERE status = 'inactive');
```

Deletes orders from inactive customers.

Aggregate Functions and GROUP BY

Q61: What are aggregate functions?

Aggregate functions perform calculations on multiple rows and return a single value:

- COUNT: counts rows

- SUM: adds values

- AVG: calculates average

- MAX: finds maximum

- MIN: finds minimum

```sql
SELECT COUNT(*), AVG(salary), MAX(salary), MIN(salary) FROM employees;
```

Q62: Explain the GROUP BY clause.

GROUP BY groups rows with the same values:

```sql
SELECT department_id, AVG(salary)
FROM employees
GROUP BY department_id;
```

Shows average salary per department.

Q63: What’s the difference between COUNT(*) and COUNT(column_name)?

COUNT(*) counts all rows, including those with NULL values. COUNT(column_name) counts only non-NULL values in that column:

```sql
SELECT COUNT(*), COUNT(email) FROM employees;
```

Q64: How do you count distinct values?

```sql
SELECT COUNT(DISTINCT department_id) FROM employees;
```

Counts unique departments, not total employee count.
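The three COUNT variants can be compared on one small table. This sketch uses Python's sqlite3 module as a stand-in engine; the rows are illustrative, and one employee has no email.

```python
import sqlite3

# SQLite stand-in; Ben has no email on purpose.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, email TEXT, department_id INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Ann", "ann@example.com", 1), ("Ben", None, 1), ("Cal", "cal@example.com", 2)],
)

# COUNT(*) counts rows, COUNT(email) skips NULLs, COUNT(DISTINCT ...) skips repeats.
counts = conn.execute(
    "SELECT COUNT(*), COUNT(email), COUNT(DISTINCT department_id) FROM employees"
).fetchone()
print(counts)  # (3, 2, 2)
```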

Q65: Can you use multiple aggregate functions in one query?

Yes:

```sql
SELECT department_id,
       COUNT(*) AS emp_count,
       AVG(salary) AS avg_salary,
       MAX(salary) AS max_salary
FROM employees
GROUP BY department_id;
```

Q66: Explain GROUP BY with multiple columns.

Groups by combinations of values:

```sql
SELECT department_id, job_title, COUNT(*)
FROM employees
GROUP BY department_id, job_title;
```

Shows employee count for each job title within each department.

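Q67: What’s the order of execution in a query with GROUP BY and HAVING?

This is the logical processing order. The optimizer may physically execute steps differently as long as the result is the same.

- FROM (including JOINs) – identify and combine tables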

- WHERE – filter individual rows

- GROUP BY – group filtered rows

- HAVING – filter groups

- SELECT – select columns

- ORDER BY – sort results

- LIMIT – limit output

Q68: How do you find the second highest salary?

Multiple approaches:

Using LIMIT:

```sql
SELECT DISTINCT salary FROM employees
ORDER BY salary DESC
LIMIT 1 OFFSET 1;
```

Using subquery:

```sql
SELECT MAX(salary) FROM employees
WHERE salary < (SELECT MAX(salary) FROM employees);
```
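This sketch checks that the two approaches agree when the top salary is tied. It also shows why the DISTINCT in the LIMIT version matters. It uses Python's sqlite3 module as a stand-in engine with made-up salaries.

```python
import sqlite3

# SQLite stand-in; two employees share the top salary.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ann", 90000), ("Ben", 90000), ("Cal", 75000), ("Dee", 60000)],
)

with_distinct = conn.execute(
    "SELECT DISTINCT salary FROM employees ORDER BY salary DESC LIMIT 1 OFFSET 1"
).fetchone()[0]
with_subquery = conn.execute(
    "SELECT MAX(salary) FROM employees WHERE salary < (SELECT MAX(salary) FROM employees)"
).fetchone()[0]
# Dropping DISTINCT just skips the first row, which is the tied top salary again.
without_distinct = conn.execute(
    "SELECT salary FROM employees ORDER BY salary DESC LIMIT 1 OFFSET 1"
).fetchone()[0]

print(with_distinct, with_subquery, without_distinct)  # 75000 75000 90000
```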

Q69: How do you find duplicate rows in a table?

```sql
SELECT email, COUNT(*)
FROM employees
GROUP BY email
HAVING COUNT(*) > 1;
```

Shows emails appearing more than once.

Q70: Explain the ROLLUP operator.

ROLLUP creates subtotals and grand totals:

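```sql
SELECT department_id, job_title, SUM(salary)
FROM employees
GROUP BY ROLLUP(department_id, job_title);
```

Returns one row per department and job title, a subtotal row per department (job_title is NULL), and a grand total row (both columns NULL). It does not produce per-job-title totals across departments; CUBE adds those. MySQL writes this as GROUP BY department_id, job_title WITH ROLLUP.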

Window Functions and CTEs

Q71: What are window functions?

Window functions perform calculations across rows related to the current row without collapsing results like GROUP BY:

```sql
SELECT first_name, salary,
       AVG(salary) OVER (PARTITION BY department_id) AS dept_avg_salary
FROM employees;
```

Shows each employee with their department’s average salary.

Q72: Explain the ROW_NUMBER() function.

ROW_NUMBER assigns a unique sequential number to each row:

```sql
SELECT first_name, salary,
       ROW_NUMBER() OVER (ORDER BY salary DESC) AS salary_rank
FROM employees;
```

Numbers employees by salary, highest to lowest.

Q73: What’s the difference between ROW_NUMBER, RANK, and DENSE_RANK?

All assign rankings:

- ROW_NUMBER: Always unique (1,2,3,4,5)

- RANK: Ties get same rank, next rank skips (1,2,2,4,5)

- DENSE_RANK: Ties get same rank, next rank doesn’t skip (1,2,2,3,4)

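```sql
-- rank_num/dense_rank_num avoid RANK and DENSE_RANK, which are reserved words in MySQL 8.0
SELECT salary,
       ROW_NUMBER() OVER (ORDER BY salary DESC) AS row_num,
       RANK()       OVER (ORDER BY salary DESC) AS rank_num,
       DENSE_RANK() OVER (ORDER BY salary DESC) AS dense_rank_num
FROM employees;
```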
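To see the three numbering schemes diverge on a tie, here is a sketch using Python's sqlite3 module as a stand-in engine. It assumes SQLite 3.25 or later, which added window functions, and made-up salaries with two employees tied at 80000.

```python
import sqlite3

# SQLite (3.25+) stand-in; Ben and Cal tie at 80000.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ann", 90000), ("Ben", 80000), ("Cal", 80000), ("Dee", 70000), ("Eve", 60000)],
)

rows = conn.execute("""
    SELECT salary,
           ROW_NUMBER() OVER (ORDER BY salary DESC) AS row_num,
           RANK()       OVER (ORDER BY salary DESC) AS rank_num,
           DENSE_RANK() OVER (ORDER BY salary DESC) AS dense_rank_num
    FROM employees
    ORDER BY salary DESC, row_num
""").fetchall()

for r in rows:
    print(r)
# (90000, 1, 1, 1)
# (80000, 2, 2, 2)
# (80000, 3, 2, 2)
# (70000, 4, 4, 3)
# (60000, 5, 5, 4)
```

Which of the tied employees gets row number 2 versus 3 is arbitrary unless you add a tiebreaker column to the ORDER BY inside OVER.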

Q74: Explain the PARTITION BY clause in window functions.

PARTITION BY divides results into groups for calculations:

```sql
SELECT first_name, department_id, salary,
       RANK() OVER (PARTITION BY department_id ORDER BY salary DESC) AS dept_rank
FROM employees;
```

Ranks employees within their own department.

Q75: What are LEAD and LAG functions?

LEAD accesses the next row’s value, LAG accesses the previous row’s value:

```sql
SELECT order_date, total_amount,
       LAG(total_amount) OVER (ORDER BY order_date) AS previous_amount,
       LEAD(total_amount) OVER (ORDER BY order_date) AS next_amount
FROM orders;
```

Useful for comparing sequential data.

Q76: Explain the NTILE function.

NTILE divides rows into specified number of groups:

```sql
SELECT first_name, salary,
       NTILE(4) OVER (ORDER BY salary) AS salary_quartile
FROM employees;
```

Divides employees into four salary quartiles.

Q77: What is a Common Table Expression (CTE)?

A CTE is a temporary named result set that exists for one query:

```sql
WITH high_earners AS (
    SELECT * FROM employees WHERE salary > 80000
)
SELECT department_id, COUNT(*)
FROM high_earners
GROUP BY department_id;
```

More readable than subqueries for complex queries.

Q78: How do you create a recursive CTE?

Recursive CTEs reference themselves, useful for hierarchical data:

```sql
WITH RECURSIVE employee_hierarchy AS (
    SELECT employee_id, first_name, manager_id, 1 AS level
    FROM employees WHERE manager_id IS NULL
    UNION ALL
    SELECT e.employee_id, e.first_name, e.manager_id, eh.level + 1
    FROM employees e
    JOIN employee_hierarchy eh ON e.manager_id = eh.employee_id
)
SELECT * FROM employee_hierarchy;
```

Shows organizational hierarchy with levels. PostgreSQL and MySQL 8.0+ require the RECURSIVE keyword. SQL Server and Oracle omit it, and Oracle also requires a column list after the CTE name.
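The recursive CTE can be run end to end on a tiny, hypothetical org chart. This sketch uses Python's sqlite3 module as a stand-in engine. The anchor member picks the top of the tree, and each pass of the recursive member adds the next level of direct reports.

```python
import sqlite3

# SQLite stand-in; the org chart is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, first_name TEXT, manager_id INTEGER)"
)
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(1, "Grace", None), (2, "Alan", 1), (3, "Ada", 1), (4, "Linus", 2)],
)

hierarchy = conn.execute("""
    WITH RECURSIVE employee_hierarchy AS (
        -- Anchor member: employees with no manager.
        SELECT employee_id, first_name, manager_id, 1 AS level
        FROM employees WHERE manager_id IS NULL
        UNION ALL
        -- Recursive member: direct reports of the rows found so far.
        SELECT e.employee_id, e.first_name, e.manager_id, eh.level + 1
        FROM employees e
        JOIN employee_hierarchy eh ON e.manager_id = eh.employee_id
    )
    SELECT first_name, level FROM employee_hierarchy ORDER BY level, employee_id
""").fetchall()

print(hierarchy)  # [('Grace', 1), ('Alan', 2), ('Ada', 2), ('Linus', 3)]
```

Recursion stops on its own when a pass finds no new reports. A cycle in manager_id would make it loop, so production queries often add a depth limit.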

Q79: What’s the difference between CTEs and temporary tables?

CTEs exist only for the duration of one statement and aren’t stored as named tables (although some engines may materialize them internally). Temporary tables are physically created (in SQL Server, inside tempdb) and can be indexed. They persist across multiple statements until you drop them or the session ends. Use CTEs for readability in single queries, temp tables for complex multi-step operations.

Q80: Can you use multiple CTEs in one query?

Yes, separate them with commas:

```sql
WITH
dept_avg AS (
    SELECT department_id, AVG(salary) AS avg_sal
    FROM employees GROUP BY department_id
),
high_depts AS (
    SELECT department_id FROM dept_avg WHERE avg_sal > 60000
)
SELECT e.* FROM employees e
JOIN high_depts h ON e.department_id = h.department_id;
```


Section 3: Database Performance Optimization (35 Questions)

Indexing Strategies and Types

Q81: What is an index in a database?

An index is a data structure that improves data retrieval speed. Like a book’s index helps you find topics without reading everything, a database index helps locate rows without scanning the entire table. Indexes speed up SELECT queries but slow down INSERT, UPDATE, and DELETE since the index needs updating.

Q82: Explain clustered vs non-clustered indexes.

A clustered index determines the physical order of data in the table – like a phone book sorted by last name. A table can have only one clustered index. Non-clustered indexes create a separate structure pointing to data locations – like a book’s index at the back. Tables can have multiple non-clustered indexes.

Q83: When should you create an index?

Create indexes on:

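- Foreign key columns (primary keys are indexed automatically, but most databases other than MySQL’s InnoDB don’t index foreign key columns for you)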

- Columns frequently used in WHERE clauses

- Columns used in JOIN conditions

- Columns used in ORDER BY

- Columns with high selectivity (many unique values)

Avoid indexing:

- Small tables

- Columns frequently updated

- Columns with low selectivity (few unique values like gender)

- Tables with heavy INSERT/UPDATE operations

Q84: What is a composite index?

A composite index includes multiple columns:

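```sql
CREATE INDEX idx_name_dept ON employees(last_name, department_id);
```

Most effective when querying both columns together. Column order matters because the index is sorted by last_name first. It can serve filters on last_name alone or on last_name and department_id together, but generally not on department_id alone. (Some engines, such as Oracle and MySQL 8.0, can sometimes use a skip scan.)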
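The leftmost-prefix rule can be observed with SQLite's EXPLAIN QUERY PLAN, run through Python's sqlite3 module as a stand-in engine. The empty employees table is hypothetical. Plan wording differs between SQLite versions, so the sketch only checks whether the index name shows up in the plan text. SQLite's own skip-scan only kicks in after ANALYZE, which this sketch does not run.

```python
import sqlite3

# SQLite stand-in; empty, hypothetical table with the composite index from above.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (id INTEGER PRIMARY KEY, last_name TEXT, "
    "department_id INTEGER, salary INTEGER)"
)
conn.execute("CREATE INDEX idx_name_dept ON employees(last_name, department_id)")


def plan(sql):
    """Join the detail column (last field) of every EXPLAIN QUERY PLAN row."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


queries = {
    "both columns": "SELECT * FROM employees WHERE last_name = 'Kim' AND department_id = 10",
    "leading column": "SELECT * FROM employees WHERE last_name = 'Kim'",
    "trailing column only": "SELECT * FROM employees WHERE department_id = 10",
}
uses_index = {label: "idx_name_dept" in plan(sql) for label, sql in queries.items()}
print(uses_index)
# {'both columns': True, 'leading column': True, 'trailing column only': False}
```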

Q85: Explain index selectivity.

Selectivity measures how unique values are in a column. High selectivity (many unique values like employee ID) makes good indexes. Low selectivity (few values like gender) makes poor indexes since the index doesn’t narrow down results much.

Q86: What is a covering index?

A covering index includes all columns needed for a query, eliminating the need to access the table:

```sql
CREATE INDEX idx_cover ON employees(department_id, last_name, salary);
```

For this query, the index contains everything needed:

```sql
SELECT last_name, salary FROM employees WHERE department_id = 10;
```
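SQLite reports covering-index use explicitly in its query plan, which makes it a handy stand-in for observing the idea. This sketch runs it through Python's sqlite3 module against a hypothetical employees table with an extra email column. Requesting email forces a trip back to the table, so the same index is no longer covering.

```python
import sqlite3

# SQLite stand-in; hypothetical table, with email deliberately left out of the index.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (id INTEGER PRIMARY KEY, department_id INTEGER, "
    "last_name TEXT, salary INTEGER, email TEXT)"
)
conn.execute("CREATE INDEX idx_cover ON employees(department_id, last_name, salary)")


def plan(sql):
    """Join the detail column (last field) of every EXPLAIN QUERY PLAN row."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


# Every requested column lives in the index: no table lookup needed.
covered = plan("SELECT last_name, salary FROM employees WHERE department_id = 10")
# email is not in the index: SQLite seeks the index, then fetches each table row.
not_covered = plan("SELECT last_name, email FROM employees WHERE department_id = 10")

print("COVERING INDEX" in covered, "COVERING INDEX" in not_covered)  # True False
```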

Q87: What are the disadvantages of having too many indexes?

- Slows down INSERT, UPDATE, and DELETE operations

- Increases storage space

- Complicates query optimizer decisions

- Requires maintenance overhead

Balance is key – index wisely, not excessively.

Q88: How do you identify missing indexes?

In SQL Server:

```sql
SELECT * FROM sys.dm_db_missing_index_details;
```

In MySQL, analyze slow query logs. In PostgreSQL, use pg_stat_statements. Most databases provide tools to suggest helpful indexes based on actual query patterns.

Q89: What is index fragmentation?

Over time, as data is inserted, updated, and deleted, indexes become fragmented – data pages aren’t contiguous. This slows queries as the database reads scattered pages. Regular index maintenance (rebuild or reorganize) addresses fragmentation.

Q90: How do you maintain indexes?

Rebuild indexes periodically to eliminate fragmentation:

SQL Server:

```sql
ALTER INDEX ALL ON employees REBUILD;
```

MySQL:

```sql
OPTIMIZE TABLE employees;
```

Schedule maintenance during low-activity periods since rebuilding can lock tables.

Query Optimization Techniques

Q91: How do you identify slow queries?

Enable slow query logging:

MySQL:

```sql
SET GLOBAL slow_query_log = 'ON';
SET GLOBAL long_query_time = 2;
```

SQL Server: Use Extended Events or Query Store. PostgreSQL: Check pg_stat_statements. Review logs to find queries exceeding acceptable execution times.

Q92: What is an execution plan?

An execution plan shows how the database executes a query – which indexes it uses, join methods, and estimated costs. Use it to identify bottlenecks:

```sql
EXPLAIN SELECT * FROM employees WHERE department_id = 10;
```

Look for table scans, missing indexes, and expensive operations.

Q93: Explain table scans vs index scans.

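A table scan (full scan) reads every row sequentially, which is slow for large tables. An index seek (called an index range scan in Oracle) uses the index tree to jump straight to the matching rows. In SQL Server, an “index scan” means reading the entire index: cheaper than a table scan, but still not a seek. If a query with a selective WHERE clause causes a table scan on a large table, you probably need an index.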

Q94: What is query cost?

The database optimizer estimates the computational cost of different execution strategies and chooses the cheapest. Cost considers CPU, I/O, and memory. Understanding costs helps you write efficient queries and create appropriate indexes.

Q95: How does the database optimizer work?

The optimizer analyzes your query, generates multiple execution plans, estimates their costs based on statistics, and selects the most efficient plan. It considers indexes, join orders, and access methods. Statistics must be current for accurate optimization.

Q96: What are database statistics, and why are they important?

Statistics contain information about data distribution, row counts, and value frequencies. The optimizer uses statistics to estimate costs and choose plans. Outdated statistics lead to poor plan choices and slow queries.

Update statistics:

SQL Server:

```sql
UPDATE STATISTICS employees;
```

Oracle:

```sql
EXEC DBMS_STATS.GATHER_TABLE_STATS('schema', 'employees');
```

Q97: How do you optimize JOIN operations?

- Index foreign key columns

- Join on indexed columns

- Filter early with WHERE clauses

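- Let the optimizer choose the join order; writing smaller tables first rarely changes the plan, so focus on giving it useful indexes

- Use appropriate join types

- Ensure statistics are current

Example: for inner joins, the order you write the tables in usually makes no difference. Cost-based optimizers reorder them unless a hint forces a fixed order. These two queries typically get the same plan:

```sql
-- Written large table first
SELECT * FROM large_table t1 JOIN small_table t2 ON t1.id = t2.id WHERE t2.status = 'active';

-- Written small table first (normally the same plan)
SELECT * FROM small_table t2 JOIN large_table t1 ON t1.id = t2.id WHERE t2.status = 'active';
```

What actually helps is an index on small_table.status, so the filter is cheap, and one on large_table.id, so each matching row can be looked up by key.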

Q98: What is query rewriting?

Rewriting queries to equivalent but more efficient forms:

Instead of:

```sql
SELECT * FROM employees WHERE YEAR(hire_date) = 2020;
```

Write:

sql

SELECT * FROM employees WHERE hire_date >= '2020-01-01' AND hire_date < '2021-01-01';

The second version can use an index on hire_date.
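The effect can be checked with SQLite. SQLite has no YEAR() function, so strftime('%Y', ...) plays its role here (an assumption for illustration; the table, index, and sample dates are made up). Wrapping the column in a function hides it from the index, while the equivalent range predicate can use it, and both forms return the same rows:

```python
import sqlite3

def plan(conn, sql):
    """Concatenate the detail column of EXPLAIN QUERY PLAN."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, hire_date TEXT)")
conn.execute("CREATE INDEX idx_hire_date ON employees(hire_date)")
conn.executemany("INSERT INTO employees (name, hire_date) VALUES (?, ?)", [
    ("Ann", "2019-12-31"), ("Bob", "2020-01-01"), ("Cid", "2020-07-15"),
    ("Dee", "2020-12-31"), ("Eve", "2021-01-01"),
])

# Function applied to the column: the index on hire_date cannot be used
func_sql = "SELECT * FROM employees WHERE strftime('%Y', hire_date) = '2020'"
# Half-open range on the bare column: the index can be searched
range_sql = ("SELECT * FROM employees "
             "WHERE hire_date >= '2020-01-01' AND hire_date < '2021-01-01'")

print(plan(conn, func_sql))   # e.g. SCAN employees
print(plan(conn, range_sql))  # e.g. SEARCH employees USING INDEX idx_hire_date (...)

func_rows = sorted(conn.execute(func_sql).fetchall())
range_rows = sorted(conn.execute(range_sql).fetchall())
assert func_rows == range_rows  # the same three 2020 hires either way
```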

Q99: Explain the N+1 query problem.

It occurs when code runs one query to fetch a list of records, then one additional query per record to fetch related data:

sql

-- Gets 100 employees

SELECT * FROM employees;

-- Then for each employee (100 more queries!)

SELECT * FROM departments WHERE id = ?;

Solution: Use JOINs or batch loading:

sql

SELECT e.*, d.* FROM employees e JOIN departments d ON e.dept_id = d.id;
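A small Python sketch makes the difference visible. It uses the standard sqlite3 module with made-up data and counts queries by hand (an illustration, not any ORM's API), comparing the N+1 pattern with a single JOIN and with batch loading through one IN (...) query:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept_id INTEGER);
""")
conn.executemany("INSERT INTO departments VALUES (?, ?)", [(1, "Sales"), (2, "IT"), (3, "HR")])
conn.executemany("INSERT INTO employees (name, dept_id) VALUES (?, ?)",
                 [(f"emp{i}", i % 3 + 1) for i in range(100)])

def n_plus_one(conn):
    queries = 1
    employees = conn.execute("SELECT id, name, dept_id FROM employees ORDER BY id").fetchall()
    result = []
    for emp_id, name, dept_id in employees:
        # One extra round trip per employee
        dept = conn.execute("SELECT name FROM departments WHERE id = ?", (dept_id,)).fetchone()[0]
        queries += 1
        result.append((emp_id, name, dept))
    return result, queries

def with_join(conn):
    rows = conn.execute(
        "SELECT e.id, e.name, d.name FROM employees e "
        "JOIN departments d ON e.dept_id = d.id ORDER BY e.id"
    ).fetchall()
    return rows, 1

def batch_loaded(conn):
    employees = conn.execute("SELECT id, name, dept_id FROM employees ORDER BY id").fetchall()
    dept_ids = sorted({dept_id for _, _, dept_id in employees})
    # Only "?" placeholders are formatted into the SQL; the values are still bound
    placeholders = ", ".join("?" for _ in dept_ids)
    names = dict(conn.execute(
        f"SELECT id, name FROM departments WHERE id IN ({placeholders})", dept_ids
    ).fetchall())
    return [(e_id, name, names[d_id]) for e_id, name, d_id in employees], 2

slow, slow_count = n_plus_one(conn)
fast, fast_count = with_join(conn)
batch, batch_count = batch_loaded(conn)
print(slow_count, fast_count, batch_count)  # 101 1 2
```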

Q100: How do you optimize subqueries?

Often, JOINs perform better than subqueries:

Instead of:

sql

SELECT * FROM employees WHERE department_id IN (SELECT department_id FROM departments WHERE location = 'NYC');

Use JOIN:

sql

SELECT e.* FROM employees e JOIN departments d ON e.department_id = d.department_id WHERE d.location = 'NYC';

Modern optimizers often convert subqueries to joins automatically, but explicit joins give you more control.

Q101: What is query caching?

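Some databases can cache query results: if the identical query runs again and the underlying data has not changed, the cached result is returned without re-executing the query. This helps for frequently run queries over rarely changing data. Support varies: MySQL had a query cache up to version 5.7 but removed it in MySQL 8.0, and PostgreSQL has no built-in result cache, so result caching is often handled in the application layer instead.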

Q102: How do you handle large result sets efficiently?

- Use pagination with LIMIT and OFFSET

- Implement cursor-based pagination for better performance

- Return only needed columns

- Process in batches

- Consider data warehousing for analytical queries

sql

-- Pagination: skip the first 100 rows, return the next 50

SELECT * FROM orders ORDER BY order_date LIMIT 50 OFFSET 100;
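Cursor-based (keyset) pagination avoids OFFSET's cost of reading and discarding every skipped row: each page remembers the sort key of its last row and asks only for rows after it. A sketch with Python's sqlite3 module, assuming orders are sorted by (order_date, order_id) so that ties on the date are broken by the unique ID (table, index, and data are illustrative):

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, order_date TEXT)")
conn.execute("CREATE INDEX idx_orders_date_id ON orders(order_date, order_id)")
conn.executemany("INSERT INTO orders (order_id, order_date) VALUES (?, ?)",
                 [(i, f"2024-01-{i % 28 + 1:02d}") for i in range(1, 201)])

def fetch_page(conn, after=None, page_size=50):
    """Return one page of (order_id, order_date) rows sorting after the `after` key."""
    if after is None:
        return conn.execute(
            "SELECT order_id, order_date FROM orders "
            "ORDER BY order_date, order_id LIMIT ?", (page_size,)).fetchall()
    last_date, last_id = after
    # Seek past the last row seen instead of counting rows with OFFSET
    return conn.execute(
        "SELECT order_id, order_date FROM orders "
        "WHERE order_date > ? OR (order_date = ? AND order_id > ?) "
        "ORDER BY order_date, order_id LIMIT ?",
        (last_date, last_date, last_id, page_size)).fetchall()

pages, next_key = [], None
while True:
    page = fetch_page(conn, next_key)
    if not page:
        break
    pages.append(page)
    last_id, last_date = page[-1]
    next_key = (last_date, last_id)  # the "cursor" a client sends back for the next page

print(len(pages))  # 4 pages of 50 rows
```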

Q103: What is query parallelization?

Breaking a query into smaller parts that execute simultaneously across multiple CPU cores. Large analytical queries benefit most. Database engines handle this automatically when beneficial, but you can influence it with hints or configuration settings.

Q104: Explain the importance of WHERE clause placement.

Filter as early as possible to reduce data volume:

sql

-- Good: filters before the join

SELECT e.name, d.name

FROM (SELECT * FROM employees WHERE active = 1) e

JOIN departments d ON e.dept_id = d.id;

-- Better: same result with simpler syntax; most optimizers push this WHERE filter below the join automatically

SELECT e.name, d.name

FROM employees e

JOIN departments d ON e.dept_id = d.id

WHERE e.active = 1;

Q105: How do you optimize ORDER BY operations?

- Create indexes on ORDER BY columns

- Order in the same direction as the index

- Filter rows with WHERE before sorting, and use LIMIT so the engine only has to produce the top rows

- Consider denormalization for frequently sorted columns

sql

CREATE INDEX idx_salary ON employees(salary DESC);

SELECT * FROM employees ORDER BY salary DESC LIMIT 10;

Execution Plans and Analysis

Q106: How do you read an execution plan?

Execution plans show operations as a tree. Read from inside out and bottom up. Look for:

- Table scans (bad on large tables)

- Index seeks (good) vs scans (depends)

- Join types and order

- Estimated vs actual rows (big differences indicate stale statistics)

- Expensive operations (high cost percentage)

Q107: What is the difference between estimated and actual execution plans?

Estimated plans show what the optimizer thinks will happen without running the query. Actual plans show what really happened, including actual row counts and execution times. Compare both to identify optimizer mistakes.

Q108: What are table hints?

Hints override the optimizer's decisions (SQL Server syntax shown):

sql

SELECT * FROM employees WITH (INDEX(idx_department)) WHERE department_id = 10;

Use sparingly – the optimizer usually knows best. Useful when you have specific knowledge the optimizer lacks.

Q109: How do you force index usage?

MySQL:

sql

SELECT * FROM employees FORCE INDEX (idx_name) WHERE last_name = 'Smith';

SQL Server:

sql

SELECT * FROM employees WITH (INDEX(idx_name)) WHERE last_name = 'Smith';

Only force when you’re certain the optimizer is wrong.

Q110: What is query plan cache?

Databases cache execution plans to avoid recompiling queries. Subsequent executions reuse cached plans, saving time. Parameterized queries maximize cache hits because the statement text stays identical and only the parameter values change (MySQL syntax shown):

sql

PREPARE stmt FROM 'SELECT * FROM employees WHERE id = ?';

Q111: How do you clear the query plan cache?

SQL Server:

sql

DBCC FREEPROCCACHE;

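MySQL 5.7 and earlier (this empties the query result cache, which was removed in MySQL 8.0; MySQL has no server-wide plan cache comparable to SQL Server's):

sql

RESET QUERY CACHE;

Clear the plan cache only when you suspect bad cached plans: every query must then be recompiled, which can cause a temporary CPU spike. In SQL Server you can also remove a single plan by passing its plan_handle to DBCC FREEPROCCACHE.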

Q112: What causes plan regressions?

Plan regressions occur when a previously fast query becomes slow due to:

- Outdated statistics

- Parameter sniffing issues

- Data distribution changes

- Index fragmentation

- Schema changes

Q113: Explain parameter sniffing.

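The optimizer creates plans based on the first parameter values it sees. If later executions use different values with different data distributions, the cached plan might be inefficient. Solutions include forcing recompilation (OPTION (RECOMPILE) in SQL Server), query hints such as OPTIMIZE FOR a representative value, or OPTIMIZE FOR UNKNOWN, which builds the plan from average statistics instead of the sniffed value.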

Q114: What is adaptive query processing?

Modern databases adjust execution plans during runtime based on actual data encountered. If the optimizer’s estimates are wrong, adaptive processing can switch strategies mid-execution, improving performance for complex queries.

Q115: How do you compare execution plans?

Most database tools let you compare plans visually. Look for:

- Different join orders

- Different index choices

- Changed operation types

- Cost differences

Identify what changed and why one plan is better.

Partitioning and Sharding

Q116: What is table partitioning?

Partitioning divides a large table into smaller, manageable pieces called partitions, while logically remaining one table. Each partition can be stored, indexed, and maintained independently. A MySQL example:

sql

CREATE TABLE orders (

order_id INT,

order_date DATE,

amount DECIMAL

) PARTITION BY RANGE (YEAR(order_date)) (

PARTITION p2021 VALUES LESS THAN (2022),

PARTITION p2022 VALUES LESS THAN (2023),

PARTITION p2023 VALUES LESS THAN (2024)

);

Q117: What are the benefits of partitioning?

- Improved query performance (partition pruning)

- Easier maintenance (archive/delete old partitions)

- Better manageability of large tables

- Parallel query execution per partition

- Faster backup and restore of specific partitions

Q118: Explain different types of partitioning.

- Range partitioning: Based on value ranges (dates, numbers)

- List partitioning: Based on specific value lists (regions, categories)

- Hash partitioning: Based on hash function results (distributes evenly)

- Composite partitioning: Combination of methods

Q119: What is partition pruning?

The optimizer automatically excludes partitions that cannot contain matching rows:

sql

SELECT * FROM orders WHERE order_date = '2023-05-15';

Only the 2023 partition is scanned, not all years. This dramatically improves performance on partitioned tables.
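Partition pruning can be illustrated with a toy model in plain Python. This is a hypothetical in-memory structure, not how any engine stores partitions: rows are routed to year partitions by the same VALUES LESS THAN rule as the PARTITION BY RANGE example, and a lookup by date touches only the partition whose range can contain it:

```python
import bisect
from datetime import date

class RangePartitionedTable:
    """Toy range-partitioned table: partition i holds years below upper_bounds[i]."""

    def __init__(self, names, upper_bounds):
        self.names = names                # e.g. ["p2021", "p2022", "p2023"]
        self.bounds = upper_bounds        # exclusive upper bounds (VALUES LESS THAN)
        self.partitions = {name: [] for name in names}

    def _partition_for(self, year):
        i = bisect.bisect_right(self.bounds, year)
        if i == len(self.bounds):
            raise ValueError(f"no partition for year {year}")
        return self.names[i]

    def insert(self, order_id, order_date):
        self.partitions[self._partition_for(order_date.year)].append((order_id, order_date))

    def find_by_date(self, order_date):
        """Return (matching rows, partitions scanned) for an equality predicate."""
        name = self._partition_for(order_date.year)  # pruning: a single candidate partition
        rows = [r for r in self.partitions[name] if r[1] == order_date]
        return rows, [name]

orders = RangePartitionedTable(["p2021", "p2022", "p2023"], [2022, 2023, 2024])
orders.insert(1, date(2021, 3, 1))
orders.insert(2, date(2022, 8, 9))
orders.insert(3, date(2023, 5, 15))

rows, scanned = orders.find_by_date(date(2023, 5, 15))
print(rows, scanned)  # [(3, datetime.date(2023, 5, 15))] ['p2023']
```

As in MySQL, a row outside every defined range is rejected, which is why new partitions must be added ahead of time (Q124).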

Q120: What is database sharding?

Sharding distributes data across multiple database servers, with each shard containing a subset of data. Unlike partitioning (one database), sharding uses multiple databases, improving scalability for massive datasets.

Q121: How do you choose a sharding key?

Select a key that:

- Distributes data evenly across shards

- Allows queries to target specific shards

- Avoids hotspots (uneven load)

- Aligns with your access patterns

Common choices: user ID, geographic region, customer ID.
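A common way to turn a sharding key into a shard is to hash it. The sketch below is an illustration, not any particular product's scheme: it hashes customer IDs with a stable hash (Python's built-in hash() is randomized per process for strings, so hashlib is used instead) and checks that keys spread evenly across four hypothetical shards:

```python
import hashlib
from collections import Counter

SHARDS = ["shard0", "shard1", "shard2", "shard3"]  # hypothetical shard names

def shard_for(customer_id: int) -> str:
    """Map a sharding key to a shard with a stable hash, so every app server agrees."""
    digest = hashlib.sha256(str(customer_id).encode()).digest()
    return SHARDS[int.from_bytes(digest[:8], "big") % len(SHARDS)]

load = Counter(shard_for(cid) for cid in range(1, 10_001))
print(load)  # roughly 2,500 keys per shard
```

Plain modulo hashing moves most keys to a different shard when the shard count changes, which is one reason rebalancing is hard (Q122); consistent hashing or a lookup directory are common alternatives.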

Q122: What are the challenges of sharding?

- Complex queries across shards

- Maintaining referential integrity

- Rebalancing when adding/removing shards

- Increased application complexity

- Cross-shard transactions

- Backup and recovery coordination

Q123: What is horizontal vs vertical partitioning?

Horizontal partitioning divides rows across partitions, like splitting customers by region; sharding is horizontal partitioning spread across separate servers. Vertical partitioning divides columns: frequently accessed columns in one table, rarely accessed ones in another. Both improve performance, in different ways.

Q124: How do you maintain partitioned tables?

- Add new partitions for future time periods

- Archive or drop old partitions

- Rebuild partition indexes periodically

- Update partition statistics

- Monitor partition size and growth

sql

ALTER TABLE orders ADD PARTITION (PARTITION p2024 VALUES LESS THAN (2025));

Q125: Can you partition indexes?

Yes, indexes can be partitioned the same way as tables:

- Local indexes: Each partition has its own index

- Global indexes: One index across all partitions

Local indexes are easier to maintain, global indexes sometimes offer better query performance.


Section 4: Backup and Recovery (30 Questions)

Backup Types and Strategies

Q126: What is a full backup?

A full backup copies the entire database completely. It’s the foundation of any backup strategy. While it takes the longest time and most storage, full backups allow complete restoration with a single backup file.

Q127: What is an incremental backup?

An incremental backup copies only data changed since the last backup (of any type). It’s fast and storage-efficient but requires all previous backups for restoration. If you lose one incremental backup, you can’t restore fully.

Q128: What is a differential backup?

A differential backup copies all changes since the last full backup. Faster than full backups but slower than incremental. For restoration, you need only the full backup plus the latest differential – simpler recovery than incremental.
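The restore-chain difference can be sketched in Python. The function below is a hypothetical helper, assuming backups are listed oldest first as (type, label) pairs; it picks which files a restore to the newest backup needs:

```python
def restore_chain(backups):
    """Return the backup labels needed to restore to the newest backup.

    backups: list of (kind, label), oldest first; kind is "full", "diff" or "incr".
    An incremental depends on the previous backup of any type; a differential
    depends only on the last full backup. Raises ValueError if there is no full backup.
    """
    last_full = max(i for i, (kind, _) in enumerate(backups) if kind == "full")
    chain = [backups[last_full][1]]
    for kind, label in backups[last_full + 1:]:
        if kind == "diff":
            # A newer differential contains everything since the full backup,
            # so it supersedes earlier differentials and incrementals
            chain = [chain[0], label]
        elif kind == "incr":
            chain.append(label)
    return chain

week_incr = [("full", "Sun"), ("incr", "Mon"), ("incr", "Tue"), ("incr", "Wed")]
week_diff = [("full", "Sun"), ("diff", "Mon"), ("diff", "Tue"), ("diff", "Wed")]
print(restore_chain(week_incr))  # ['Sun', 'Mon', 'Tue', 'Wed']
print(restore_chain(week_diff))  # ['Sun', 'Wed']
```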

Q129: Which backup strategy should you use?

A common approach:

- Full backup weekly (Sunday night)

- Differential backup daily

- Transaction log backups hourly (for point-in-time recovery)

This balances storage, backup time, and recovery complexity. Adjust frequency based on your data change rate and recovery requirements.

Q130: What is a transaction log backup?

Transaction log backups capture all transactions since the last log backup. They enable point-in-time recovery, restoring to a specific moment, and are essential for minimizing data loss after failures. In SQL Server they are available only under the full or bulk-logged recovery model.

Q131: How do you automate database backups?

Use built-in scheduling:

SQL Server: SQL Server Agent jobs

sql

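BACKUP DATABASE AdventureWorks TO DISK = 'D:\Backups\AdventureWorks.bak';

MySQL: Cron jobs with mysqldump (a cron job cannot answer the password prompt that -p triggers, so store credentials in an option file such as ~/.my.cnf)

bash

0 2 * * * mysqldump --single-transaction database_name > backup.sql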

Oracle: RMAN scripts

PostgreSQL: pg_dump with cron

Q132: What should you verify after taking backups?

- Backup completed successfully

- File size is reasonable

- No corruption in backup file

- Backup can be restored to test environment

- Backup is stored securely offsite

- Retention policies are followed

Never trust backups you haven’t tested restoring.

Q133: What is the 3-2-1 backup rule?

Keep:

- 3 copies of your data

- On 2 different media types

- With 1 copy offsite

This protects against hardware failure, site disasters, and accidental deletion.
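The rule is easy to check mechanically. A sketch in Python, assuming a hypothetical inventory in which each copy records its media type and whether it is stored offsite (the production data itself counts as one of the three copies):

```python
from dataclasses import dataclass

@dataclass
class Copy:
    name: str
    media: str      # e.g. "disk", "tape", "object-storage"
    offsite: bool

def meets_3_2_1(copies):
    """True if there are >= 3 copies, on >= 2 media types, with >= 1 offsite."""
    return (len(copies) >= 3
            and len({c.media for c in copies}) >= 2
            and any(c.offsite for c in copies))

inventory = [
    Copy("production database", "disk", offsite=False),
    Copy("local backup", "disk", offsite=False),
    Copy("cloud backup", "object-storage", offsite=True),
]
print(meets_3_2_1(inventory))      # True
print(meets_3_2_1(inventory[:2]))  # False: two copies, one medium, nothing offsite
```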

Q134: How do you calculate Recovery Time Objective (RTO)?

RTO is the maximum acceptable downtime, set by the business. To check whether you can meet it, estimate your actual recovery time by adding up:

- Time to detect failure

- Time to decide recovery approach

- Time to restore backup

- Time to verify data integrity

- Time to redirect applications

A shorter RTO requires faster restore infrastructure, rehearsed procedures, or standby systems such as replicas.

Q135: What is Recovery Point Objective (RPO)?

RPO is the maximum acceptable data loss measured in time. If your RPO is 1 hour, you can’t lose more than 1 hour of data. This determines backup frequency – hourly transaction log backups for 1-hour RPO.
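Both objectives reduce to simple arithmetic. In this sketch the step durations and targets are made-up example figures: estimated recovery time is the sum of the steps listed under Q134, and worst-case data loss equals the log backup interval when the tail of the log cannot be saved:

```python
from datetime import timedelta

# Hypothetical recovery-step estimates in minutes, following the Q134 list
steps = {
    "detect failure": 10,
    "decide recovery approach": 15,
    "restore backup": 90,
    "verify data integrity": 20,
    "redirect applications": 5,
}
rto_target = timedelta(hours=3)
estimated_recovery = timedelta(minutes=sum(steps.values()))

rpo_target = timedelta(hours=1)
log_backup_interval = timedelta(minutes=60)
worst_case_loss = log_backup_interval  # failure just before the next log backup runs

print(estimated_recovery, estimated_recovery <= rto_target)  # 2:20:00 True
print(worst_case_loss <= rpo_target)                         # True
```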

Point-in-Time Recovery

Q136: What is point-in-time recovery (PITR)?

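PITR restores a database to a specific moment in time, not just to the moment a backup was taken. This is useful when you discover problems hours after they occurred. In SQL Server, restore the full backup without recovering it, then stop the log restore at the target time:

sql

RESTORE DATABASE AdventureWorks FROM DISK = 'full.bak' WITH NORECOVERY;

RESTORE LOG AdventureWorks FROM DISK = 'log.trn' WITH STOPAT = '2024-10-30 10:30:00', RECOVERY;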

Q137: What recovery models does SQL Server support?

- Simple: No point-in-time recovery, minimal logging, automatic transaction log management

- Full: Point-in-time recovery possible, complete logging, requires log backups

- Bulk-Logged: Similar to full but with minimal logging for bulk operations

Choose based on your data criticality and recovery requirements.

Q138: How do you perform a point-in-time recovery?

- Restore the most recent full backup (with NORECOVERY)

- Restore any differential backups (with NORECOVERY)

- Restore transaction log backups in sequence up to the desired point

- Final restore with RECOVERY

sql

RESTORE DATABASE db FROM DISK = 'full.bak' WITH NORECOVERY;

RESTORE LOG db FROM DISK = 'log1.trn' WITH NORECOVERY;

RESTORE LOG db FROM DISK = 'log2.trn' WITH NORECOVERY;

RESTORE DATABASE db WITH RECOVERY;
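Conceptually, the log restores replay changes in order, and STOPAT halts the replay at a timestamp. A toy Python model (not how SQL Server stores its log; the data, timestamps, and the mistaken delete at 10:31 are invented for illustration) shows why stopping at 10:30 recovers the state just before the mistake:

```python
from datetime import datetime

full_backup = {"acct1": 100, "acct2": 250}   # state captured by the full backup

# Log records written after the full backup: (timestamp, operation, key, value)
log = [
    (datetime(2024, 10, 30, 10, 0), "set", "acct1", 120),
    (datetime(2024, 10, 30, 10, 15), "set", "acct3", 75),
    (datetime(2024, 10, 30, 10, 31), "delete", "acct2", None),  # the mistake
    (datetime(2024, 10, 30, 10, 45), "set", "acct1", 130),
]

def restore(full, log_records, stop_at=None):
    """Restore the full backup (NORECOVERY), then replay log records up to stop_at."""
    db = dict(full)
    for ts, op, key, value in sorted(log_records, key=lambda r: r[0]):
        if stop_at is not None and ts > stop_at:
            break  # STOPAT: ignore everything after the target time
        if op == "set":
            db[key] = value
        else:
            db.pop(key, None)
    return db  # RECOVERY: the database is brought online in this state

print(restore(full_backup, log))  # {'acct1': 130, 'acct3': 75}
print(restore(full_backup, log, stop_at=datetime(2024, 10, 30, 10, 30)))
# {'acct1': 120, 'acct2': 250, 'acct3': 75}
```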

Q139: What is a tail-log backup?

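A tail-log backup captures the log records that have not been backed up yet (the tail of the log), taken after a failure and before restoring. It prevents losing the transactions committed between the last scheduled log backup and the failure, provided the log file is still accessible.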

Q140: How do you recover from accidental data deletion?

If discovered quickly:

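- Take a log backup so the log chain covers the moment of the deletion

- Restore the backups under a new database name, stopping just before the deletion

- Export the affected data from that restored copy

- Leave the production database in its current state

- Reimport the recovered data into production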

If using transaction log backups, you can pinpoint the exact moment before deletion.

Q141: What tools help with backup automation?

- SQL Server: Maintenance Plans, Ola Hallengren scripts

- MySQL: MySQL Enterprise Backup, mysqldump with scripts

- Oracle: RMAN (Recovery Manager)

- PostgreSQL: pg_basebackup, Barman

- Third-party: Veeam, Commvault, Rubrik

Q142: How do you compress backups?

SQL Server:

sql

BACKUP DATABASE db TO DISK = 'backup.bak' WITH COMPRESSION;

MySQL: Use gzip with mysqldump

bash

mysqldump database | gzip > backup.sql.gz

Compression reduces storage and transfer time but increases CPU usage during backup.

Q143: What is backup encryption?

Encrypting backups protects data if backup media is stolen:

sql

BACKUP DATABASE db TO DISK = 'backup.bak'

WITH ENCRYPTION (ALGORITHM = AES_256, SERVER CERTIFICATE = BackupCert);

Always encrypt backups containing sensitive data.

Q144: How do you test backup validity?

Regularly restore backups to test environments:

- Restore to non-production server

- Verify database consistency

- Check data integrity

- Run application smoke tests

- Document restore time for RTO planning

Quarterly testing is minimum; critical databases need monthly testing.

Q145: What is a cold backup vs hot backup?

Cold backup: Database is shut down, guaranteeing consistency but requiring downtime.

Hot backup: Database remains online during backup. No downtime but requires special features like snapshot technology or specific backup tools.

Disaster Recovery Planning

Q146: What should a disaster recovery plan include?

- Contact information for DBA team

- Step-by-step recovery procedures

- Backup location details

- Required access credentials

- Hardware/software requirements

- Recovery time estimates

- Testing schedule and results

- Update procedures

Document thoroughly and keep copies offsite.

Q147: What is a disaster recovery site?

An alternate location where you can restore operations after a disaster. Types include:

- Hot site: Fully equipped, ready immediately

- Warm site: Partially equipped, ready in hours/days

- Cold site: Empty space, ready in days/weeks

Choose based on RTO requirements and budget.

Q148: How do you implement database replication for disaster recovery?

Set up replication to a remote site:

SQL Server: Always On Availability Groups

sql

CREATE AVAILABILITY GROUP MyAG FOR DATABASE MyDB

REPLICA ON 'Primary' WITH (…),

'Secondary' WITH (…);

MySQL: Binary log replication

Oracle: Data Guard

PostgreSQL: Streaming replication

Q149: What is the difference between backup and replication?

Backups are point-in-time copies stored separately. Replication maintains a live copy that updates continuously. Use both – replication for high availability, backups for recovery from logical errors (like accidental deletion, which replicates to the replica).

Q150: How do you handle database corruption?

- Identify corrupted pages/objects

- Attempt automatic repair if available

- Restore from backup if repair fails

- Validate restored data

- Investigate cause to prevent recurrence

sql

-- Check for corruption

DBCC CHECKDB (database_name);

-- Attempt repair (last resort)

ALTER DATABASE database_name SET SINGLE_USER;

DBCC CHECKDB (database_name, REPAIR_ALLOW_DATA_LOSS);

ALTER DATABASE database_name SET MULTI_USER;

Q151: What is the importance of backup retention policies?

Retention policies define how long to keep backups. Consider:

- Regulatory requirements (often 7 years)

- Storage costs

- Recovery scenarios (long-term audits)

- Database size and change rate

Common approach: Keep daily backups for 30 days, weekly for 6 months, monthly for years.
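That "daily for 30 days, weekly for 6 months, monthly for years" rule can be expressed as a function. In this sketch the specifics are assumptions: weekly backups are the Sunday ones, monthly backups are those taken on the first day of the month, 6 months is approximated as 182 days, and monthly copies are kept for 7 years:

```python
from datetime import date

def keep_backup(backup_date: date, today: date) -> bool:
    """Grandfather-father-son retention: daily 30 days, weekly 6 months, monthly 7 years."""
    age = (today - backup_date).days
    if age < 0:
        raise ValueError("backup date is in the future")
    if age <= 30:
        return True                                  # every daily backup
    if age <= 182 and backup_date.weekday() == 6:    # weekday() == 6 means Sunday
        return True                                  # weekly backups
    if age <= 7 * 365 + 2 and backup_date.day == 1:  # about 7 years, allowing for leap days
        return True                                  # monthly backups
    return False

today = date(2024, 10, 30)
print(keep_backup(date(2024, 10, 15), today))  # True: within 30 days
print(keep_backup(date(2024, 9, 16), today))   # False: a Monday older than 30 days
print(keep_backup(date(2024, 1, 1), today))    # True: first of the month
```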

Q152: How do you restore a database to a different server?

- Copy backup files to target server

- Restore database:

sql

RESTORE DATABASE new_name FROM DISK = 'backup.bak'

WITH MOVE 'data_file' TO 'D:\new_path\data.mdf',

MOVE 'log_file' TO 'D:\new_path\log.ldf';

- Update server-specific settings

- Recreate logins and permissions

- Update connection strings in applications

Q153: What is database mirroring?

An older SQL Server high-availability solution that maintains an exact copy of a database on another server. The principal sends its transaction log records to the mirror, which replays them. If the principal fails, the mirror takes over. Database mirroring is deprecated; Always On Availability Groups are its modern replacement.

Q154: How do you backup very large databases efficiently?

- Use compressed backups

- Implement file group backups (backup portions separately)

- Consider snapshot-based backups

- Use backup striping (multiple backup files simultaneously)

- Leverage backup solutions with deduplication

- Schedule during low-activity windows

Q155: What post-recovery steps are critical?

After recovery:

- Verify database consistency (DBCC CHECKDB)

- Update statistics

- Rebuild fragmented indexes

- Test application connectivity

- Verify critical queries work

- Monitor performance

- Communicate restoration completion

- Document what happened and lessons learned


Section 5: Security and User Management (25 Questions)

User Authentication and Authorization

Q156: What is the difference between authentication and authorization?

Authentication verifies who you are (username/password). Authorization determines what you can do (permissions). You must be authenticated before being authorized. Think of it like entering a building – your ID badge authenticates you, but your clearance level authorizes which rooms you can enter.

Q157: What authentication methods do databases support?

- SQL authentication: Database manages credentials

- Windows authentication: OS manages credentials (SQL Server)

- LDAP/Active Directory: Centralized directory service

- Certificate-based: Uses digital certificates

- Kerberos: Network authentication protocol

- Multi-factor authentication: Additional security layer

Q158: How do you create database users?

SQL Server:

sql

CREATE LOGIN user_name WITH PASSWORD = 'StrongPassword123!';

USE database_name;

CREATE USER user_name FOR LOGIN user_name;

MySQL:

sql

CREATE USER 'user_name'@'localhost' IDENTIFIED BY 'password';

PostgreSQL:

sql

CREATE USER user_name WITH PASSWORD 'password';

Q159: What is the principle of least privilege?

Grant users only the minimum permissions needed to perform their jobs. Don’t give everyone admin rights “just in case.” This limits damage from compromised accounts or insider threats. Review and audit permissions regularly.

Q160: How do you grant permissions to users?

sql

-- Grant SELECT on a specific table

GRANT SELECT ON employees TO user_name;

-- Grant multiple permissions

GRANT SELECT, INSERT, UPDATE ON orders TO user_name;

-- Grant all privileges on every table in a database (MySQL syntax)

GRANT ALL PRIVILEGES ON database.* TO user_name;

Q161: How do you revoke permissions?

sql

-- Revoke a specific permission

REVOKE INSERT ON employees FROM user_name;

-- Revoke all privileges on a database (MySQL syntax)

REVOKE ALL PRIVILEGES ON database.* FROM user_name;

Q162: What are database roles?

Roles group permissions together for easier management. Instead of granting permissions individually to each user, grant permissions to roles and add users to roles (PostgreSQL syntax shown):

sql

CREATE ROLE analyst;

GRANT SELECT ON ALL TABLES IN SCHEMA public TO analyst;

GRANT analyst TO user_name;

Q163: What built-in roles do databases typically have?

SQL Server: sysadmin, db_owner, db_datareader, db_datawriter, db_ddladmin

MySQL: root, several privilege levels (global, database, table)

PostgreSQL: role attributes (SUPERUSER, CREATEDB, CREATEROLE, REPLICATION) plus predefined roles such as pg_read_all_data and pg_monitor

Never use sysadmin/root for applications – create specific accounts with limited permissions.

Q164: How do you implement role-based access control (RBAC)?

- Define roles based on job functions (analyst, developer, manager)

- Grant appropriate permissions to each role

- Assign users to roles

- Review and update periodically

sql

CREATE ROLE sales_team;

GRANT SELECT ON customers, orders TO sales_team;

GRANT INSERT, UPDATE ON orders TO sales_team;

GRANT sales_team TO sales_user1, sales_user2;
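How a database resolves role-based permissions can be modelled in a few lines of Python. This is a simplified, hypothetical model built from the sales_team example above; real engines also handle object ownership, DENY rules, schema-level grants, and nested roles:

```python
from collections import defaultdict

class AccessControl:
    """Minimal RBAC: permissions are granted to roles, roles are granted to users."""

    def __init__(self):
        self.role_perms = defaultdict(set)   # role -> {(privilege, table)}
        self.user_roles = defaultdict(set)   # user -> {role}

    def grant_to_role(self, role, privileges, tables):
        for table in tables:
            for privilege in privileges:
                self.role_perms[role].add((privilege, table))

    def grant_role(self, role, *users):
        for user in users:
            self.user_roles[user].add(role)

    def revoke_role(self, role, user):
        self.user_roles[user].discard(role)

    def is_allowed(self, user, privilege, table):
        # A user may act if any of their roles carries the permission
        return any((privilege, table) in self.role_perms[role]
                   for role in self.user_roles[user])

acl = AccessControl()
acl.grant_to_role("sales_team", ["SELECT"], ["customers", "orders"])
acl.grant_to_role("sales_team", ["INSERT", "UPDATE"], ["orders"])
acl.grant_role("sales_team", "sales_user1", "sales_user2")

print(acl.is_allowed("sales_user1", "UPDATE", "orders"))     # True
print(acl.is_allowed("sales_user1", "DELETE", "orders"))     # False: never granted
print(acl.is_allowed("sales_user1", "UPDATE", "customers"))  # False: SELECT only
```

Because permissions live on the role, onboarding or offboarding a person is a single role membership change rather than a series of individual grants.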

Q165: What is row-level security?

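Row-level security restricts which rows users can see based on their identity. In PostgreSQL, for example (assuming each order row stores the salesperson's database role name in a salesperson column):

sql

ALTER TABLE orders ENABLE ROW LEVEL SECURITY;

CREATE POLICY sales_policy ON orders

FOR SELECT TO sales_users

USING (salesperson = current_user);

Users only see their own sales records, even though everyone queries the same table.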

Data Encryption Methods

Q166: What is Transparent Data Encryption (TDE)?

TDE encrypts database files at rest – the data, log files, and backups. Encryption/decryption happens automatically without application changes. If someone steals the physical hard drive, they can’t read the data without the encryption key.

Q167: How do you enable TDE?

SQL Server:

sql

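USE master;

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'StrongPassword!';

CREATE CERTIFICATE TDE_Cert WITH SUBJECT = 'TDE Certificate';

USE database_name;

CREATE DATABASE ENCRYPTION KEY WITH ALGORITHM = AES_256

ENCRYPTION BY SERVER CERTIFICATE TDE_Cert;

ALTER DATABASE database_name SET ENCRYPTION ON;

Back up TDE_Cert and its private key immediately: without them, the encrypted database and its backups cannot be restored on another server.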

Q168: What is column-level encryption?

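Encrypting specific columns containing sensitive data (credit cards, SSNs). Users without the decryption key see only encrypted values. In SQL Server, for example, the ssn column would be VARBINARY, and the symmetric key SSN_Key must first be opened in the session with OPEN SYMMETRIC KEY:

sql

INSERT INTO customers (name, ssn)

VALUES ('John Doe', EncryptByKey(Key_GUID('SSN_Key'), '123-45-6789'));

SELECT name, CONVERT(varchar, DecryptByKey(ssn)) AS ssn FROM customers;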

Q169: What is the difference between symmetric and asymmetric encryption?

Symmetric encryption uses the same key for encryption and decryption – faster but requires secure key distribution. Asymmetric encryption uses public/private key pairs – slower but solves key distribution problems. Use symmetric for data at rest, asymmetric for key exchange and digital signatures.

Q170: How do you protect encryption keys?

- Store in hardware security modules (HSM)

- Use key management services (AWS KMS, Azure Key Vault)

- Implement key rotation policies

- Separate key storage from data storage

- Backup keys securely offsite

- Restrict key access to minimal personnel

Never store keys in application code or configuration files.

Q171: What is Always Encrypted?

A SQL Server feature that encrypts data end-to-end. Data is encrypted in the application, transmitted encrypted, stored encrypted, and only decrypted in the client application. The database server never sees plaintext, protecting against DBA access and server compromises.

Q172: How do you implement SSL/TLS for database connections?

Enable encrypted connections between applications and database:

MySQL:

sql

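ALTER USER 'user'@'%' REQUIRE SSL;

(MySQL 8.0 no longer accepts REQUIRE in GRANT; it is an account property set with CREATE USER or ALTER USER.)

SQL Server: Force encryption in configuration, use certificates

PostgreSQL: Set ssl = on in postgresql.conf and use hostssl entries (instead of host) in pg_hba.conf so only encrypted connections are accepted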

Always use encrypted connections for sensitive data over networks.

Q173: What is data masking?

Showing modified versions of sensitive data to unauthorized users. Stored data remains unchanged, but queries return masked values. In SQL Server (Dynamic Data Masking):

sql

-- Create masked columns

CREATE TABLE customers (

name VARCHAR(100),

email VARCHAR(100) MASKED WITH (FUNCTION = 'email()'),

ssn VARCHAR(11) MASKED WITH (FUNCTION = 'default()')

);

Users without the UNMASK permission, such as developers, see values like “jXXX@XXXX.com” instead of real emails and “XXXX” instead of SSNs.
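To show what the two masking functions in the example produce, here is a Python imitation based on SQL Server's documented behavior: email() keeps the first letter and appends a constant XXX@XXXX.com, and default() on a string column returns XXXX (fewer X characters if the column is shorter than four characters). It is an illustration only; the database itself applies masking in query results:

```python
def mask_email(value: str) -> str:
    """Imitate SQL Server's email() mask: first letter kept, constant suffix."""
    return value[:1] + "XXX@XXXX.com"

def mask_default_string(column_size: int) -> str:
    """Imitate default() for string columns: XXXX, or fewer X's for columns under 4 chars."""
    return "X" * min(4, column_size)

row = {"name": "John Doe", "email": "john.doe@example.com", "ssn": "123-45-6789"}
masked = {
    "name": row["name"],                  # not masked in the table definition
    "email": mask_email(row["email"]),
    "ssn": mask_default_string(11),       # ssn is VARCHAR(11)
}
print(masked)  # {'name': 'John Doe', 'email': 'jXXX@XXXX.com', 'ssn': 'XXXX'}
```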

Q174: How do you audit encryption implementation?

- Verify all sensitive columns are encrypted

- Test decryption permissions

- Confirm backup encryption

- Check network encryption settings

- Review key access logs

- Validate certificate expiration dates

- Test recovery procedures with encrypted backups

Q175: What compliance requirements affect encryption?

- GDPR: Personal data protection

- PCI DSS: Credit card data encryption

- HIPAA: Healthcare data protection

- SOX: Financial data integrity

- Each has specific encryption requirements

Know which regulations apply to your data and implement accordingly.

SQL Injection Prevention

Q176: What is SQL injection?

SQL injection occurs when attackers insert malicious SQL into application inputs, tricking the database into executing unintended commands. Example: entering admin' OR '1'='1 in a username field can bypass authentication. It is one of the most common and dangerous web application vulnerabilities.

Q177: How do parameterized queries prevent SQL injection?

Parameterized queries separate SQL code from data:

python

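# Vulnerable: user input is concatenated into the SQL text

query = "SELECT * FROM users WHERE username = '" + user_input + "'"

# Safe: the ? placeholder is bound to user_input by the driver

query = "SELECT * FROM users WHERE username = ?"

cursor.execute(query, (user_input,))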

Parameters are treated as data only, never as code.
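The difference can be demonstrated end to end with Python's sqlite3 module (the users table and its rows are made up for illustration). The same attacker-controlled string turns the concatenated query into one that matches every row, while the parameterized version simply looks for a user literally named admin' OR '1'='1:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password_hash TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("admin", "x1"), ("alice", "x2"), ("bob", "x3")])

user_input = "admin' OR '1'='1"

# Vulnerable: the input becomes part of the SQL text
vulnerable_sql = "SELECT username FROM users WHERE username = '" + user_input + "'"
leaked = conn.execute(vulnerable_sql).fetchall()

# Safe: the input is bound as a value and never parsed as SQL
safe = conn.execute("SELECT username FROM users WHERE username = ?", (user_input,)).fetchall()

print(len(leaked), len(safe))  # 3 0
```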

Q178: What are prepared statements?

Prepared statements are pre-compiled SQL with placeholders for parameters:

java

PreparedStatement stmt = conn.prepareStatement("SELECT * FROM users WHERE username = ?");

stmt.setString(1, userInput);

ResultSet rs = stmt.executeQuery();

The database knows the SQL structure beforehand, preventing code injection.

Q179: How do stored procedures help prevent SQL injection?

Stored procedures with parameterized inputs provide a safe interface:

sql

CREATE PROCEDURE GetUser

@username NVARCHAR(50)

AS

BEGIN

SELECT * FROM users WHERE username = @username;

END

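Applications call procedures with parameters, not dynamic SQL. A procedure that concatenates its parameters into a string and executes it (for example with EXEC or sp_executesql) is still vulnerable.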

Q180: What input validation should you implement?

- Whitelist allowed characters

- Reject suspicious patterns (SQL keywords, special characters)

- Limit input length

- Validate data types

- Escape special characters if dynamic SQL is unavoidable

Never trust user input – validate everything.
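Whitelisting usually comes down to a regular expression plus type and range checks. A Python sketch for a username field and a numeric field; the allowed pattern (letters, digits, dot, underscore, hyphen; 3 to 30 characters) and the 1 to 1000 range are hypothetical policies to adapt, and validation like this supplements parameterized queries rather than replacing them:

```python
import re

USERNAME_PATTERN = re.compile(r"[A-Za-z0-9._-]{3,30}")  # hypothetical whitelist policy

def validate_username(value: str) -> str:
    """Return the value if it matches the whitelist, otherwise raise ValueError."""
    if not isinstance(value, str):
        raise ValueError("username must be a string")
    if USERNAME_PATTERN.fullmatch(value) is None:
        raise ValueError("username contains disallowed characters or has a bad length")
    return value

def validate_quantity(value: str) -> int:
    """Validate type and range for a numeric field before it reaches SQL."""
    quantity = int(value)  # raises ValueError for non-numeric input
    if not 1 <= quantity <= 1000:
        raise ValueError("quantity out of range")
    return quantity

print(validate_username("alice_01"))  # alice_01
print(validate_quantity("25"))        # 25
```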

Audit and Compliance

Q181: What is database auditing?

Tracking and logging database activities including:

- Who accessed what data

- What changes were made

- When activities occurred

- Unsuccessful access attempts

- Permission changes

Essential for security, compliance, and troubleshooting.

Q182: How do you enable database auditing?

SQL Server:

sql

USE master;

CREATE SERVER AUDIT CompanyAudit TO FILE (FILEPATH = 'C:\Audits\');

ALTER SERVER AUDIT CompanyAudit WITH (STATE = ON);

USE database_name;

CREATE DATABASE AUDIT SPECIFICATION audit_spec FOR SERVER AUDIT CompanyAudit

ADD (SELECT ON dbo.employees BY public)

WITH (STATE = ON);

Q183: What should you audit?

- Login attempts (success and failure)

- Permission changes

- Schema modifications

- Access to sensitive tables

- Data modifications on critical tables

- Admin activities

- Backup and restore operations

Balance security needs with performance impact.

Q184: How do you detect unusual database activity?

- Monitor failed login attempts

- Track queries from unexpected sources

- Alert on after-hours access to sensitive data

- Watch for bulk data exports

- Flag permission escalations

- Review audit logs regularly

Implement automated alerting for suspicious patterns.
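Automated alerting often starts with a sliding-window threshold. A Python sketch, assuming login events have already been pulled from the audit log as (timestamp, user, success) tuples, and that 5 failures within 10 minutes is the (hypothetical) alert threshold:

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

def failed_login_alerts(events, threshold=5, window=timedelta(minutes=10)):
    """Return the users with `threshold` or more failed logins inside any `window`."""
    recent = defaultdict(deque)   # user -> timestamps of recent failures
    flagged = set()
    for ts, user, success in sorted(events, key=lambda e: e[0]):
        if success:
            continue
        failures = recent[user]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window
        while failures and ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(user)
    return flagged

start = datetime(2024, 10, 30, 2, 0)
events = [(start + timedelta(minutes=i), "svc_report", False) for i in range(6)]
events += [(start + timedelta(minutes=30 * i), "alice", False) for i in range(6)]
events.append((start, "bob", True))
print(failed_login_alerts(events))  # {'svc_report'}
```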

Q185: What is database activity monitoring (DAM)?

Real-time monitoring and analysis of database activity. DAM tools watch for:

- Policy violations

- Anomalous behavior

- Potential attacks

- Compliance violations

They provide alerts and detailed audit trails.

Q186: How do you implement change control for databases?

- Require documented approval for schema changes

- Use version control for database scripts

- Test changes in non-production first

- Implement during maintenance windows

- Maintain rollback procedures

- Document all changes

Never modify production databases manually.

Q187: What is database hardening?

Securing databases by:

- Removing default accounts

- Disabling unnecessary features

- Applying security patches promptly

- Using strong passwords

- Limiting network exposure

- Configuring secure defaults

- Regular security reviews

Follow CIS benchmarks for your database platform.

Q188: How do you maintain compliance with data regulations?

- Know applicable regulations (GDPR, HIPAA, PCI DSS)

- Implement required controls (encryption, access logs)

- Document policies and procedures

- Conduct regular audits

- Train staff on compliance requirements

- Maintain audit evidence

- Review and update regularly

Q189: What is database forensics?

Investigating database security incidents:

- Reviewing audit logs

- Analyzing transaction logs

- Identifying compromised accounts

- Determining data exposure

- Reconstructing attacker actions

- Collecting evidence for legal proceedings

Proper auditing makes forensics possible.

Q190: How do you handle security incidents?

- Detect and confirm the incident

- Contain the threat (disable accounts, block IPs)

- Investigate the scope and impact

- Eradicate the vulnerability

- Recover to normal operations

- Document lessons learned

- Update security measures

Have an incident response plan ready.


Section 6: Platform-Specific Questions (40 Questions)

MySQL Administration Specifics

Q191: What storage engines does MySQL support?

- InnoDB: Default, ACID-compliant, supports transactions and foreign keys

- MyISAM: Older, faster for read-heavy workloads, no transaction support

- MEMORY: Stores data in RAM, very fast but volatile

- CSV: Data stored as CSV files

- ARCHIVE: Compressed storage for historical data

Always use InnoDB unless you have specific reasons otherwise.

Q192: How do you optimize MySQL configuration?

Key parameters in my.cnf:

text

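innodb_buffer_pool_size = about 70% of RAM on a dedicated database server

max_connections = based on application needs

innodb_log_file_size = 256M or larger (replaced by innodb_redo_log_capacity in MySQL 8.0.30+)

query_cache_size = 0 (MySQL 5.7 and earlier; the query cache was removed in MySQL 8.0)

tmp_table_size and max_heap_table_size = set to matching values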

Q193: What is the MySQL slow query log?

Logs queries exceeding a time threshold:

sql

SET GLOBAL slow_query_log = 'ON';

SET GLOBAL long_query_time = 2;

SET GLOBAL slow_query_log_file = '/var/log/mysql/slow.log';

Review regularly to identify optimization opportunities.

Q194: How do you perform MySQL replication?

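- Configure the master in my.cnf, then restart MySQL:

text

[mysqld]

server-id = 1

log_bin = mysql-bin

binlog_format = ROW

- Create replication user:

sql

CREATE USER 'repl'@'%' IDENTIFIED BY 'password';

GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%';

- Configure the slave (give it its own server-id, such as 2, in its my.cnf). Take MASTER_LOG_FILE and MASTER_LOG_POS from SHOW MASTER STATUS on the master; the values below are only examples:

sql

CHANGE MASTER TO MASTER_HOST='master_ip',

MASTER_USER='repl', MASTER_PASSWORD='password',

MASTER_LOG_FILE='mysql-bin.000001', MASTER_LOG_POS=107;

START SLAVE;

From MySQL 8.0.23 onward, the equivalent statements are CHANGE REPLICATION SOURCE TO and START REPLICA.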

Q195: What tools help manage MySQL?

- MySQL Workbench: GUI for design, development, administration

- phpMyAdmin: Web-based administration

- Percona Toolkit: Command-line tools for optimization and troubleshooting

- mysqldump: Backup and migration tool

- mysqlcheck: Table maintenance

Q196: How do you backup MySQL databases?

Logical backup with mysqldump:

bash

mysqldump -u root -p --single-transaction --routines --triggers database_name > backup.sql

Physical backup with MySQL Enterprise Backup or Percona XtraBackup for larger databases.

Q197: What is MySQL partitioning?

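Partitioning divides a table into smaller pieces that MySQL stores and manages separately:

```sql
CREATE TABLE orders (
    order_id INT,
    order_date DATE
) PARTITION BY RANGE (YEAR(order_date)) (
    PARTITION p2022 VALUES LESS THAN (2023),
    PARTITION p2023 VALUES LESS THAN (2024),
    PARTITION pmax VALUES LESS THAN MAXVALUE  -- catches later years; without it, inserting a 2024 date fails
);
```

Queries that filter on order_date can skip partitions that cannot contain matching rows (partition pruning). Old data can be removed quickly with ALTER TABLE orders DROP PARTITION p2022. Every unique key on a partitioned table, including the primary key, must include all columns used in the partitioning expression.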
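Here is a minimal Python model of how RANGE partitioning places rows and prunes partitions, using the p2022 and p2023 partitions from the CREATE TABLE above. A MAXVALUE partition is modeled by appending ("pmax", None). It illustrates the rule, not MySQL's implementation, and the function names are hypothetical. A row goes to the first partition whose VALUES LESS THAN bound exceeds YEAR(order_date). As a result, p2022 also receives every year before 2022, and a year beyond the last bound has nowhere to go.

```python
from datetime import date

# Mirrors PARTITION BY RANGE (YEAR(order_date)): each entry is
# (partition name, exclusive upper bound); None plays the role of MAXVALUE.
PARTITIONS = [("p2022", 2023), ("p2023", 2024)]


def route(order_date: date, partitions=PARTITIONS) -> str:
    """Return the first partition whose upper bound exceeds YEAR(order_date)."""
    year = order_date.year
    for name, upper in partitions:
        if upper is None or year < upper:
            return name
    raise ValueError(f"no partition for value {year}")  # MySQL rejects the row too


def prune(start: date, end: date, partitions=PARTITIONS) -> list:
    """Partitions a query with order_date BETWEEN start AND end must scan."""
    selected = []
    lower = None  # inclusive lower bound of the current partition
    for name, upper in partitions:
        if (upper is None or start.year < upper) and (lower is None or end.year >= lower):
            selected.append(name)
        lower = upper
    return selected


if __name__ == "__main__":
    print(route(date(2023, 7, 1)))                      # p2023
    print(prune(date(2023, 1, 1), date(2023, 12, 31)))  # ['p2023']
```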

Q198: How do you troubleshoot MySQL connection issues?

Check:

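- MySQL service is running: systemctl status mysql (the unit is named mysqld on some distributions)

- Network connectivity and firewall rules (default port 3306), plus the server's bind-address setting

- User accounts and allowed hosts: SELECT user, host FROM mysql.user;

- Connection limit: SHOW VARIABLES LIKE 'max_connections'; compared with SHOW STATUS LIKE 'Threads_connected';

- Error log, typically /var/log/mysql/error.log (the actual path is shown by SHOW VARIABLES LIKE 'log_error';)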
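The network check is easy to script. Here is a minimal Python sketch using only the standard socket module, assuming the default port 3306. The helper name and host are hypothetical. A successful TCP connection only proves the server is reachable. Authentication and privilege problems show up only when a client actually logs in.

```python
import socket


def port_is_open(host: str, port: int = 3306, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or the name did not resolve
        return False


if __name__ == "__main__":
    host = "127.0.0.1"  # hypothetical: replace with your MySQL server's address
    if port_is_open(host):
        print("Port 3306 reachable: check credentials, user@host accounts, and max_connections next")
    else:
        print("Port 3306 unreachable: check the service, firewall rules, and bind-address")
```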

Q199: What is the difference between MySQL and MariaDB?

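MariaDB is a community-developed fork of MySQL. Compared with MySQL it offers:

- Its own optimizer and performance improvements

- Additional storage engines, such as Aria and ColumnStore

- Features that arrive on a different schedule than in MySQL (both now support window functions and JSON, but MariaDB stores JSON as text rather than in a binary format)

- Active open-source development

- High compatibility with MySQL, which has narrowed since MySQL 5.7 and 8.0

Older releases were largely interchangeable. For current versions, test applications, replication, and backup tooling before switching between them.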

Q200: How do you upgrade MySQL versions?

- Backup everything

- Review release notes for breaking changes

- Test on non-production environment

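- Run the mysql_upgrade utility (from MySQL 8.0.16 the server performs these upgrade steps itself at startup, and mysql_upgrade is deprecated)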

- Verify application compatibility

- Monitor performance after upgrade

Upgrade one release series at a time (for example, 5.6 → 5.7 → 8.0). In-place upgrades that skip a series are not supported.

Oracle Database and PL/SQL

Q201: What is an Oracle instance vs an Oracle database?

Database: Physical files (datafiles, control files, redo logs)

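Instance: Memory structures (the SGA) and background processes

In a single-instance setup, one instance mounts and opens one database. With Real Application Clusters (RAC), multiple instances running on different servers open the same database.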

Q202: What are Oracle tablespaces?

Logical storage containers holding database objects. Common tablespaces:

- SYSTEM: Data dictionary

- SYSAUX: Auxiliary system data

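- USERS: Default tablespace for user objects in many installations

- TEMP: Temporary segments for operations such as sorts and hash joins

- UNDO (commonly named UNDOTBS1): Undo data for rollback and read consistency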

Q203: How do you create a tablespace?

```sql
CREATE TABLESPACE app_data
    DATAFILE '/u01/oradata/ORCL/app_data01.dbf' SIZE 100M
    AUTOEXTEND ON NEXT 10M MAXSIZE UNLIMITED
    EXTENT MANAGEMENT LOCAL
    SEGMENT SPACE MANAGEMENT AUTO;
```
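MAXSIZE UNLIMITED is less unlimited than it sounds for a normal (smallfile) tablespace. Each datafile is typically capped at 2^22 - 1 blocks, about 32 GB with the common 8 KB block size. Past that point you add another datafile or use a BIGFILE tablespace. The Python arithmetic below works through that cap and the number of 10M AUTOEXTEND steps in the example above. The helper names are hypothetical, and the 8 KB default block size is an assumption.

```python
# Assumption: a smallfile datafile is typically limited to 2**22 - 1 blocks,
# so MAXSIZE UNLIMITED is effectively capped at that many blocks.
MAX_SMALLFILE_BLOCKS = 2**22 - 1
GIB = 1024**3


def smallfile_max_bytes(block_size: int = 8192) -> int:
    """Largest size a single smallfile datafile can reach for a given block size."""
    return MAX_SMALLFILE_BLOCKS * block_size


def autoextend_steps(initial_mb: int, next_mb: int, target_mb: int) -> int:
    """AUTOEXTEND increments needed to grow from initial_mb to at least target_mb."""
    if target_mb <= initial_mb:
        return 0
    return -(-(target_mb - initial_mb) // next_mb)  # ceiling division


if __name__ == "__main__":
    print(f"8 KB blocks: {smallfile_max_bytes() / GIB:.2f} GiB max per datafile")
    print(autoextend_steps(100, 10, 1024), "extensions to grow a 100M file to 1 GiB")
```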

Q204: What is Oracle’s Cost-Based Optimizer?

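The CBO uses optimizer statistics (table row counts, column value distributions, index statistics) to estimate the cost of alternative execution plans, then chooses the cheapest. Keep statistics current:

```sql
-- EXEC is SQL*Plus shorthand for BEGIN ... END;
EXEC DBMS_STATS.GATHER_SCHEMA_STATS('schema_name');
```

The older Rule-Based Optimizer has been desupported since Oracle Database 10g.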

Q205: What are Oracle initialization parameters?

Configuration settings in spfile or pfile:

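```sql
-- SQL*Plus command
SHOW PARAMETER memory_target;

-- SCOPE=BOTH changes the running instance and the spfile (requires an spfile)
ALTER SYSTEM SET memory_target=2G SCOPE=BOTH;
```

Key parameters: memory_target, processes, sessions, db_cache_size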

Q206: How does Oracle handle transactions?

Oracle uses automatic undo management:

- Changes are recorded in the undo tablespace

- Uncommitted changes are invisible to other sessions (read consistency)

- COMMIT makes changes permanent

- ROLLBACK discards changes

A transaction starts implicitly with the first DML statement:

```sql
BEGIN
    UPDATE accounts SET balance = balance - 100 WHERE id = 1;
    UPDATE accounts SET balance = balance + 100 WHERE id = 2;
    COMMIT;
EXCEPTION
    WHEN OTHERS THEN
        ROLLBACK;
        RAISE;  -- re-raise so the caller sees the failure instead of a silent rollback
END;
```
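The same all-or-nothing pattern applies outside PL/SQL. Here is a minimal Python sketch that uses the standard sqlite3 module as a stand-in database; it does not model Oracle's undo tablespace or read consistency. The table layout and function names are hypothetical. The connection's context manager commits when the block succeeds and rolls back when an exception escapes. Because the exception still reaches the caller, the effect is similar to ROLLBACK followed by RAISE.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """In-memory stand-in for the accounts table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 500), (2, 100)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, from_id: int, to_id: int, amount: int) -> None:
    """Move amount between accounts: both updates commit together or neither does."""
    with conn:  # commits on success, rolls back if an exception escapes the block
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?",
                     (amount, from_id))
        (balance,) = conn.execute("SELECT balance FROM accounts WHERE id = ?",
                                  (from_id,)).fetchone()
        if balance < 0:
            raise ValueError("insufficient funds")  # triggers the rollback
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?",
                     (amount, to_id))


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


if __name__ == "__main__":
    conn = make_demo_db()
    transfer(conn, 1, 2, 100)
    try:
        transfer(conn, 1, 2, 1000)
    except ValueError as exc:
        print("rolled back:", exc)
    print(balances(conn))
```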

Q207: What is Oracle RAC?

Real Application Clusters allow multiple instances to access one database simultaneously, providing high availability and scalability. If one node fails, others continue serving requests.

Q208: How do you use Oracle Data Pump?

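Export:

```bash
expdp username/password DIRECTORY=pump_dir DUMPFILE=export.dmp SCHEMAS=schema_name
```

Import:

```bash
impdp username/password DIRECTORY=pump_dir DUMPFILE=export.dmp SCHEMAS=schema_name
```

DIRECTORY names an Oracle directory object (created with CREATE DIRECTORY) on the database server, not an operating system path. Data Pump is faster than the legacy exp/imp utilities and supports filtering, parallel processing, and network transfers.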

Q209: What are Oracle materialized views?

Pre-computed query results stored physically for faster access:

```sql
CREATE MATERIALIZED VIEW sales_summary
BUILD IMMEDIATE
REFRESH COMPLETE ON DEMAND
AS
SELECT product_id, SUM(quantity) AS total_qty, SUM(amount) AS total_amount
FROM sales
GROUP BY product_id;
```

Refresh options:

- ON DEMAND: Refreshed manually, for example with DBMS_MVIEW.REFRESH

- ON COMMIT: Refreshed when a transaction that modifies the base tables commits

- Scheduled: Refreshed at intervals defined with START WITH ... NEXT ... or a scheduler job
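To make ON DEMAND behavior concrete, here is a minimal Python sketch that emulates a complete-refresh materialized view with an ordinary summary table in the standard sqlite3 module. SQLite has no materialized views of its own. The schema, sample rows, and function names are hypothetical. The stored result stays stale after new sales arrive until a refresh recomputes it.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Hypothetical sales table with a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (product_id INTEGER, quantity INTEGER, amount INTEGER)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)",
                     [(1, 1, 10), (1, 2, 20), (2, 1, 15)])
    conn.commit()
    return conn


def refresh_complete(conn: sqlite3.Connection) -> None:
    """Like REFRESH COMPLETE: discard the stored result and recompute it from sales."""
    with conn:
        conn.execute("DELETE FROM sales_summary")
        conn.execute("""
            INSERT INTO sales_summary (product_id, total_qty, total_amount)
            SELECT product_id, SUM(quantity), SUM(amount)
            FROM sales
            GROUP BY product_id""")


def create_sales_summary(conn: sqlite3.Connection) -> None:
    """Like BUILD IMMEDIATE: create the summary table and populate it right away."""
    conn.execute("""CREATE TABLE sales_summary (
        product_id INTEGER PRIMARY KEY, total_qty INTEGER, total_amount INTEGER)""")
    refresh_complete(conn)


def summary(conn: sqlite3.Connection) -> dict:
    rows = conn.execute(
        "SELECT product_id, total_qty, total_amount FROM sales_summary ORDER BY product_id")
    return {pid: (qty, amt) for pid, qty, amt in rows}


if __name__ == "__main__":
    conn = make_demo_db()
    create_sales_summary(conn)
    with conn:
        conn.execute("INSERT INTO sales VALUES (1, 2, 20)")
    print("before refresh:", summary(conn))  # stale until refreshed on demand
    refresh_complete(conn)
    print("after refresh: ", summary(conn))
```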

Q210: How do you write PL/SQL procedures?

```sql
CREATE OR REPLACE PROCEDURE update_salary(
    p_emp_id   IN NUMBER,
    p_increase IN NUMBER
) AS
    v_current_salary NUMBER;
BEGIN
    -- Raises NO_DATA_FOUND if the employee does not exist
    SELECT salary INTO v_current_salary
    FROM employees
    WHERE employee_id = p_emp_id;

    UPDATE employees
    SET salary = salary + p_increase
    WHERE employee_id = p_emp_id;

    COMMIT;
EXCEPTION
    WHEN NO_DATA_FOUND THEN
        DBMS_OUTPUT.PUT_LINE('Employee not found');
    WHEN OTHERS THEN
        ROLLBACK;
        RAISE;
END;
```

Q211: What are Oracle cursors?

Cursors let PL/SQL process query results one row at a time:

```sql
DECLARE
    CURSOR emp_cursor IS SELECT employee_id, first_name FROM employees;
    v_emp_id employees.employee_id%TYPE;
    v_name   employees.first_name%TYPE;
BEGIN
    OPEN emp_cursor;
    LOOP
        FETCH emp_cursor INTO v_emp_id, v_name;
        EXIT WHEN emp_cursor%NOTFOUND;  -- stop after the last row
        DBMS_OUTPUT.PUT_LINE(v_name);
    END LOOP;
    CLOSE emp_cursor;
END;
```

A cursor FOR loop (FOR r IN emp_cursor LOOP ... END LOOP;) opens, fetches from, and closes the cursor automatically.
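Client programs follow the same OPEN / FETCH / EXIT WHEN / CLOSE cycle. As an illustration only, here is the loop from the PL/SQL block rewritten with a Python DB-API cursor from the standard sqlite3 module. There, fetchone() returning None plays the role of %NOTFOUND. The table contents and function name are hypothetical.

```python
import sqlite3


def employee_names(conn: sqlite3.Connection) -> list:
    """Explicit-cursor style loop: open, fetch one row at a time, exit when none remain, close."""
    cur = conn.execute("SELECT employee_id, first_name FROM employees ORDER BY employee_id")  # OPEN
    names = []
    try:
        while True:
            row = cur.fetchone()    # FETCH emp_cursor INTO ...
            if row is None:         # EXIT WHEN emp_cursor%NOTFOUND
                break
            _emp_id, first_name = row
            names.append(first_name)
    finally:
        cur.close()                 # CLOSE emp_cursor
    return names


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, first_name TEXT)")
    conn.executemany("INSERT INTO employees VALUES (?, ?)", [(1, "Ana"), (2, "Raj")])
    for name in employee_names(conn):
        print(name)
```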

Q212: What is Oracle Automatic Storage Management (ASM)?

ASM simplifies storage management by:

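- Automatic load balancing: data is striped across all disks in a disk group

- Mirroring for redundancy (NORMAL or HIGH redundancy disk groups)

- Simplified disk group management: disks can be added or dropped online, with automatic rebalancing

- No separate volume manager or file system needed for database files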

Q213: How do you monitor Oracle performance?

Key views:

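- V$SESSION: Current sessions

- V$SQL: SQL statements in the shared pool

- V$SYSSTAT: Cumulative system statistics

- V$LOCKED_OBJECT: Locked objects

- AWR reports: Periodic performance snapshots (requires the Oracle Diagnostics Pack license)

```sql
-- Top 10 statements by total elapsed time (elapsed_time is in microseconds);
-- FETCH FIRST requires Oracle Database 12c or later
SELECT sql_text, executions, elapsed_time
FROM v$sql
ORDER BY elapsed_time DESC
FETCH FIRST 10 ROWS ONLY;
```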

Q214: What is Oracle Data Guard?

Disaster recovery solution maintaining standby databases:

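- Physical standby: Block-for-block copy kept current by applying redo

- Logical standby: Kept current by converting redo into SQL statements (SQL Apply)

- Automatic failover when Fast-Start Failover is configured through the Data Guard broker; otherwise failover is a manual operation

- A physical standby can serve read-only queries while redo apply continues (Active Data Guard, a separately licensed option)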

Q215: How do you tune PL/SQL code?

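- Use BULK COLLECT for multi-row fetches

- Minimize context switches between the SQL and PL/SQL engines

- Use FORALL for bulk DML

- Avoid implicit data type conversions

- Use a PL/SQL profiler, such as the hierarchical profiler DBMS_HPROF, to identify bottlenecks

```sql
-- Instead of fetching and updating row by row: one bulk fetch, one bulk update
DECLARE
    TYPE emp_tab IS TABLE OF employees%ROWTYPE;
    v_employees emp_tab;
BEGIN
    -- Loads every row at once; for large tables, fetch in chunks with BULK COLLECT ... LIMIT
    SELECT * BULK COLLECT INTO v_employees FROM employees;

    -- Sends all the UPDATEs to the SQL engine in a single batch
    FORALL i IN 1..v_employees.COUNT
        UPDATE employees SET salary = salary * 1.1
        WHERE employee_id = v_employees(i).employee_id;
END;
```

When the change fits in a single statement, a plain UPDATE employees SET salary = salary * 1.1; is faster than either approach.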
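The chunked form of the same idea fetches a limited batch and then updates that batch in one call. Here is a minimal Python sketch with the standard sqlite3 module, where fetchmany plays the role of BULK COLLECT ... LIMIT and executemany the role of FORALL. It is an analogy only: sqlite3 runs in-process, so there is no SQL/PL/SQL context switch to save. The batch size, table, and function name are hypothetical.

```python
import sqlite3


def raise_salaries_in_batches(conn: sqlite3.Connection, pct: int = 10,
                              batch_size: int = 500) -> int:
    """Fetch ids in chunks (like BULK COLLECT ... LIMIT) and update each chunk
    with one executemany call (like FORALL). Returns the number of batches."""
    reader = conn.execute("SELECT employee_id FROM employees ORDER BY employee_id")
    batches = 0
    with conn:  # one transaction covering all batches
        while True:
            rows = reader.fetchmany(batch_size)
            if not rows:
                break
            conn.executemany(
                # Integer arithmetic: salary grows by pct percent, truncated
                "UPDATE employees SET salary = salary + salary * ? / 100 WHERE employee_id = ?",
                [(pct, emp_id) for (emp_id,) in rows],
            )
            batches += 1
    return batches


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, salary INTEGER)")
    conn.executemany("INSERT INTO employees VALUES (?, ?)", [(1, 1000), (2, 2000), (3, 3000)])
    conn.commit()
    print(raise_salaries_in_batches(conn, batch_size=2), "batches")
```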

PostgreSQL Advanced Features

Q216: What makes PostgreSQL unique?

- Extensive data types (JSON, arrays, ranges, geometric)

- Advanced indexing (GiST, GIN, BRIN)

- Full-text search built-in

- MVCC for high concurrency

- Extensibility through custom functions and extensions

- Strong SQL compliance

Q217: How do you work with JSON data in PostgreSQL?

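```sql
-- Store JSON
CREATE TABLE orders (
    id SERIAL PRIMARY KEY,
    order_data JSONB
);

INSERT INTO orders (order_data) VALUES
('{"customer": "John", "items": [{"product": "laptop", "qty": 1}]}');

-- Query JSON: ->> returns a field as text, @> tests containment
SELECT order_data->>'customer' AS customer_name FROM orders;
SELECT * FROM orders WHERE order_data @> '{"customer": "John"}';

-- A GIN index lets containment queries like the one above use an index
CREATE INDEX orders_order_data_idx ON orders USING GIN (order_data);
```

JSONB stores JSON in a decomposed binary format. Inserts are slightly slower than with the text-based JSON type, but queries are faster and JSONB supports GIN indexing.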
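The @> operator tests containment, not equality. The Python function below is a simplified model of the jsonb containment rules described in the PostgreSQL documentation; it is not PostgreSQL's implementation. The function name is hypothetical. It also omits the special case that lets a top-level array contain a bare scalar.

```python
import json


def jsonb_contains(left, right) -> bool:
    """Simplified model of PostgreSQL's left @> right for jsonb values.

    Objects: every key in right must exist in left with a contained value.
    Arrays: every element of right must be contained in some element of left
    (order and duplicates are ignored). Scalars: must be equal.
    """
    if isinstance(right, dict):
        return isinstance(left, dict) and all(
            key in left and jsonb_contains(left[key], value)
            for key, value in right.items()
        )
    if isinstance(right, list):
        return isinstance(left, list) and all(
            any(jsonb_contains(item, wanted) for item in left) for wanted in right
        )
    if isinstance(left, (dict, list)):
        return False
    if isinstance(left, bool) or isinstance(right, bool):
        return left is right  # keep JSON true distinct from the number 1
    return left == right


ORDER = json.loads('{"customer": "John", "items": [{"product": "laptop", "qty": 1}]}')

if __name__ == "__main__":
    print(jsonb_contains(ORDER, {"customer": "John"}))
    print(jsonb_contains(ORDER, {"items": [{"product": "laptop"}]}))
```

Containment matches nested structure: {"items": [{"product": "laptop"}]} matches the sample order even though it omits qty, but {"product": "laptop"} at the top level does not.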

Q218: What are PostgreSQL extensions?

Extensions add functionality:

- pg_stat_statements: Query performance tracking

- PostGIS: Geographic data

- pgcrypto: Encryption functions

- hstore: Key-value storage

- uuid-ossp: UUID generation

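```sql
-- Requires shared_preload_libraries = 'pg_stat_statements' in postgresql.conf (restart needed)
CREATE EXTENSION pg_stat_statements;

-- PostgreSQL 13 renamed total_time to total_exec_time; use total_time on 12 and earlier
SELECT query, calls, total_exec_time
FROM pg_stat_statements
ORDER BY total_exec_time DESC
LIMIT 10;
```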

Q219: How does PostgreSQL handle concurrency?

PostgreSQL uses MVCC (Multi-Version Concurrency Control):

- Readers don’t block writers

- Writers don’t block readers

- Each transaction sees a consistent snapshot

- Old row versions maintained until no longer needed

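As a result, readers and writers rarely wait for each other, which keeps concurrent workloads fast. The trade-off is that old row versions accumulate until VACUUM removes them.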
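The visibility rule behind these guarantees can be modeled in a few lines. The Python sketch below deliberately simplifies PostgreSQL's behavior. Each row version records the transaction that created it (xmin) and the one that deleted or replaced it (xmax), and a snapshot is reduced to a set of committed transaction IDs. Real snapshots also track in-progress transaction ranges, subtransactions, and hint bits. The class and function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RowVersion:
    value: str
    xmin: int                # transaction that created this version
    xmax: int | None = None  # transaction that deleted or replaced it, if any


@dataclass(frozen=True)
class Snapshot:
    my_xid: int
    committed: frozenset     # transactions already committed when the snapshot was taken

    def sees(self, xid: int) -> bool:
        return xid == self.my_xid or xid in self.committed


def visible(row: RowVersion, snap: Snapshot) -> bool:
    """A version is visible if its creator is visible and its deleter is not."""
    if not snap.sees(row.xmin):
        return False
    return row.xmax is None or not snap.sees(row.xmax)


def read(versions: list[RowVersion], snap: Snapshot) -> list[str]:
    return [v.value for v in versions if visible(v, snap)]


# Transaction 200 updates a row created by committed transaction 100:
# the old version is stamped xmax=200 and a new version is added.
VERSIONS = [
    RowVersion("balance=500", xmin=100, xmax=200),
    RowVersion("balance=400", xmin=200),
]

if __name__ == "__main__":
    reader = Snapshot(my_xid=300, committed=frozenset({100}))      # 200 still running
    writer = Snapshot(my_xid=200, committed=frozenset({100}))
    later = Snapshot(my_xid=400, committed=frozenset({100, 200}))  # after 200 commits
    for name, snap in [("reader", reader), ("writer", writer), ("later", later)]:
        print(name, read(VERSIONS, snap))
```

The reader keeps seeing balance=500 while transaction 200 is in progress, without waiting on it. Once no snapshot can see that old version, it becomes a dead tuple for VACUUM to reclaim.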

Q220: What is VACUUM in PostgreSQL?

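VACUUM reclaims space held by dead tuples (row versions that no running transaction can still see):

```sql
-- Standard vacuum: makes dead-tuple space reusable and refreshes planner statistics;
-- runs alongside normal reads and writes
VACUUM ANALYZE table_name;

-- Full vacuum: rewrites the table and returns space to the operating system,
-- but holds an ACCESS EXCLUSIVE lock for the whole run
VACUUM FULL table_name;
```

Autovacuum runs automatically but may need per-table tuning for large tables. Regular vacuuming prevents table bloat and transaction ID wraparound.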
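Large tables need tuning because of how autovacuum decides when to run. It vacuums a table once its dead tuples exceed autovacuum_vacuum_threshold + autovacuum_vacuum_scale_factor × reltuples, with defaults of 50 and 0.2. The quick Python calculation below applies that formula; the function name is hypothetical.

```python
def autovacuum_trigger(reltuples: float, threshold: int = 50,
                       scale_factor: float = 0.2) -> float:
    """Dead tuples a table must exceed before autovacuum vacuums it.

    Defaults are PostgreSQL's autovacuum_vacuum_threshold (50) and
    autovacuum_vacuum_scale_factor (0.2).
    """
    return threshold + scale_factor * reltuples


if __name__ == "__main__":
    for rows in (10_000, 1_000_000, 100_000_000):
        default = autovacuum_trigger(rows)
        tuned = autovacuum_trigger(rows, scale_factor=0.01)
        print(f"{rows:>11,} rows: default {default:>12,.0f}  scale 0.01: {tuned:>10,.0f}")
```

With the defaults, a 100-million-row table accumulates over 20 million dead tuples before autovacuum starts. Lowering the scale factor for that one table, for example with ALTER TABLE big_table SET (autovacuum_vacuum_scale_factor = 0.01) where big_table is a placeholder, brings the trigger down to about 1 million.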

Q221: How do you configure PostgreSQL for performance?

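Key parameters in postgresql.conf (starting points, not fixed rules):

```text
shared_buffers       = 25% of RAM
effective_cache_size = 50-75% of RAM
work_mem             = RAM / max_connections / 2
maintenance_work_mem = 1-2GB
max_connections      = based on application needs
random_page_cost     = 1.1 (for SSDs)
```

work_mem applies to each sort or hash operation, not to each connection, so a single complex query can use several multiples of it.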
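Here is a minimal Python sketch that applies those ratios to a hypothetical 32 GiB server with max_connections = 200. It prints settings in the MB unit that postgresql.conf accepts, and the function names are hypothetical. For this example the work_mem result is about 81MB. Treat it as a ceiling to test against real queries rather than a target.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def pg_memory_settings(ram_bytes: int, max_connections: int) -> dict:
    """Apply the rule-of-thumb ratios from the list above (starting points only)."""
    return {
        "shared_buffers": ram_bytes // 4,               # 25% of RAM
        "effective_cache_size": ram_bytes * 3 // 4,     # upper end of 50-75%
        "work_mem": ram_bytes // max_connections // 2,  # RAM / max_connections / 2
    }


def to_conf_lines(settings: dict) -> list:
    """Render byte counts in the MB unit that postgresql.conf accepts."""
    return [f"{name} = {value // MIB}MB" for name, value in settings.items()]


if __name__ == "__main__":
    for line in to_conf_lines(pg_memory_settings(32 * GIB, max_connections=200)):
        print(line)
```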

Q222: What are PostgreSQL table inheritance and partitioning?

Table inheritance (older approach):

```sql
CREATE TABLE employees (id INT, name TEXT);
CREATE TABLE managers (bonus DECIMAL) INHERITS (employees);
```

Declarative partitioning (modern approach):

sql